
ComfyUI is a visual, node-based workflow tool for Stable Diffusion, but it can consume a lot of VRAM (video memory) and RAM (system memory) when running. If your device has limited memory (such as a 4GB-8GB GPU) or hits an OOM (Out of Memory) error, you can reduce memory usage with the following optimization methods.

In ComfyUI, the main factors that affect memory usage include:

The size of the model itself

Image resolution

Sampler and sampling steps

Additional models such as ControlNet, LoRA, etc.

ComfyUI's running mode

Computational optimization (such as xFormers, Torch 2.0)

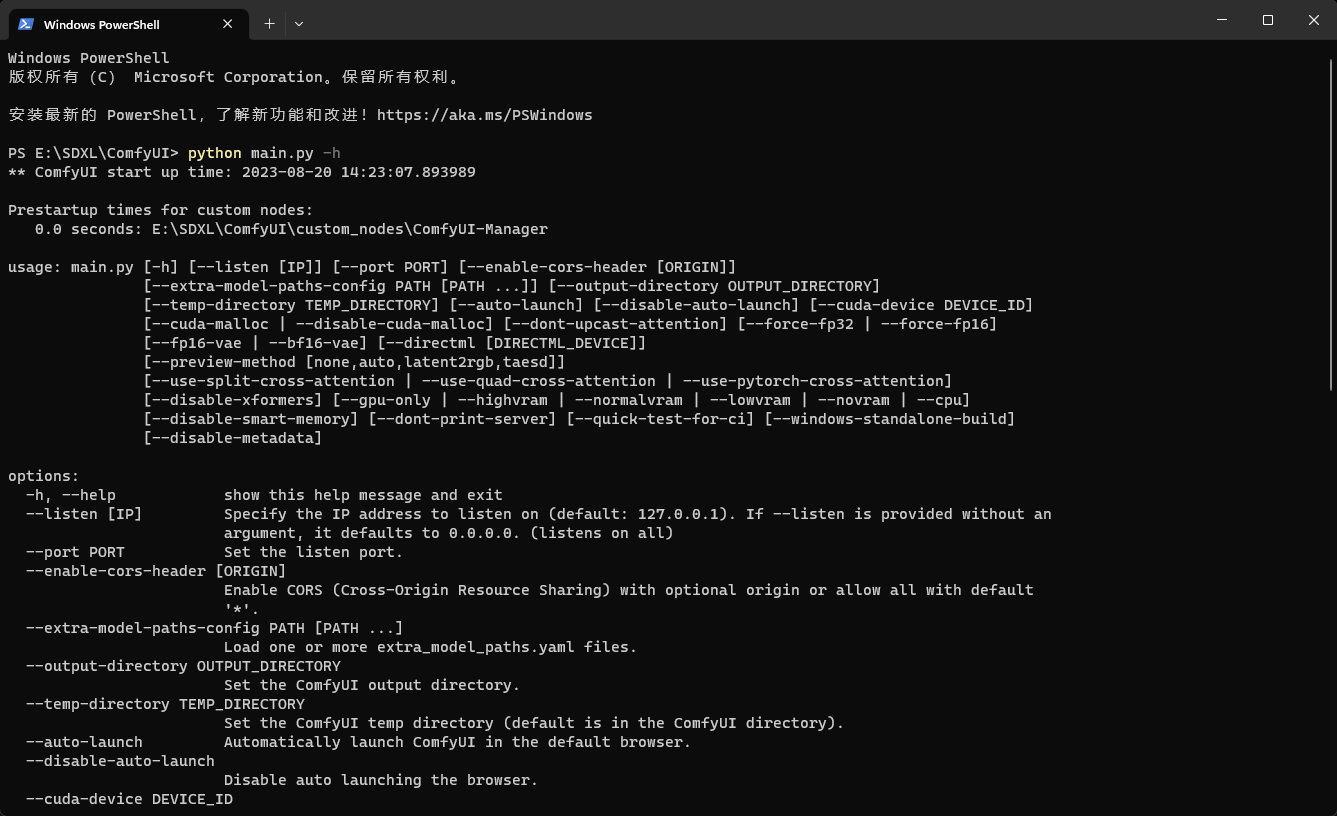

When starting ComfyUI, you can enable some memory-saving modes through command line parameters:

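python main.py --lowvram --use-pytorch-cross-attention

Parameter description:

--lowvram Low-VRAM mode, suited to GPUs with about 4GB of VRAM. Generation is slower, but VRAM usage drops.

--medvram This is an AUTOMATIC1111 WebUI option and does not exist in ComfyUI. On 6GB-8GB GPUs, ComfyUI's default memory management offloads models automatically, so no extra flag is usually needed.

--xformers Also not a ComfyUI flag. ComfyUI uses xFormers attention automatically when the xformers package is installed (--disable-xformers turns it off), which optimizes Transformer computation and reduces VRAM usage.

--disable-metadata Disables saving prompt metadata in output files. Its effect on memory usage is small.

--use-pytorch-cross-attention Uses the scaled-dot-product attention built into PyTorch 2.0, a memory-efficient alternative when xFormers is not installed. (There is no --enable-torch2 flag.)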
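As a rough illustration of choosing startup flags by GPU size, the sketch below builds a launch command from the amount of VRAM. The thresholds and the helper names `pick_flags` and `build_command` are assumptions made for this example, not ComfyUI defaults. It uses only `--lowvram`, `--cpu-vae` and `--cpu`, which should exist in current ComfyUI builds; confirm with `python main.py --help` before relying on them.

```python
def pick_flags(vram_gb: float) -> list[str]:
    """Suggest ComfyUI launch flags for a GPU with `vram_gb` of VRAM.

    The 4 GB / 8 GB thresholds are illustrative assumptions.
    """
    if vram_gb <= 0:
        return ["--cpu"]                    # no usable GPU: run everything on the CPU
    if vram_gb <= 4:
        return ["--lowvram", "--cpu-vae"]   # offload aggressively, decode the VAE on the CPU
    if vram_gb <= 8:
        return ["--lowvram"]
    return []                               # enough VRAM: keep the default behaviour


def build_command(vram_gb: float) -> list[str]:
    """Return the full argv list for launching ComfyUI."""
    return ["python", "main.py", *pick_flags(vram_gb)]


if __name__ == "__main__":
    for size in (0, 4, 8, 12):
        print(f"{size:>2} GB ->", " ".join(build_command(size)))
```

The list returned by `build_command` can be passed to `subprocess.run` from the ComfyUI directory.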

Different Stable Diffusion models have different sizes and use different amounts of VRAM:

SD 1.5 (fp16) → Approximately 3GB VRAM

SDXL (fp16) → Approximately 7GB VRAM

SDXL (fp32) → Approximately 12GB VRAM

SDXL-Turbo → Similar to SDXL (same architecture, but designed for very few sampling steps)

4-bit quantized models (GPTQ, bitsandbytes) → can reduce VRAM usage by 30%-50%

If VRAM is insufficient, an fp16 or 4-bit quantized model is recommended:

Stable Diffusion 1.5 (fp16)

Stable Diffusion XL (fp16)

4-bit quantized SDXL (such as GPTQ quantization)
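The effect of precision on model size can be sanity-checked with simple arithmetic: weight memory is roughly parameter count times bytes per parameter. The sketch below assumes approximate U-Net parameter counts (about 0.86 billion for SD 1.5 and about 2.6 billion for SDXL). It covers weights only, so real usage is higher once text encoders, the VAE and activations are included.

```python
def weights_gib(n_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


# Approximate U-Net parameter counts (assumed figures, for illustration only).
UNET_PARAMS = {"SD 1.5": 0.86e9, "SDXL": 2.6e9}

if __name__ == "__main__":
    for name, n_params in UNET_PARAMS.items():
        for bits in (32, 16, 4):
            print(f"{name} U-Net at {bits}-bit: {weights_gib(n_params, bits):.2f} GiB")
```

Halving the bits per parameter halves the weight memory, which is why fp16 is the first thing to try on a small GPU.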

When generating high-resolution images, the memory usage will increase dramatically. For example:

512x512 → SD 1.5 about 2GB, SDXL about 4GB

768x768 → SD 1.5 about 4GB, SDXL about 6GB

1024x1024 → SD 1.5 about 6GB, SDXL about 10GB
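One way to see why resolution matters so much: Stable Diffusion works on a latent that is 8 times smaller than the image on each side, with 4 channels, so the number of latent positions grows with the pixel count. The sketch below computes the latent shape and the pixel-count ratio relative to 512x512. The function names are made up for this example, and the ratio is only a rough proxy for memory, not a measurement.

```python
def latent_shape(width: int, height: int, channels: int = 4, factor: int = 8) -> tuple[int, int, int]:
    """Shape (C, H, W) of the latent for an image of the given pixel size."""
    if width % factor or height % factor:
        raise ValueError(f"width and height must be multiples of {factor}")
    return (channels, height // factor, width // factor)


def relative_pixels(width: int, height: int, base: int = 512) -> float:
    """How many times more pixels than a base x base image."""
    return (width * height) / (base * base)


if __name__ == "__main__":
    for w, h in ((512, 512), (768, 768), (1024, 1024)):
        print(f"{w}x{h}: latent {latent_shape(w, h)}, {relative_pixels(w, h):.2f}x the pixels of 512x512")
```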

Optimization suggestions:

Generate small images (512x512 or 768x768) and enlarge them with a super-resolution upscaler.

Use FreeU (a technique that re-weights the U-Net's backbone and skip-connection features; adjusting its parameters can enhance image detail) to keep quality high at lower resolutions without adding memory cost.
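The generate-small-then-upscale suggestion can be planned with a little arithmetic. This sketch works out the base resolution to generate for a given target size and upscale factor, snapping to a multiple of 8 so the size maps cleanly onto the latent grid. `base_size` is a hypothetical helper, not part of ComfyUI.

```python
def base_size(target_w: int, target_h: int, scale: float, multiple: int = 8) -> tuple[int, int]:
    """Resolution to generate so that upscaling by `scale` lands near the target."""
    def snap(value: float) -> int:
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(value / scale / multiple) * multiple)
    return snap(target_w), snap(target_h)


if __name__ == "__main__":
    print(base_size(2048, 2048, 4))  # generate this size, then upscale 4x
    print(base_size(1536, 1024, 2))  # generate this size, then upscale 2x
```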

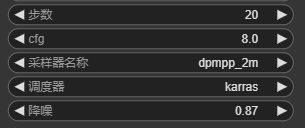

More sampling steps mean longer computation time; peak memory usage depends mostly on the model and resolution rather than on the step count. Commonly used samplers:

DPM++ SDE Karras → High quality with low VRAM usage (recommended)

Euler a → Fast, suitable for low-VRAM devices

DDIM → Low memory footprint, suitable for quick image output

Optimization suggestions:

Set the number of sampling steps to 20-30 and avoid 50+ steps.

Use efficient samplers such as DPM++ SDE Karras.

ControlNet and LoRA will significantly increase memory demand.

Each ControlNet takes up an additional 1GB-3GB

A single LoRA takes up an additional 500MB-1GB

Multiple LoRAs may exceed the available VRAM

Optimization methods:

Avoid loading many ControlNets at the same time; 1-2 ControlNet nodes are recommended.

Use low-memory LoRAs (such as 4-bit LoRAs).

Load LoRAs on demand: enable them only when needed and disable them afterwards.
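To judge whether a workflow is likely to fit, the rough figures quoted in this article can be added up. The sketch below does only that: it sums the base-model estimate with the per-ControlNet and per-LoRA ranges and compares the worst case with the available VRAM. The numbers are this article's approximations, not measurements, and `estimate` and `fits` are names invented for the example.

```python
# Rough figures from this article, in GB (approximations, not measurements).
BASE_GB = {"sd15_fp16": 3.0, "sdxl_fp16": 7.0, "sdxl_fp32": 12.0}
CONTROLNET_GB = (1.0, 3.0)  # (low, high) per ControlNet
LORA_GB = (0.5, 1.0)        # (low, high) per LoRA


def estimate(base: str, controlnets: int = 0, loras: int = 0) -> tuple[float, float]:
    """Return (optimistic, pessimistic) VRAM estimates in GB."""
    low = BASE_GB[base] + controlnets * CONTROLNET_GB[0] + loras * LORA_GB[0]
    high = BASE_GB[base] + controlnets * CONTROLNET_GB[1] + loras * LORA_GB[1]
    return low, high


def fits(base: str, vram_gb: float, controlnets: int = 0, loras: int = 0) -> bool:
    """True if even the pessimistic estimate fits in the given VRAM."""
    return estimate(base, controlnets, loras)[1] <= vram_gb


if __name__ == "__main__":
    low, high = estimate("sd15_fp16", controlnets=1, loras=2)
    print(f"SD 1.5 + 1 ControlNet + 2 LoRAs: {low}-{high} GB")
    print("Fits in 8 GB:", fits("sd15_fp16", 8, controlnets=1, loras=2))
```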

Part of the calculation can be handed over to the CPU to reduce GPU pressure:

VAE decoding (VAEDecode)

CLIP processing

Preprocessing (such as canny, depth)

In ComfyUI, you can manually set some tasks to run on the CPU, or run everything on the CPU with the --cpu startup parameter (this is much slower).

If system memory runs short (for example, when low-VRAM mode offloads model weights from VRAM to RAM), you can enable virtual memory (swap) on Windows/Linux:

Windows settings:

Open System Properties → Advanced → Performance Settings → Advanced

Under Virtual memory, click Change and manually set a size of 16GB or more

Linux settings:

sudo fallocate -l 16G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile
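After enabling swap on Linux, one way to confirm it is active is to read `/proc/meminfo`, which reports `SwapTotal` and `SwapFree` in kB. The sketch below parses that file. The helper names are made up for this example, and the script does nothing on systems without `/proc/meminfo`.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def swap_gib(text: str) -> tuple[float, float]:
    """Return (total, free) swap in GiB."""
    info = parse_meminfo(text)
    return info.get("SwapTotal", 0) / 2**20, info.get("SwapFree", 0) / 2**20


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # Linux only
        total, free = swap_gib(meminfo.read_text())
        print(f"Swap: {total:.1f} GiB total, {free:.1f} GiB free")
```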

Running multiple tasks at the same time in ComfyUI can fill up memory.

Suggestions:

Avoid running multiple graphs at once

Remove or bypass unnecessary nodes

Close other memory-hungry processes in Task Manager/System Monitor

If VRAM is limited (4GB-8GB), the following settings are recommended:

python main.py --lowvram --use-pytorch-cross-attention

In addition:

Use an fp16 or 4-bit quantized model

Reduce the resolution (512x512 or 768x768 recommended)

Set the number of sampling steps to 20-30 and use DPM++ SDE Karras

Turn off unnecessary ControlNets/LoRAs

Let the CPU handle some tasks (such as VAE decoding)

Enable swap (virtual memory) to prevent out-of-memory crashes

These measures can effectively reduce memory usage and improve ComfyUI's performance on low-VRAM devices.