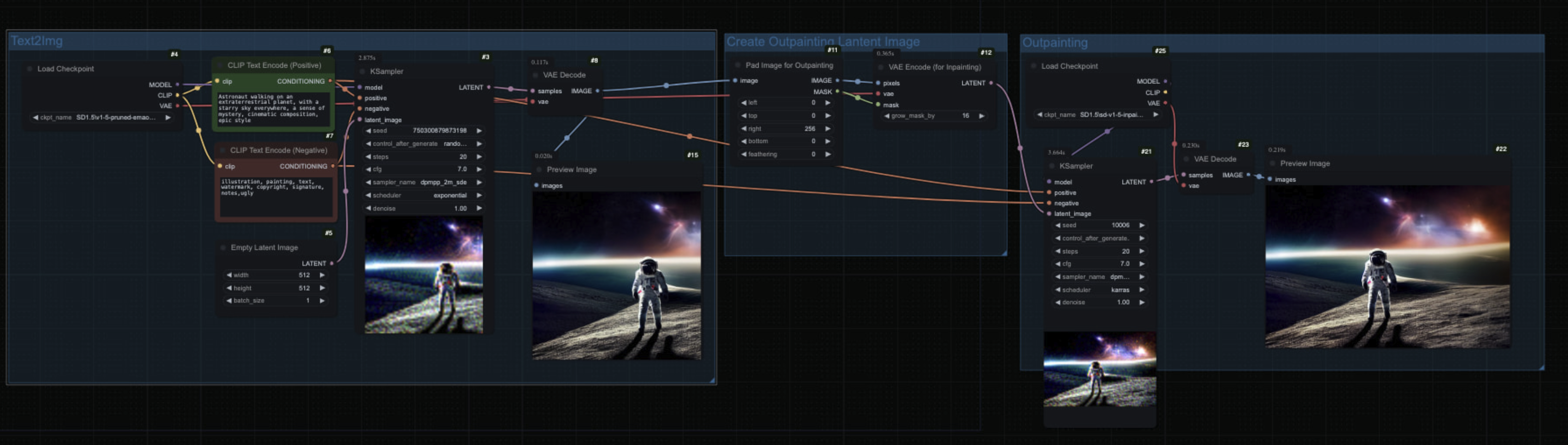

A ComfyUI workflow is a node-based process for generating AI images: users drag functional modules onto a canvas and connect them to build an image-generation pipeline. Unlike conventional AI painting tools, ComfyUI uses a visual node editor in which each node performs one independent function (such as loading a model, adjusting parameters, or repairing an image), and the connections between nodes form the complete logical chain of image generation.

The workflow is the central concept in ComfyUI. Put simply, it is a graph of connected nodes that describes the entire AI image-generation process. Much like building with Lego bricks, you combine nodes with different functions to achieve different effects.
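Because a workflow is a directed graph, a node can only run after every node feeding it has produced its output. The sketch below illustrates that dependency idea with a topological sort (Kahn's algorithm) over a simplified graph written in the style of ComfyUI's API-format JSON, where an input of the form `[node_id, output_index]` is a link. Node parameters are omitted, and the helper names `is_link` and `execution_order` are hypothetical. ComfyUI's real executor works differently (it starts from the output nodes and caches results), so treat this as an illustration only.

```python
from collections import deque


def is_link(value):
    # A link is a two-element list: [source_node_id, output_index].
    return isinstance(value, list) and len(value) == 2 and isinstance(value[0], str)


def execution_order(workflow):
    """Order node IDs so that each node appears after the nodes it reads from."""
    dependents = {node_id: [] for node_id in workflow}
    remaining = {}  # number of distinct upstream nodes not yet run
    for node_id, node in workflow.items():
        sources = {v[0] for v in node["inputs"].values() if is_link(v)}
        for src in sources:
            if src not in workflow:
                raise ValueError(f"node {node_id} links to missing node {src}")
            dependents[src].append(node_id)
        remaining[node_id] = len(sources)

    # Kahn's algorithm: repeatedly run nodes whose inputs are all available.
    ready = deque(sorted(n for n, count in remaining.items() if count == 0))
    order = []
    while ready:
        node_id = ready.popleft()
        order.append(node_id)
        for child in sorted(dependents[node_id]):
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)
    if len(order) != len(workflow):
        raise ValueError("workflow contains a cycle")
    return order


# Simplified text-to-image graph; only the link inputs are shown.
EXAMPLE = {
    "9": {"class_type": "SaveImage", "inputs": {"images": ["8", 0]}},
    "8": {"class_type": "VAEDecode", "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "3": {"class_type": "KSampler", "inputs": {"model": ["4", 0], "positive": ["6", 0],
                                               "negative": ["7", 0], "latent_image": ["5", 0]}},
    "4": {"class_type": "CheckpointLoaderSimple", "inputs": {}},
    "5": {"class_type": "EmptyLatentImage", "inputs": {}},
    "6": {"class_type": "CLIPTextEncode", "inputs": {"clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode", "inputs": {"clip": ["4", 1]}},
}

print(execution_order(EXAMPLE))  # ['4', '5', '6', '7', '3', '8', '9']
```

The loaders and prompt encoders come first, the sampler runs once all four of its inputs exist, and the save node comes last, mirroring how data flows along the connections in the editor.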

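The most commonly used node types are:

1. Model loading nodes: load base models (such as SD1.5 or SDXL), LoRA models, and ControlNet models. The Load Checkpoint node (CheckpointLoaderSimple) loads a base checkpoint and exposes its MODEL, CLIP, and VAE outputs; LoRA and ControlNet models are loaded by separate nodes (such as LoraLoader and ControlNetLoader), so several models can be combined in one workflow.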

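2. Prompt encoding nodes: the CLIPTextEncode node converts a text prompt into conditioning data (embeddings) that the model can use. A workflow typically uses two of them, one for the positive prompt and one for the negative prompt, wired to the sampler's positive and negative inputs.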

3. Sampler nodes: the KSampler node controls the sampling method (such as DPM++ 2M), the number of steps (20 to 30 is a common range), and the CFG value (values around 7 to 12 are commonly used).

4. Image processing nodes: include modules such as LatentUpscale (upscales the latent image to a higher resolution), VAEDecode (decodes the latent representation into a pixel image), and ImageCompositeMasked (composites one image onto another).

5. Output nodes: the SaveImage node writes every image in a batch to the output folder, the PreviewImage node displays results in the interface without saving them to the output folder, and some workflows also add modules for comparing results against earlier versions.
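The CFG value in item 3 sets how strongly the positive prompt is weighted against the negative prompt. As a sketch of the standard classifier-free guidance rule, which ComfyUI's default sampling applies with the negative prompt in the "unconditional" slot (custom nodes can change this), the guided prediction at each sampling step is:

$$
\hat{D}(x_t) = D(x_t, c_{-}) + w\,\bigl(D(x_t, c_{+}) - D(x_t, c_{-})\bigr)
$$

Here $D$ is the model's prediction for the current noisy latent $x_t$, $c_{+}$ and $c_{-}$ are the positive and negative conditioning produced by the two CLIPTextEncode nodes, and $w$ is the CFG value. With $w = 0$ the result is the negative-prompt prediction alone, with $w = 1$ it is the positive-prompt prediction alone, and larger values push the result further along the direction from the negative prompt toward the positive one.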

ComfyUI lets you store workflows as reusable templates by saving them as JSON files. Experienced creators often share complete workflows, including preset node parameters, on GitHub. Examples include:
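As a minimal sketch of templated reuse, the Python below stores a basic text-to-image workflow in ComfyUI's API format (the compact JSON produced by the API-format export, as opposed to the larger UI-format file that also records node positions), reloads it, and fills in a new prompt and seed. The node IDs, prompt text, and checkpoint file name are illustrative placeholders, and the helper names (`save_workflow`, `load_workflow`, `from_template`, `queue_prompt`) are hypothetical. `queue_prompt` assumes a ComfyUI server running locally on its default port 8188 and posts the workflow to its `/prompt` endpoint.

```python
import copy
import json
import urllib.request
from pathlib import Path

# Text-to-image workflow in ComfyUI's API format. Keys are node IDs; a value
# like ["4", 1] links to output 1 of node "4". The checkpoint name is a
# placeholder for whichever model file is installed.
TXT2IMG = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "v1-5-pruned-emaonly.safetensors"}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "6": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a watercolor fox in a forest", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, low quality", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler",
          "inputs": {"model": ["4", 0], "positive": ["6", 0], "negative": ["7", 0],
                     "latent_image": ["5", 0], "seed": 42, "steps": 25, "cfg": 7.0,
                     "sampler_name": "dpmpp_2m", "scheduler": "karras", "denoise": 1.0}},
    "8": {"class_type": "VAEDecode", "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "9": {"class_type": "SaveImage", "inputs": {"images": ["8", 0], "filename_prefix": "fox"}},
}


def save_workflow(workflow, path):
    """Write a workflow dict to a JSON file."""
    Path(path).write_text(json.dumps(workflow, indent=2), encoding="utf-8")


def load_workflow(path):
    """Read a workflow dict back from a JSON file."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def from_template(template, positive_text, seed):
    """Copy a template, then set the positive prompt and the seed.

    Assumes the template has one KSampler node; the positive prompt node is
    found by following the sampler's "positive" link."""
    workflow = copy.deepcopy(template)  # leave the template itself untouched
    sampler = next(n for n in workflow.values() if n["class_type"] == "KSampler")
    sampler["inputs"]["seed"] = seed
    positive_id, _ = sampler["inputs"]["positive"]
    workflow[positive_id]["inputs"]["text"] = positive_text
    return workflow


def queue_prompt(workflow, server="http://127.0.0.1:8188"):
    """Submit a workflow to a running ComfyUI server and return its JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        f"{server}/prompt", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For example, `from_template(load_workflow("txt2img_api.json"), "a paper-cut dragon", seed=7)` produces a new job without modifying the saved file. Locating the prompt node by following the sampler's `positive` link, rather than hard-coding node `"6"`, keeps the helper working if the template's node IDs change.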

• Character design workflows: integrate face repair, pose control, and multi-view generation modules

• Scene generation workflows: include depth-of-field control, light and shadow layering, and material refinement nodes

• Animation storyboard workflows: include special nodes for keyframe generation, motion coherence optimization, and similar tasks

ComfyUI has a rich ecosystem covering images, video, audio, 3D, and more, and it continues to grow with the open-source community. Because there is so much material, expect to spend some time learning what each type of building block does and how the blocks are combined.