The DeepSeek team recently published new research introducing NSA (Native Sparse Attention), a sparse attention mechanism designed to speed up training and inference on long contexts. Its design is optimized for modern hardware, and that is a large part of the efficiency gains it delivers in both training and inference.

NSA brings notable improvements to how large models are trained and served. A series of design optimizations tailored to modern accelerators significantly speeds up inference. Because the sparse attention is used natively during pre-training rather than added afterward, it also reduces pre-training cost. According to the paper, these gains do not come at the expense of quality: models trained with NSA maintain strong performance across a range of tasks.

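The DeepSeek team adopted a hierarchical sparse strategy that splits attention into three parallel branches. The compression branch aggregates blocks of tokens into coarse-grained representations, giving the model an inexpensive view of the global context. The selection branch uses those coarse scores to pick the most relevant blocks and attends to their tokens at full resolution. The sliding-window branch attends to the most recent tokens to capture local detail. The outputs of the three branches are then combined through learned gates. This combination lets the model capture both global context and local detail, improving its ability to process long text. NSA also optimizes memory access and compute scheduling, which substantially reduces latency and resource consumption when training on long contexts.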
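To make the three-branch design concrete, here is a minimal single-query, single-head sketch in pure Python. It is an illustration under simplifying assumptions, not DeepSeek's implementation. The compression branch mean-pools each block of keys and values, whereas the paper uses a learnable compression. The selection branch reuses the compression blocks and keeps the top-n blocks ranked by compressed-attention weight. The three branch outputs are mixed with fixed gate values instead of learned, per-query gates. The names `nsa_attention`, `block`, `top_n`, `window`, and `gates` are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(q, keys, values):
    """Scaled dot-product attention for one query; returns (output, weights)."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, k) * scale for k in keys])
    dim = len(values[0])
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return out, weights


def mean_pool(vectors):
    """Average equal-length vectors (stand-in for a learned compressor)."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def nsa_attention(q, keys, values, block=4, top_n=2, window=4,
                  gates=(1 / 3, 1 / 3, 1 / 3)):
    """Hypothetical three-branch sparse attention for a single query.

    keys/values are the tokens preceding the query. Returns the gated output
    and the indices of the blocks chosen by the selection branch.
    """
    t = len(keys)
    blocks = [(s, min(s + block, t)) for s in range(0, t, block)]

    # 1) Compression: one coarse key/value per block. ASSUMPTION: mean pooling
    #    instead of the paper's learnable compression.
    comp_keys = [mean_pool(keys[s:e]) for s, e in blocks]
    comp_vals = [mean_pool(values[s:e]) for s, e in blocks]
    o_cmp, block_scores = attend(q, comp_keys, comp_vals)

    # 2) Selection: treat the compressed attention weights as block importance
    #    and attend at full resolution to the tokens of the top-n blocks.
    ranked = sorted(range(len(blocks)), key=lambda i: block_scores[i], reverse=True)
    chosen = sorted(ranked[:top_n])
    sel = [j for i in chosen for j in range(*blocks[i])]
    o_sel, _ = attend(q, [keys[j] for j in sel], [values[j] for j in sel])

    # 3) Sliding window: full attention over the most recent `window` tokens.
    o_win, _ = attend(q, keys[-window:], values[-window:])

    # Combine the branches. ASSUMPTION: fixed gates; NSA learns per-query gates.
    g_cmp, g_sel, g_win = gates
    out = [g_cmp * a + g_sel * b + g_win * c for a, b, c in zip(o_cmp, o_sel, o_win)]
    return out, chosen


if __name__ == "__main__":
    q = [1.0, 0.0]
    # Block 1 (tokens 4-7) has keys aligned with q; the other blocks do not.
    keys = [[0.0, 1.0]] * 4 + [[5.0, 0.0]] * 4 + [[0.0, 1.0]] * 4
    values = [[float(i), 1.0] for i in range(12)]
    out, chosen = nsa_attention(q, keys, values, block=4, top_n=1, window=4)
    print(chosen)  # [1]
    print(out)
```

With `top_n=1`, the selection branch picks block 1, the only block whose keys point in the same direction as the query. The query therefore attends at full resolution to tokens 4-7 plus the four tokens in the sliding window, and sees the rest of the context only through the three compressed block summaries. In a real model, the savings come from keeping this block budget fixed as the context grows.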

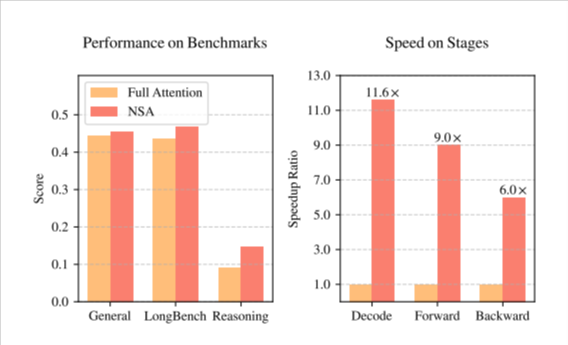

NSA performs strongly across a series of general benchmarks. On long-context tasks and instruction-based reasoning in particular, it matches the full-attention model and in some cases surpasses it. The work is a notable step for efficient AI training and inference, and it may give new momentum to research on long-context models.

NSA paper: https://arxiv.org/pdf/2502.11089v1