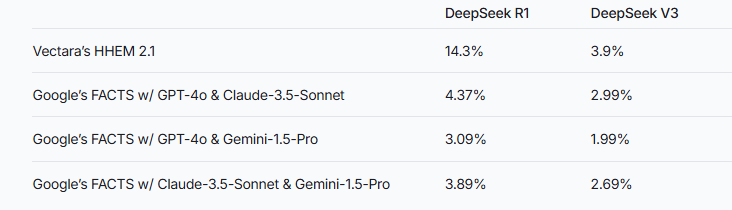

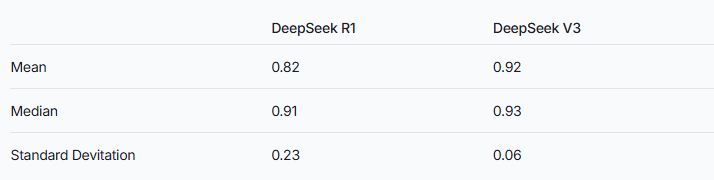

Recently, Vectara's machine learning team ran in-depth hallucination tests on two models in the DeepSeek series. The results showed a hallucination rate of 14.3% for DeepSeek-R1, significantly higher than the 3.9% of its predecessor, DeepSeek-V3. This suggests that, in the course of enhanced reasoning, DeepSeek-R1 produces more content that is inaccurate or inconsistent with the source material. The result has triggered extensive discussion of hallucination rates in reasoning-enhanced large language models (LLMs).

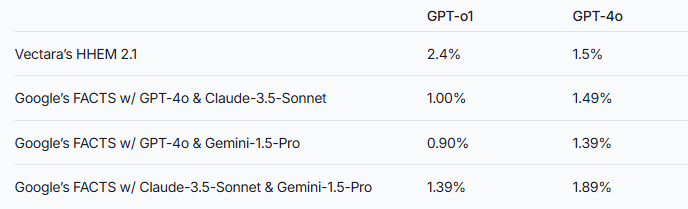

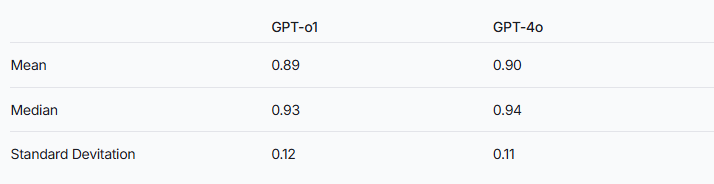

The research team pointed out that reasoning-enhanced models may be more prone to hallucination than ordinary large language models. The pattern is particularly evident when the DeepSeek series is compared with other reasoning-enhanced models. In the GPT series, for example, the difference in hallucination rate between the reasoning-enhanced GPT-o1 and the standard GPT-4o also supports this hypothesis.

To evaluate the two models, the researchers used Vectara's HHEM model and Google's FACTS method as judges. As a specialized hallucination detection tool, HHEM was more sensitive in capturing the increase in DeepSeek-R1's hallucination rate, while FACTS performed relatively poorly in this respect. This suggests that HHEM may be a more effective yardstick than an LLM acting as the judge.
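As a rough illustration of how a headline hallucination rate can be derived from a detector's output, here is a minimal sketch. It assumes the detector returns one consistency score per summary in the range 0 to 1, and that scores below 0.5 count as hallucinated. The function name `hallucination_rate`, the threshold, and the toy scores are all hypothetical and are not taken from Vectara's evaluation code or data.

```python
from typing import Sequence


def hallucination_rate(scores: Sequence[float], threshold: float = 0.5) -> float:
    """Return the fraction of summaries whose consistency score is below `threshold`.

    Assumption: higher scores mean the summary is more consistent with its source.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    # Count the summaries the detector would flag as hallucinated.
    flagged = sum(1 for s in scores if s < threshold)
    return flagged / len(scores)


# Made-up scores for illustration only; not real benchmark data.
toy_scores = [0.92, 0.15, 0.78, 0.40, 0.99, 0.67, 0.88, 0.05]
print(f"{hallucination_rate(toy_scores):.1%}")
```

With a real detector, the score list would come from scoring each (source document, generated summary) pair. Changing the threshold changes the reported rate, so a comparison between models is only meaningful when both are scored with the same setting.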

It is worth noting that DeepSeek-R1's excellent reasoning performance comes with a higher hallucination rate. This may be related to the complex logic that reasoning-enhanced models have to process: as the reasoning becomes more complex, the accuracy of the generated content may suffer. The research team also emphasized that if DeepSeek focuses more on reducing hallucinations during training, it may be possible to strike a good balance between reasoning ability and accuracy.

While reasoning-enhanced models generally exhibit higher hallucination rates, this does not mean they lack advantages in other respects. For the DeepSeek series, hallucination still needs to be addressed in subsequent research and optimization to improve overall model performance.

Reference: https://www.vectara.com/blog/deepseek-r1-hallucinates-more-than-deepseek-v3