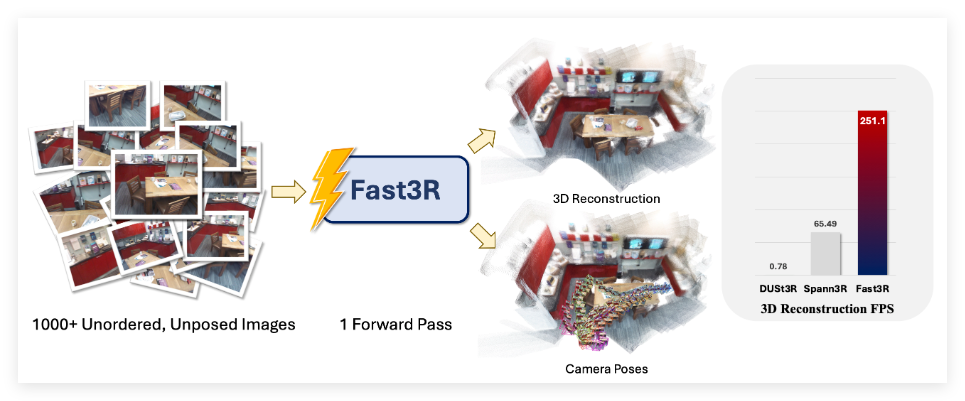

Multi-view 3D reconstruction is a long-standing and challenging task in computer vision, especially when accurate and scalable representations are required. Mainstream methods such as DUSt3R process images in pairs, so reconstructing a scene from many views requires a complex global alignment procedure that is slow and computationally expensive. To address this, the research team proposed Fast3R, a multi-view reconstruction method that can process up to 1,500 images in a single forward pass, greatly improving reconstruction speed.
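To get a feel for why pairwise processing scales poorly, it helps to count the pairs. The sketch below assumes every unordered pair of views is processed exactly once; the function name is hypothetical, and real pipelines may prune pairs or process both orderings, so the numbers are illustrative only.

```python
from math import comb


def pairwise_passes(num_views: int) -> int:
    """Number of unordered image pairs among num_views views."""
    return comb(num_views, 2)


# Compare the pair count with the single joint pass of a multi-view model.
for n in (2, 8, 32, 1500):
    print(f"{n:>5} views -> {pairwise_passes(n):>9} pairs vs. 1 joint pass")
```

The pair count grows quadratically with the number of views, while a model that ingests all views at once needs a single pass regardless of how many views there are.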

The core of Fast3R is a Transformer-based architecture that processes multiple views in parallel, eliminating the need for iterative alignment. Extensive experiments on camera pose estimation and 3D reconstruction show that this approach significantly improves inference speed and reduces error accumulation, making Fast3R a strong alternative for multi-view applications.

To keep Fast3R efficient and scalable, the researchers used a set of large-scale model training and inference techniques: FlashAttention-2 (memory-efficient attention computation), DeepSpeed ZeRO-2 (distributed training optimization), position embedding interpolation (training on short sequences and testing on longer ones), and tensor parallelism (faster multi-GPU inference).
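As a rough illustration of the position embedding interpolation idea, the sketch below stretches a learned embedding table to cover more positions than were seen in training. This is a generic version of the technique, not Fast3R's actual code: the function name, the plain-list representation, and the linear resampling scheme are all assumptions made for the example.

```python
def interpolate_position_embeddings(table, target_len):
    """Linearly resample a (trained_len x dim) embedding table to target_len rows.

    Hypothetical helper: new positions are mapped back into the trained
    index range and blended from the two nearest trained embeddings.
    """
    trained_len = len(table)
    if trained_len < 1 or target_len < 1:
        raise ValueError("both lengths must be at least 1")
    if trained_len == 1 or target_len == 1:
        # Nothing to blend: repeat the first embedding.
        return [list(table[0]) for _ in range(target_len)]

    scale = (trained_len - 1) / (target_len - 1)
    resampled = []
    for i in range(target_len):
        pos = i * scale                        # fractional index into the trained table
        lo = min(int(pos), trained_len - 2)    # left neighbour, clamped so lo + 1 is valid
        frac = pos - lo
        row = [(1 - frac) * a + frac * b for a, b in zip(table[lo], table[lo + 1])]
        resampled.append(row)
    return resampled


# A toy table trained on 3 positions, stretched to 5 positions at test time.
trained = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
stretched = interpolate_position_embeddings(trained, 5)
print(stretched)
```

The first and last rows of the stretched table match the trained endpoints, so the model only ever sees embeddings inside the range it was trained on.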

In terms of computational efficiency, Fast3R shows a significant advantage over DUSt3R on a single A100 GPU. For example, processing 32 images at a resolution of 512×384 takes Fast3R only 0.509 seconds, while DUSt3R takes 129 seconds and runs out of memory at 48 images. Beyond its lower time and memory consumption, Fast3R also scales well with model and data size, which suggests strong potential for large-scale 3D reconstruction.
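The reported timings can be turned into a speedup factor with a few lines of arithmetic. The snippet uses only the 32-image figures quoted above; the variable names are just for illustration.

```python
# Timings reported for 32 images at 512x384 on a single A100 GPU.
fast3r_seconds = 0.509
dust3r_seconds = 129.0
num_images = 32

speedup = dust3r_seconds / fast3r_seconds        # how many times faster Fast3R is
fast3r_per_image = fast3r_seconds / num_images   # average Fast3R time per image

print(f"speedup: {speedup:.0f}x")
print(f"Fast3R time per image: {fast3r_per_image * 1000:.1f} ms")
```

On these figures Fast3R is roughly 250 times faster than DUSt3R for the 32-image case, at about 16 ms per image.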

Project page: https://fast3r-3d.github.io/