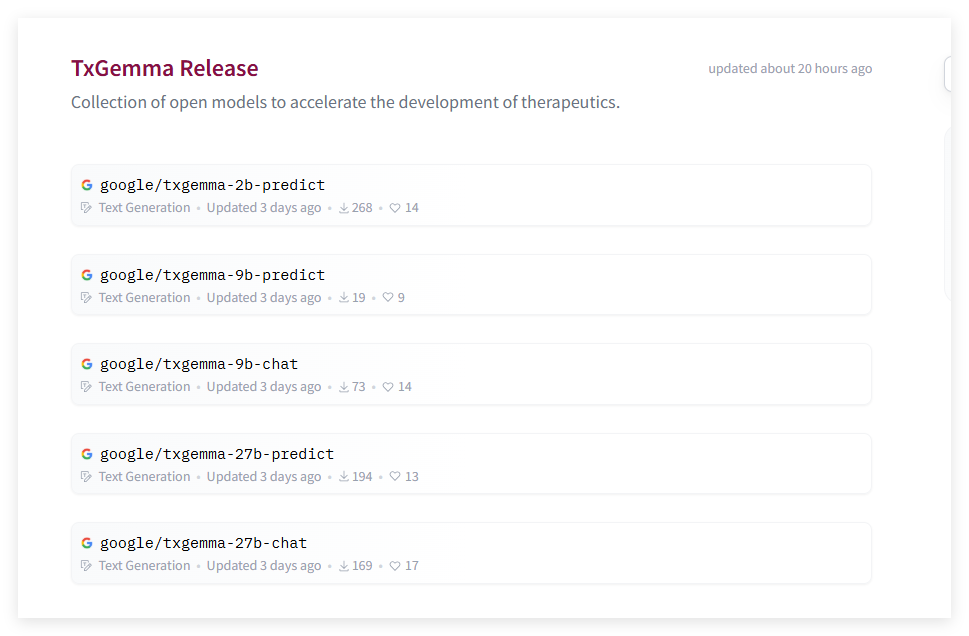

Google AI recently launched TxGemma, a family of general-purpose large language models (LLMs) designed specifically for drug development. TxGemma integrates datasets spanning small molecules, proteins, nucleic acids, diseases and cell lines, and covers multiple stages of the therapeutic development process. The models come in 2 billion, 9 billion and 27 billion parameter sizes. All are built on the Gemma-2 architecture and fine-tuned on a comprehensive therapeutic dataset. The family also includes TxGemma-Chat, an interactive conversational model. Scientists can use it to hold detailed discussions with the model and obtain mechanistic explanations for its predictions, which makes the model more transparent.

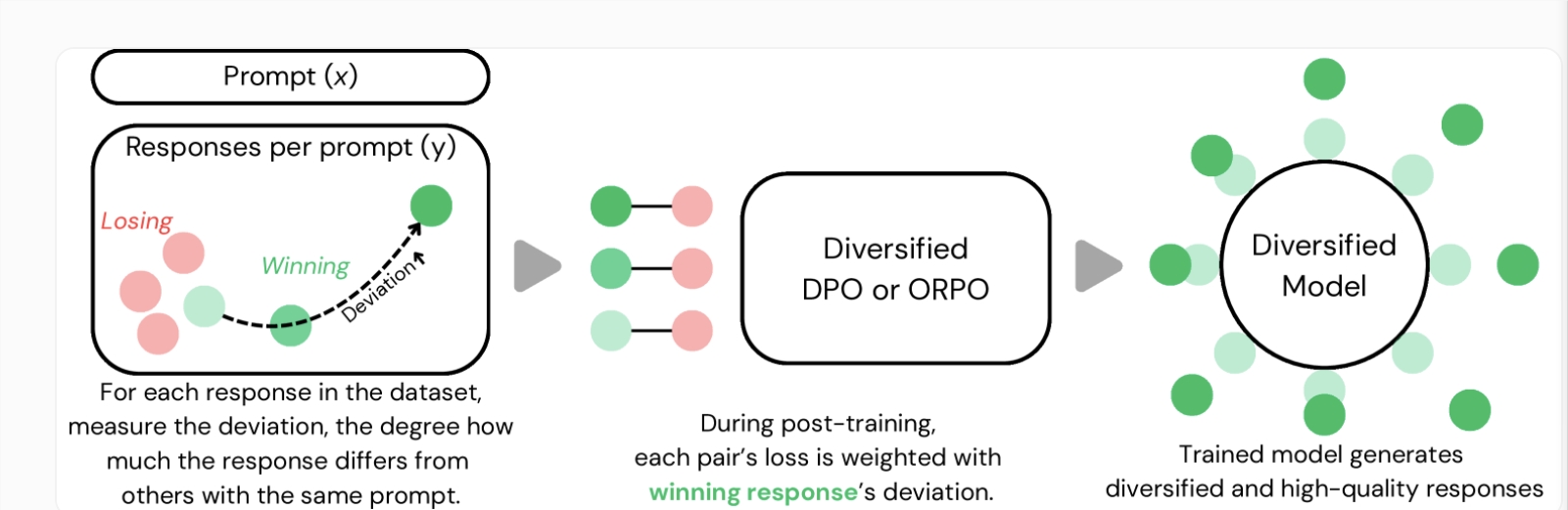

From a technical standpoint, TxGemma draws on the Therapeutics Data Commons (TDC), a curated collection spanning 66 therapeutically relevant datasets. TxGemma-Predict, the predictive variant in the family, performs strongly on these datasets. It matches or exceeds both the generalist and specialist models currently used in therapeutic modeling. Notably, TxGemma's fine-tuning approach offers an important advantage where data is scarce: it can reach high prediction accuracy with significantly fewer training samples.
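As a text-in, text-out LLM, TxGemma-Predict handles TDC tasks by casting each one as a natural-language prompt. The molecule is embedded as a SMILES string, and the model generates the answer as text. The sketch below illustrates that idea for a binary classification task. The template wording and the `build_prompt` and `parse_answer` helpers are hypothetical illustrations, not the official TxGemma prompt format. Consult the model card for the actual templates.

```python
# Hypothetical template: illustrates casting a binary TDC-style classification
# task as a text prompt. This is NOT the official TxGemma template.
TEMPLATE = (
    "Instructions: Answer the following question about drug properties.\n"
    "Question: Given a drug SMILES string, predict whether it {property}.\n"
    "(A) {negative}\n"
    "(B) {positive}\n"
    "Drug SMILES: {smiles}\n"
    "Answer:"
)


def build_prompt(smiles: str, prop: str, negative: str, positive: str) -> str:
    """Fill the hypothetical template for a single molecule."""
    return TEMPLATE.format(property=prop, negative=negative, positive=positive, smiles=smiles)


def parse_answer(generated: str) -> int:
    """Map the model's generated text to a class label: (B) -> 1, (A) -> 0."""
    text = generated.strip()
    if text.startswith("(B)"):
        return 1
    if text.startswith("(A)"):
        return 0
    raise ValueError(f"unrecognized answer: {generated!r}")


if __name__ == "__main__":
    prompt = build_prompt(
        "CC(=O)OC1=CC=CC=C1C(=O)O",  # aspirin
        prop="crosses the blood-brain barrier",
        negative="does not cross the BBB",
        positive="crosses the BBB",
    )
    print(prompt)
```

Because the task is expressed entirely as text, a single fine-tuned model can serve many different TDC tasks by switching the template rather than the architecture.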

TxGemma's practical value is most evident in predicting adverse events in clinical trials, a key step in assessing therapeutic safety. TxGemma-27B-Predict shows strong predictive performance while using significantly fewer training samples than conventional models, which points to gains in data efficiency and reliability. TxGemma's inference speed also supports practical real-time applications. In virtual screening, for example, the 27B-parameter model can process large candidate libraries efficiently.
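In virtual screening, a predictive model scores every molecule in a candidate library, and only the top-ranked hits move on to experiments. The following is a minimal sketch of that loop. It assumes a hypothetical `score_batch` callable that stands in for batched TxGemma-Predict inference. Here that callable is replaced by a toy scorer (`toy_score_batch`) so the example runs without the model.

```python
import heapq
from typing import Callable, Iterator, List, Sequence, Tuple


def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def screen(
    library: Sequence[str],
    score_batch: Callable[[Sequence[str]], List[float]],
    top_k: int,
    batch_size: int = 32,
) -> List[Tuple[float, str]]:
    """Score the library in batches and return the top_k (score, SMILES) pairs, best first."""
    heap: List[Tuple[float, str]] = []  # min-heap holding the best top_k seen so far
    for batch in batched(library, batch_size):
        for smiles, score in zip(batch, score_batch(batch)):
            if len(heap) < top_k:
                heapq.heappush(heap, (score, smiles))
            elif score > heap[0][0]:
                heapq.heapreplace(heap, (score, smiles))  # evict the current worst hit
    return sorted(heap, reverse=True)


def toy_score_batch(batch: Sequence[str]) -> List[float]:
    # Placeholder scorer (hypothetical): in practice this would run the model
    # on one prompt per molecule and convert its answers into scores.
    return [len(s) / 100 for s in batch]


if __name__ == "__main__":
    library = ["CCO", "CC(=O)OC1=CC=CC=C1C(=O)O", "c1ccccc1"]
    print(screen(library, toy_score_batch, top_k=2, batch_size=2))
```

The bounded min-heap keeps memory at O(top_k) regardless of library size. Batching lets the (hypothetical) model call amortize overhead across many molecules.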

TxGemma marks another important advance for Google AI in computational therapeutics research, combining predictive performance, interactive reasoning and data efficiency. By releasing TxGemma publicly, Google enables researchers to validate it further and adapt it to a variety of proprietary datasets. This promotes broader applicability and reproducibility in therapeutic research.

Model: https://huggingface.co/collections/google/txgemma-release-67dd92e931c857d15e4d1e87
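The sketch below maps the family's sizes and variants to Hugging Face repository IDs and loads one with the `transformers` library. It assumes the checkpoints follow the naming `google/txgemma-{size}-{variant}` and that TxGemma-Chat is released only at 9B and 27B. Verify both assumptions on the collection page linked above. The `model_id` and `load` helpers are hypothetical conveniences. The checkpoints may also be gated behind a license agreement that must be accepted on Hugging Face before downloading.

```python
# Assumed naming scheme: google/txgemma-{size}-{variant}; check the collection page.
PREDICT_SIZES = ("2b", "9b", "27b")
CHAT_SIZES = ("9b", "27b")  # assumption: TxGemma-Chat ships at 9B and 27B only


def model_id(size: str, variant: str = "predict") -> str:
    """Return the (assumed) Hugging Face repo ID for a TxGemma checkpoint."""
    size = size.lower()
    allowed = {"predict": PREDICT_SIZES, "chat": CHAT_SIZES}.get(variant)
    if allowed is None:
        raise ValueError(f"unknown variant: {variant!r}")
    if size not in allowed:
        raise ValueError(f"no {variant} checkpoint at size {size!r}")
    return f"google/txgemma-{size}-{variant}"


def load(repo_id: str):
    """Download and load the tokenizer and model (needs network access and license acceptance)."""
    # Imported lazily so the ID helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(repo_id)
    return tokenizer, model


if __name__ == "__main__":
    print(model_id("2b"))          # smallest predictive checkpoint
    print(model_id("27B", "chat"))  # conversational variant
```

Starting from the 2B predictive checkpoint is a reasonable way to prototype prompts cheaply before moving to the larger models.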