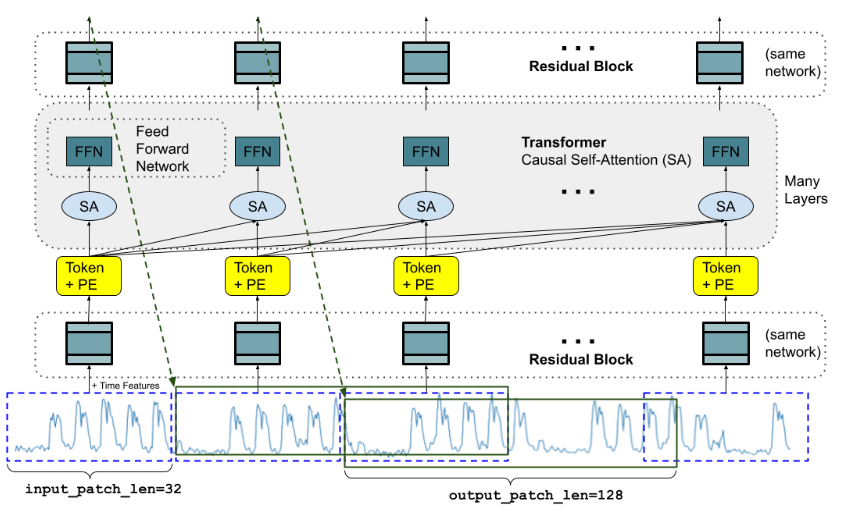

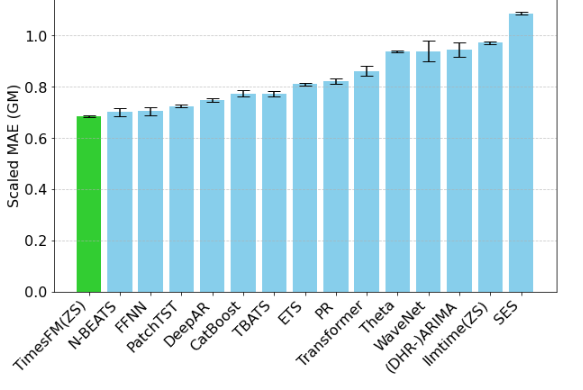

The Google Research team recently released TimesFM 2.0 (Time Series Foundation Model), a pre-trained model built specifically for time series forecasting. The release aims to improve forecasting accuracy and to advance artificial intelligence through open-source releases and scientific sharing.

TimesFM 2.0 performs univariate time series forecasting with context lengths of up to 2048 time points and supports forecast horizons of any length.
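One simple way to keep an input within the 2048-point context length is to pass only the most recent observations. The snippet below is a minimal sketch in plain Python; the helper name `latest_context` and the sample series are hypothetical, and it does not call the TimesFM library.

```python
def latest_context(series, max_len=2048):
    """Return the most recent `max_len` points of a univariate series.

    Series shorter than `max_len` are returned unchanged (as a new list).
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    # Negative slicing keeps the tail, i.e. the newest observations.
    return list(series[-max_len:])


# Illustrative data only: 3000 synthetic observations.
long_series = list(range(3000))
context = latest_context(long_series)
short_context = latest_context([1.0, 2.0, 3.0])
```

Keeping the tail rather than the head is a design choice: for forecasting, the newest observations are usually the most relevant.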

Although the model was trained with a maximum context length of 2048, it can process longer contexts in practice. The model focuses on point forecasts; 10 quantile heads are also provided experimentally, but they have not been calibrated after pre-training.
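Because the quantile heads are uncalibrated, it may be worth checking their empirical coverage on held-out data before relying on them: a well-calibrated 0.9 quantile forecast should sit at or above the actual value roughly 90% of the time. The following is a minimal sketch in plain Python that assumes the quantile forecasts are already available as lists; the function name `empirical_coverage` and all numbers are made up for illustration, and nothing here comes from the TimesFM API.

```python
def empirical_coverage(actuals, quantile_forecasts):
    """Fraction of actual values at or below the forecast, per quantile level.

    actuals: list of observed values, one per forecast step.
    quantile_forecasts: dict mapping a quantile level (e.g. 0.1) to a list
        of forecast values, one per forecast step.
    """
    coverage = {}
    for level, forecasts in quantile_forecasts.items():
        if len(forecasts) != len(actuals):
            raise ValueError("forecast and actual lengths differ")
        # Count the steps where the observation falls at or below the forecast.
        hits = sum(1 for y, q in zip(actuals, forecasts) if y <= q)
        coverage[level] = hits / len(actuals)
    return coverage


# Made-up values, purely for illustration.
actuals = [10.0, 12.0, 11.0, 13.0]
quantile_forecasts = {
    0.1: [9.0, 10.0, 11.5, 11.0],
    0.5: [10.5, 11.0, 11.0, 12.0],
    0.9: [12.0, 13.0, 12.5, 14.0],
}
coverage = empirical_coverage(actuals, quantile_forecasts)
```

A large gap between a quantile level and its measured coverage suggests that quantile should be treated with caution or recalibrated on your own data.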

For pre-training, TimesFM 2.0 uses a combination of datasets: the TimesFM 1.0 pre-training set plus additional datasets from LOTSA. These datasets span multiple domains, such as residential electricity load, solar power generation, and traffic flow, giving the model a broad training base.

With TimesFM 2.0, users can more easily build time series forecasts for applications such as retail sales, stock trends, and website traffic, as well as in fields like environmental monitoring and intelligent transportation.

Model page: https://huggingface.co/google/timesfm-2.0-500m-pytorch