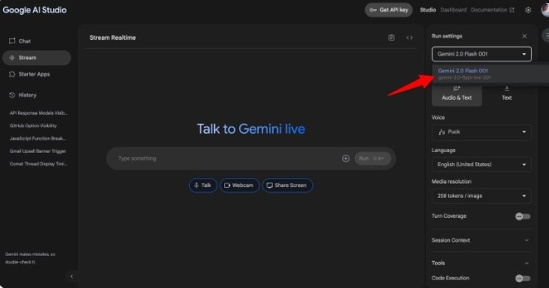

For developers, a truly practical AI tool needs more than raw performance: it has to be deployable, meaning fast, easy to integrate, and capable of real-time responses. Google recently launched the Gemini-2.0-flash-live-001 model to meet these needs.

As the latest update to Google AI Studio, the model marks the Gemini Flash series' move from experimental to practical use, and signals that real-time multimodal AI applications are entering a faster, more reliable stage of development.

Gemini-2.0-flash-live-001 is the first public preview version under the Gemini Live API, designed for building real-time, responsive multimodal AI applications:

Supports streaming inputs such as text, audio, and video

Optimized for processing speed and stability

Well suited to scenarios such as virtual assistants, meeting minutes, and monitoring systems

Compared with the previous experimental version (Gemini 2.0 Flash Experimental), the new model improves response speed and strengthens support for concurrent processing, so developers can deploy it in real business environments with more confidence.

Beginners can get started easily: the supporting APIs and documentation are fully open, the platform guidance is clear, the interface is concise, and no complicated configuration is needed.

This update also marks Google's official launch of billing for the Gemini Flash model, which means:

Developers can obtain higher request rates and stronger resource support

The model moves from a test product to a formal one, suitable for production deployment

API stability and performance are gradually being brought in line with commercial standards

The Google AI Studio platform has been optimized at the same time, further lowering the barrier to calling and integrating the model and letting developers quickly test and launch new features.

The core advantage of Gemini-2.0-flash-live-001 is its real-time multimodal processing capability, which opens up new technical possibilities across multiple industries:

Online education: building highly responsive interactive learning assistants that communicate naturally

Enterprise services: real-time customer support, smart meeting minutes, and summaries

Entertainment and virtual reality: building immersive experiences with responsive AI

Google's goal is clear: to bring AI into an era of "instant interaction" by strengthening the Live API's capabilities.

According to feedback from several technical analysts, Gemini-2.0-flash-live-001 builds on the Flash architecture and:

Introduces lighter-weight inference optimizations

Raises the upper limit on concurrent processing

Keeps latency low while remaining compatible with more development scenarios

This is an excellent starting point for developers who are new to real-time AI applications:

You can start with a simple chatbot or audio transcription and work up to more complex interactive tasks step by step.
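As a rough illustration of what a first chatbot step might involve, the sketch below builds the two JSON messages a text-only client would send over a Live API WebSocket session: a setup message naming the model, then a single user turn. It makes no network calls. The helper names `build_setup_message` and `build_user_turn` are hypothetical, and the field names (`setup`, `generationConfig`, `responseModalities`, `clientContent`, `turnComplete`) and the `models/` prefix reflect one reading of the Live API message format, so check them against the current reference before relying on them.

```python
import json

# Model name taken from the article; the "models/" prefix is an assumption
# about how the raw WebSocket protocol expects the model to be named.
MODEL = "models/gemini-2.0-flash-live-001"


def build_setup_message(model: str = MODEL, modality: str = "TEXT") -> str:
    """Return the first message of a session: model and output modality."""
    payload = {
        "setup": {
            "model": model,
            "generationConfig": {"responseModalities": [modality]},
        }
    }
    return json.dumps(payload)


def build_user_turn(text: str, turn_complete: bool = True) -> str:
    """Wrap one user chat message as a client-content message."""
    payload = {
        "clientContent": {
            "turns": [{"role": "user", "parts": [{"text": text}]}],
            "turnComplete": turn_complete,
        }
    }
    return json.dumps(payload)


if __name__ == "__main__":
    # Print the messages in the order a client would send them.
    print(build_setup_message())
    print(build_user_turn("Hello, can you hear me?"))
```

In a real client these strings would be sent over an authenticated WebSocket connection, or the official SDK would build them for you; keeping the message construction separate makes it easy to inspect before any audio or video streaming is added.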

The launch of Gemini-2.0-flash-live-001 is not only a model iteration, but also a key step taken by Google in the field of real-time AI processing. For developers, this means:

A more mature product experience

A lower barrier to entry

More realistic commercial potential

Over the next few months, Google will continue to adjust the model's stability and billing strategy. Developers are encouraged to try it out and give feedback early to make the most of this new generation of AI tools.