The VITA-MLLM team has announced VITA-1.5, an upgrade to VITA-1.0 aimed at making multimodal interaction faster and more accurate. VITA-1.5 supports both English and Chinese and improves on its predecessor across several performance metrics, giving users a smoother interactive experience.

In VITA-1.5, interaction latency drops from 4 seconds to 1.5 seconds, so users barely notice any delay during voice interaction. Multimodal performance has also improved markedly: the average score across benchmarks such as MME, MMBench, and MathVista rose from 59.8 to 70.8.

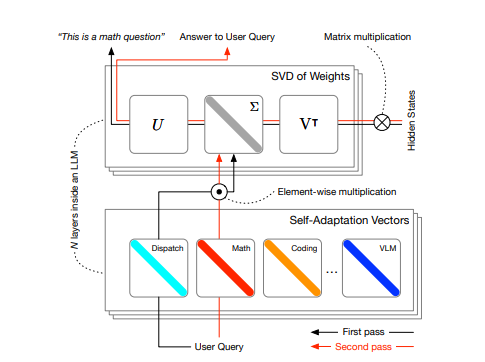

VITA-1.5 also substantially improves speech processing. The error rate of its automatic speech recognition (ASR) drops from 18.4 to 7.5, so voice commands are understood and answered more accurately. VITA-1.5 also introduces an end-to-end text-to-speech (TTS) module that takes the large language model's (LLM's) embeddings directly as input, which makes the synthesized speech more natural and coherent.
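The article reports ASR quality only as an "error rate". Assuming this is word error rate (WER) expressed as a percentage, the standard ASR metric, it is the minimum number of word substitutions, deletions, and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words. A minimal sketch of that computation follows; the function name `word_error_rate` is hypothetical and not part of VITA.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate in percent, via word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dp[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words.
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )

    return 100.0 * dp[len(ref)][len(hyp)] / len(ref)


# One deletion ("the") plus one substitution ("lights" -> "light") over 5 words.
print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 40.0
```

Under this reading, a drop from 18.4 to 7.5 means the recognizer makes roughly 7.5 word errors per 100 reference words instead of about 18.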

To keep its multimodal capabilities balanced, VITA-1.5 uses a progressive training strategy that minimizes the impact of the new speech modules on vision-language performance: the image-understanding score dropped only slightly, from 71.3 to 70.8. With these changes, the team pushes the boundaries of real-time vision and speech interaction further and lays the groundwork for future interactive applications.

Developers can get started with VITA-1.5 through a few command-line steps; both a basic demo and a real-time interactive demo are provided. The real-time interactive experience requires some additional modules to be prepared, such as a Voice Activity Detection (VAD) module. The team is also open-sourcing the code so that developers can participate and contribute.
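The article does not describe how the VAD module works, only that the real-time demo needs one. To illustrate what such a module does, here is a minimal sketch of an energy-threshold VAD. This is a swapped-in technique, much cruder than the model-based detectors real-time systems usually rely on, and not VITA-1.5's own module; whatever VAD the project's demo actually expects is specified in its repository. The sketch splits mono audio into frames, marks frames whose RMS energy exceeds a threshold as speech, and closes a segment after a run of quiet frames. The function name and the `frame_ms`, `threshold`, and `hangover_frames` defaults are hypothetical.

```python
import math


def detect_speech_segments(samples, sample_rate=16000, frame_ms=20,
                           threshold=0.02, hangover_frames=10):
    """Return (start_s, end_s) spans whose frame RMS energy exceeds threshold.

    Hypothetical energy-threshold VAD for illustration only; `samples` is a
    list of mono float samples in [-1.0, 1.0].
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    n_frames = len(samples) // frame_len  # a trailing partial frame is ignored
    segments = []
    seg_start = None    # frame index where the open segment began
    last_speech = None  # frame index of the most recent frame above threshold

    def to_seconds(frame_idx):
        return frame_idx * frame_len / sample_rate

    for idx in range(n_frames):
        frame = samples[idx * frame_len:(idx + 1) * frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        if rms >= threshold:
            if seg_start is None:
                seg_start = idx
            last_speech = idx
        elif seg_start is not None and idx - last_speech >= hangover_frames:
            # Enough consecutive quiet frames: close the segment at the
            # end of the last loud frame.
            segments.append((to_seconds(seg_start), to_seconds(last_speech + 1)))
            seg_start = None

    if seg_start is not None:  # speech ran to the end of the buffer
        segments.append((to_seconds(seg_start), to_seconds(last_speech + 1)))
    return segments
```

The hangover (`hangover_frames` quiet frames before a segment closes) keeps short pauses between words from splitting one utterance into several; in a real-time pipeline, each closed segment is the point at which audio would be handed to the speech model.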

The launch of VITA-1.5 marks another important step forward for interactive multimodal large language models and reflects the team's continued focus on technical innovation and user experience.

Project page: https://github.com/VITA-MLLM/VITA?tab=readme-ov-file
