On March 6, 2025, Beijing Zhiyuan Artificial Intelligence Research Institute announced the open source multimodal vector model BGE-VL, which is a new breakthrough in the field of multimodal retrieval. The BGE-VL model has achieved the best results in multimodal search tasks such as graphic and text retrieval and combined image retrieval, which has significantly improved the performance of multimodal search.

The development of BGE-VL is based on the large-scale synthetic dataset MegaPairs, which efficiently mines multimodal triple data from a massive graphic corpus by combining multimodal representation model, multimodal large model and large language model. This method not only has excellent scalability, but also can continuously generate diverse and high-quality data at extremely low cost, but also greatly improves data quality. Compared with traditional manual annotation data, MegaPairs only needs 1/70 of the data volume to achieve better training results.

In terms of technical implementation, the construction of MegaPairs is divided into two key steps: first, use multiple similarity models to mine a variety of image pairs from the image dataset; second, use open-source multimodal large models and large language models to synthesize open domain search instructions. Through this approach, MegaPairs can scale-based, high-quality and diverse multimodal search instruction datasets without manual participation. The release of this release covers 26 million samples, providing rich data support for the training of multimodal retrieval models.

Based on the MegaPairs dataset, the Zhiyuan BGE team trained three multimodal retrieval models of different sizes, including BGE-VL-Base, BGE-VL-Large and BGE-VL-MLLM. These models show leading performance far exceeding previous methods on multiple tasks. Among the 36 multimodal embedding evaluation tasks of Massive Multimodal Embedding Benchmark (MMEB), BGE-VL achieved optimal performance in both zero-sample performance and supervised fine-tuning performance, proving its good task generalization ability.

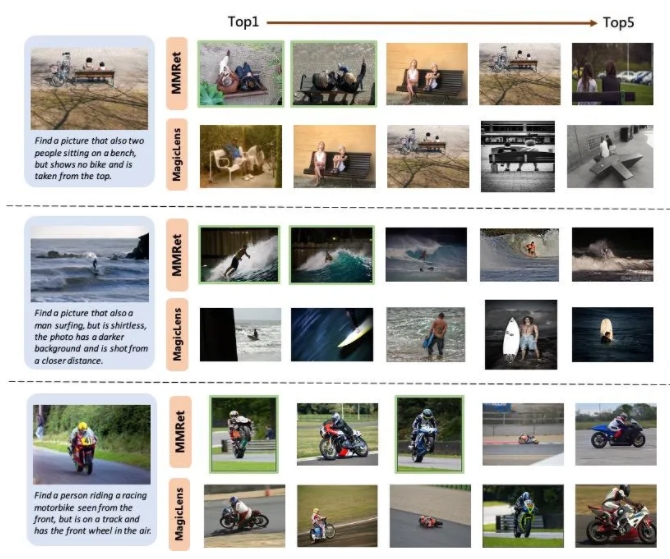

In the combined image retrieval task, BGE-VL refreshed the existing benchmark on the CIRCO evaluation set, significantly surpassing the comparison baselines such as Google's MagicLens series and Nvidia's MM-Embed. BGE-VL-MLLM improves 8.1 percentage points compared to the previous SOTA model, while the BGE-VL-Base model surpasses the multimodal retrievers of other large-modal bases with less than 1/50 of the parameters.

In addition, the study also shows that the MegaPairs dataset has good scalability and efficiency. As data size increases, the BGE-VL model shows a consistent performance growth trend. Compared with Google MagicLens, the SOTA model trained on 37M closed source data, MegaPairs only requires 1/70 of the data scale (0.5M) to achieve significant performance advantages.

Project homepage:

https://github.com/VectorSpaceLab/MegaPairs

Model address:

https://huggingface.co/BAAI/BGE-VL-MLLM-S1