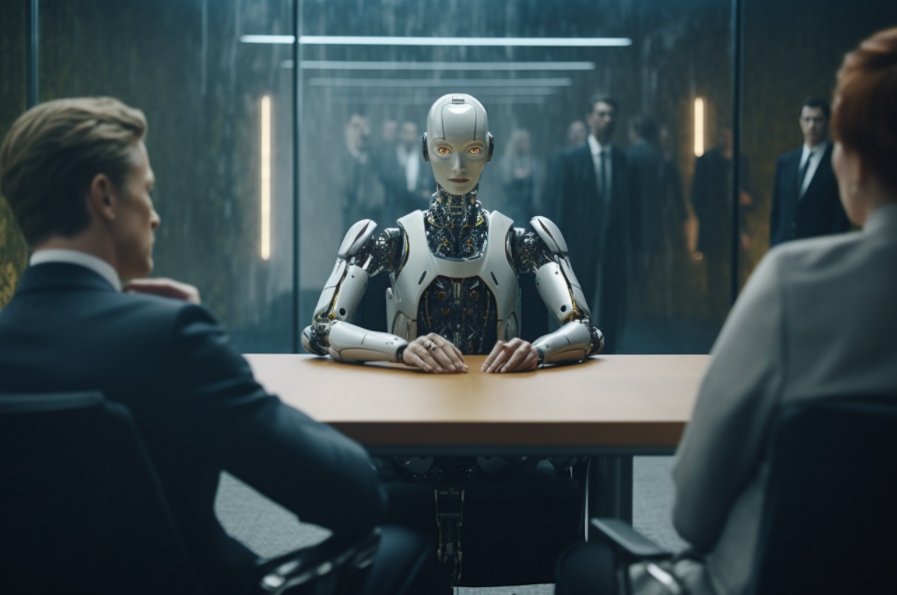

Recently, LinkedIn was sued for allegedly providing private InMail messages of paying users to third parties for artificial intelligence (AI) model training.

The lawsuit, filed in California federal court by Alessandro De La Torre, claims that LinkedIn used users' private message data for AI training under a policy change announced last year. That policy change allows LinkedIn to use members' posts and personal data to train its AI models and to provide the data to third parties.

LinkedIn states in these policies that the data of users living in Canada, the European Union, the European Economic Area, the United Kingdom, Switzerland, Hong Kong, or mainland China will not be used to train content-generating AI models. For US users, however, LinkedIn offers a setting called "Data for Generative AI Improvement" that is enabled by default. This setting allows LinkedIn and its affiliates to use personal data and content that users create on the platform.

The complaint states that LinkedIn promised in its contract with Premium users not to disclose their confidential information to third parties. However, the lawsuit offers no concrete evidence that InMail messages were actually among the data shared. Instead, the complaint hinges on LinkedIn's policy changes and on the point that the company has never publicly denied the possibility of disclosing Premium users' InMail content for third-party AI training.

LinkedIn has denied the accusations. A company spokesperson said: "These are baseless and false claims." The case is ongoing, and the question of trust between LinkedIn and its users has drawn widespread concern.