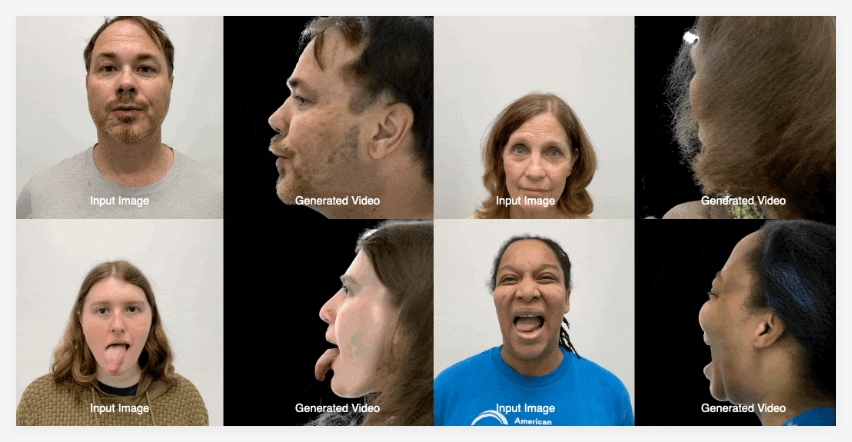

Recently, the research team of Meta Reality Labs jointly released an innovative generative model called "Pippo", which can generate a dense turnover video with a resolution of up to 1K from a random photo. This breakthrough technology marks another important progress in the fields of computer vision and image generation.

The core of the Pippo model lies in the design of its multi-view diffusion converter. Unlike traditional generative models, Pippo does not require any additional input, such as the fitted parametric model or the camera parameters that took the image. Users only need to provide a normal photo, and the system can automatically generate multi-view video effects, presenting users with a more vivid and three-dimensional character image.

For the convenience of developers, Pippo is released as a code-only version this time, without pre-training weights. The research team provided the necessary models, configuration files, inference codes, and sample training codes for the Ava-256 dataset. Developers can quickly get started with training and application through simple command cloning and setting up code bases.

Future plans for the Pippo project include organizing and cleaning up the code, as well as launching inference scripts for pre-trained models. These improvements will further enhance the user experience and promote the widespread use of the technology in practical applications.

Project: https://github.com/facebookresearch/pippo