As enterprises increasingly adopt large language models (LLMs), improving the factual accuracy of these models and reducing hallucinations has become a pressing challenge. In a new paper, researchers at Meta AI propose "scalable memory layers," which may offer a solution to this problem.

The core idea of scalable memory layers is to add more parameters to an LLM, increasing its capacity to learn, without increasing the compute required at inference time. The architecture suits applications that need to store large amounts of factual knowledge while keeping inference fast.

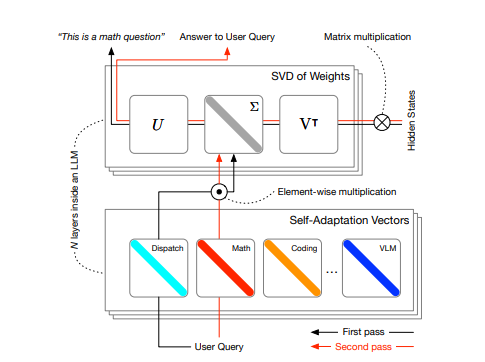

Traditional language models use "dense layers" to encode large amounts of information. In a dense layer, all parameters are used at full capacity and are activated at the same time during inference. This lets dense layers learn complex functions, but it costs additional compute and energy. For simple factual knowledge, a simpler layer with an associative memory architecture would be more efficient and more interpretable. This is the role of the memory layer, which encodes and retrieves knowledge through sparse activation and a key-value lookup mechanism. Although memory layers have a larger memory footprint than dense layers, they activate only a small fraction of their parameters at a time, which makes them computationally efficient.
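The key-value lookup described above can be sketched in a few lines of Python. This is a minimal toy under stated assumptions, not Meta's implementation: the `MemoryLayer` class, dot-product scoring, and softmax weighting over the top-k matches are illustrative choices. For clarity it scores every key by brute force; large memory layers typically avoid that cost (for example, with product-key lookup), which this sketch does not attempt.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class MemoryLayer:
    """Toy key-value memory (hypothetical): score keys, keep top-k, mix values."""

    def __init__(self, keys: List[Vector], values: List[Vector], k: int):
        assert len(keys) == len(values) and 0 < k <= len(keys)
        self.keys = keys
        self.values = values
        self.k = k

    def lookup(self, query: Vector) -> List[int]:
        """Indices of the k keys with the highest dot-product score.

        Brute force over every key: a simplification for illustration.
        """
        scores = [dot(query, key) for key in self.keys]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        return ranked[: self.k]

    def __call__(self, query: Vector) -> Vector:
        top = self.lookup(query)
        weights = softmax([dot(query, self.keys[i]) for i in top])
        out = [0.0] * len(self.values[0])
        # Only the k selected value rows are read; all other rows stay inactive.
        for w, i in zip(weights, top):
            for j, v in enumerate(self.values[i]):
                out[j] += w * v
        return out


keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0], [0.0, -10.0]]
mem = MemoryLayer(keys, values, k=1)
print(mem([0.9, 0.1]))  # [10.0, 0.0]
```

Only the k selected value rows contribute to the output, so the number of value parameters touched per query grows with k rather than with the size of the memory.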

Memory layers have existed for many years but are rarely used in modern deep learning architectures, primarily because they are not optimized for current hardware accelerators. Today's cutting-edge LLMs typically use some form of "mixture of experts" (MoE) architecture, which shares similarities with memory layers. An MoE model consists of many smaller, specialized expert components, and a routing mechanism activates specific experts at inference time.
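For comparison, top-k routing in a mixture-of-experts layer can be sketched as follows. The linear router, the toy experts, and the name `moe_forward` are hypothetical; production MoE layers differ in how they compute gates, balance load across experts, and batch tokens.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], top_k: int) -> Vector:
    """Send x to the top_k experts by router score and mix their outputs.

    Assumes every expert maps a vector to a vector of the same length.
    """
    # Hypothetical linear router: one score per expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = experts[i](x)  # only the chosen experts are evaluated
        for j, yj in enumerate(y):
            out[j] += g * yj
    return out


experts: List[Expert] = [
    lambda v: [2.0 * a for a in v],  # expert 0: doubles its input
    lambda v: [-a for a in v],       # expert 1: negates its input
    lambda v: [0.0 for _ in v],      # expert 2: outputs zeros
]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([3.0, 1.0], router, experts, top_k=1))  # [6.0, 2.0]
```

Only the selected experts are evaluated, which is the source of an MoE layer's sparsity: total parameters grow with the number of experts, while per-token compute grows with `top_k`.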

Memory layers are computationally light but memory-intensive. To make them practical at scale, the Meta researchers proposed several improvements. They parallelized memory layers across multiple GPUs so that millions of key-value pairs can be stored without slowing the model down. They also developed custom CUDA kernels for the high-memory-bandwidth operations and implemented a parameter-sharing mechanism that lets multiple memory layers share a single set of memory parameters.
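One common way to spread a top-k lookup across devices is for each device to score only its own slice of the keys, return its local top-k, and then merge the candidates. The sketch below simulates the devices as Python lists; the round-robin sharding and the helper names (`make_shards`, `local_top_k`, `sharded_top_k`) are assumptions, and this is not a description of how Meta actually partitions its memory or of its CUDA kernels.

```python
import heapq
import random
from typing import List, Tuple

Vector = List[float]
Shard = List[Tuple[int, Vector]]  # (global key index, key vector)


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def make_shards(keys: List[Vector], num_devices: int) -> List[Shard]:
    """Assign keys round-robin to hypothetical devices."""
    shards: List[Shard] = [[] for _ in range(num_devices)]
    for i, key in enumerate(keys):
        shards[i % num_devices].append((i, key))
    return shards


def local_top_k(shard: Shard, query: Vector, k: int) -> List[Tuple[float, int]]:
    """Work one device would do on its own slice of the memory."""
    return heapq.nlargest(k, ((dot(query, key), i) for i, key in shard))


def sharded_top_k(shards: List[Shard], query: Vector, k: int) -> List[int]:
    """Merge per-device candidates into the global top-k key indices."""
    candidates = [c for shard in shards for c in local_top_k(shard, query, k)]
    return [i for _, i in heapq.nlargest(k, candidates)]


random.seed(0)
keys = [[random.gauss(0, 1) for _ in range(8)] for _ in range(1000)]
query = [random.gauss(0, 1) for _ in range(8)]
shards = make_shards(keys, num_devices=4)
print(sharded_top_k(shards, query, k=5))
```

The merge is exact because any key in the global top-k must also be in the top-k of its own shard, so each device only needs to send k candidates rather than its whole slice.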

The researchers tested memory-augmented models by modifying Llama models, replacing one or more dense layers with shared memory layers. They found that the memory models performed well across multiple tasks, especially those requiring factual knowledge: they significantly outperformed dense baselines and were even competitive with models that used 2 to 4 times as much compute.
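The layer substitution and parameter sharing can be illustrated with a small bookkeeping sketch. The classes, the layer indices that become memory layers, and all sizes are hypothetical, chosen only to show that several memory layers can point to one shared pool whose parameters are counted once.

```python
from dataclasses import dataclass
from typing import List, Set, Union


@dataclass
class DenseFFN:
    """Stand-in for a dense feed-forward block (hypothetical size)."""
    num_params: int


@dataclass
class SharedMemory:
    """One pool of key and value parameters reused by every memory layer."""
    num_keys: int
    dim: int

    @property
    def num_params(self) -> int:
        return 2 * self.num_keys * self.dim  # keys + values


@dataclass
class MemoryBlock:
    """Holds a reference to the shared pool rather than its own copy."""
    memory: SharedMemory


Layer = Union[DenseFFN, MemoryBlock]


def build_stack(num_layers: int, ffn_params: int, memory_slots: Set[int],
                memory: SharedMemory) -> List[Layer]:
    """Replace the dense blocks at memory_slots with memory layers."""
    return [MemoryBlock(memory) if i in memory_slots else DenseFFN(ffn_params)
            for i in range(num_layers)]


def count_params(layers: List[Layer]) -> int:
    """Count unique parameters; a shared pool is counted only once."""
    total = 0
    seen = set()
    for layer in layers:
        if isinstance(layer, DenseFFN):
            total += layer.num_params
        elif id(layer.memory) not in seen:
            seen.add(id(layer.memory))
            total += layer.memory.num_params
    return total


pool = SharedMemory(num_keys=1_000, dim=64)
stack = build_stack(num_layers=8, ffn_params=10_000, memory_slots={2, 5}, memory=pool)
print(count_params(stack))  # 188000
```

Here 6 dense blocks contribute 60,000 parameters and the shared pool contributes 2 × 1,000 × 64 = 128,000 once, for 188,000 in total, even though two layers use the pool.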

Paper: https://arxiv.org/abs/2412.09764
