Meta recently released a notable research result: a new memory-layer technique that substantially improves the factual accuracy of large language models (LLMs) and scales the number of memory parameters to an unprecedented size. The approach challenges the conventional way of scaling neural networks and points to new directions for future AI architecture design.

At the core of this research is a trainable key-value lookup mechanism that adds extra parameters to a model without increasing its computational cost (FLOPs). The idea is to complement compute-heavy dense feed-forward layers with sparsely activated memory layers, which provide dedicated capacity for storing and retrieving information.
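A back-of-the-envelope calculation shows why parameters and compute can be decoupled. The cost model below is a simplified, hypothetical accounting (only the value table counts as parameters; only key scoring and value aggregation count as compute), and the sizes are illustrative, not figures from the paper. It also includes the product-key scoring that the article describes further down.

```python
import math


def memory_layer_cost(num_keys: int, dim: int, top_k: int) -> dict:
    """Rough per-token multiply-add counts for one memory layer.

    Hypothetical accounting: only the value table is counted as parameters,
    and only key scoring plus value aggregation count as compute
    (query projection, softmax and gating are ignored).
    """
    n_sub = math.isqrt(num_keys)
    assert n_sub * n_sub == num_keys, "product keys assume N is a perfect square"
    return {
        # one dim-sized value row per key
        "params": num_keys * dim,
        # naive lookup: dot product of the query with all N keys
        "naive_scoring": num_keys * dim,
        # product keys: each query half is scored against sqrt(N) half-size sub-keys
        "product_scoring": 2 * n_sub * (dim // 2),
        # weighted sum of the top_k selected value rows
        "aggregation": top_k * dim,
    }


for n in (1024**2, 4 * 1024**2):
    cost = memory_layer_cost(n, dim=1024, top_k=32)
    print(f"N={n:>9,}  params={cost['params']:>13,}  "
          f"product_scoring={cost['product_scoring']:>9,}  "
          f"aggregation={cost['aggregation']:,}")
```

Under these assumptions, quadrupling the number of keys quadruples the value parameters, while the aggregation cost stays fixed at k·d and product-key scoring only doubles (it grows with √N). A naive lookup that scores every key would instead grow linearly with N.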

Memory layers are better suited to storing information than conventional dense layers. A language model has to learn many simple associations, such as people and their birthdays or countries and their capitals. A memory layer can capture such associations with a simple key-value lookup, which is more efficient than encoding them in a feed-forward network.
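The key-value lookup described above can be sketched in a few lines. This is a minimal illustration of a generic sparse memory read (score the keys, keep the top-k, softmax-weight their values), not Meta's implementation; the function name and the toy "fact table" are hypothetical.

```python
import math


def memory_lookup(query, keys, values, top_k=2):
    """Read from a key-value memory: score all keys, keep the top_k,
    and return the softmax-weighted sum of their values.

    query: list of d floats; keys: N lists of d floats;
    values: N lists of d_v floats. Returns (output, selected indices).
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Indices of the top_k highest-scoring keys (ties keep original order)
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores only; unselected keys get weight 0
    m = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    out = [0.0] * len(values[0])
    for w, i in zip(weights, top):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, top


# Toy "fact table": each key stands for one entity, each value holds its attribute
keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[10.0], [20.0], [30.0]]
print(memory_lookup([0.0, 5.0, 0.0], keys, values, top_k=1))  # → ([20.0], [1])
```

Only the selected value rows contribute to the output, so the value table can grow without growing the aggregation cost. Scoring every key, however, still scales with the memory size, which is the bottleneck that product keys address.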

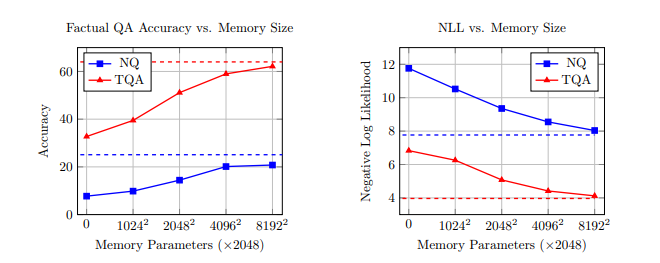

The main contribution of the research is scaling memory layers to an unprecedented size: up to 128 billion parameters. On downstream tasks, language models equipped with the improved memory layer outperform dense models that use twice the compute, and also outperform mixture-of-experts (MoE) models when compute and parameter counts are matched. The gains are especially pronounced on factual tasks.

The Meta researchers achieve this by replacing one or more feed-forward network (FFN) layers in the Transformer with memory layers. This replacement yields consistent gains across base model sizes (from 134 million to 8 billion parameters) and memory capacities (up to 128 billion parameters). In the experiments, memory layers improved the factual accuracy of language models by more than 100%, and also brought significant gains in coding and general knowledge. In many cases, models with memory layers matched the performance of dense models that use 4 times as much compute.

The researchers also made several improvements to overcome the challenges of scaling memory layers up:

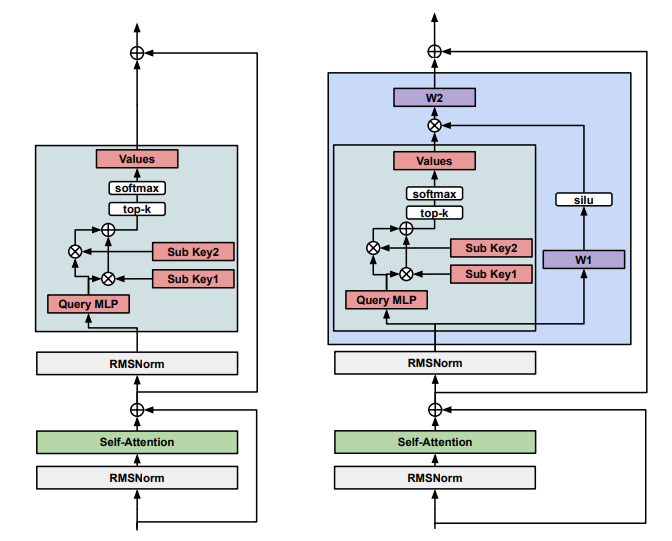

Product-key lookup: To remove the bottleneck of query-key retrieval in very large memory layers, the study uses trainable product-quantized keys, which avoid comparing the query against every key.
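The product-key trick can be sketched as follows. This illustrates the general technique only: the sub-keys here are fixed random vectors rather than trained ones, and all function names are hypothetical. Each of the n × n implicit keys is the concatenation of one sub-key from each of two small sets, so its score splits into two half-dot-products, and the exact top-k can be found from the top-k of each half.

```python
import random


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _top_k(scores, k):
    """Indices of the k largest scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def product_key_topk(query, sub_keys_1, sub_keys_2, k):
    """Top-k over n*n implicit keys built from two sets of n half-size sub-keys.

    Implicit key (i, j) is sub_keys_1[i] concatenated with sub_keys_2[j], so its
    score is dot(q1, sub_keys_1[i]) + dot(q2, sub_keys_2[j]). Only 2n half-size
    dot products and k*k candidate sums are computed, not n*n full dot products.
    """
    half = len(query) // 2
    q1, q2 = query[:half], query[half:]
    s1 = [_dot(q1, c) for c in sub_keys_1]
    s2 = [_dot(q2, c) for c in sub_keys_2]
    top1, top2 = _top_k(s1, k), _top_k(s2, k)
    n2 = len(sub_keys_2)
    # The global top-k is guaranteed to lie in top-k(s1) x top-k(s2)
    candidates = [(s1[i] + s2[j], i * n2 + j) for i in top1 for j in top2]
    candidates.sort(key=lambda t: t[0], reverse=True)
    return candidates[:k]  # (score, flat key index) pairs


def brute_force_topk(query, sub_keys_1, sub_keys_2, k):
    """Reference: score every concatenated key explicitly."""
    n2 = len(sub_keys_2)
    scored = [(_dot(query, a + b), i * n2 + j)
              for i, a in enumerate(sub_keys_1) for j, b in enumerate(sub_keys_2)]
    scored.sort(key=lambda t: t[0], reverse=True)
    return scored[:k]


random.seed(0)
d, n, k = 8, 16, 4  # 16 * 16 = 256 implicit keys
sub1 = [[random.gauss(0, 1) for _ in range(d // 2)] for _ in range(n)]
sub2 = [[random.gauss(0, 1) for _ in range(d // 2)] for _ in range(n)]
q = [random.gauss(0, 1) for _ in range(d)]
fast = [idx for _, idx in product_key_topk(q, sub1, sub2, k)]
slow = [idx for _, idx in brute_force_topk(q, sub1, sub2, k)]
print(fast, slow)
```

The brute-force function is there only to check that the factorized search returns the same keys; with n sub-keys per half, the memory holds n² keys while scoring costs grow only with n.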

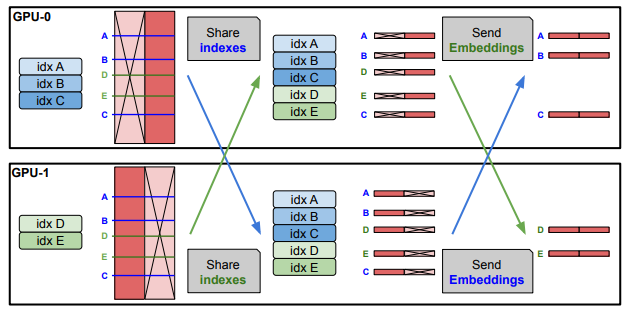

Parallel memory: To run memory layers across multiple GPUs, the researchers distribute the embedding lookup and aggregation operations over those GPUs.
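The article does not say exactly how the work is split. The sketch below assumes one plausible scheme: the value table is sharded along the embedding dimension, every worker receives the same selected indices and weights, computes the weighted sum over its own column slice, and the partial results are concatenated. Workers are simulated in a single process; the function names are hypothetical, and a real system would use a collective operation such as an all-gather between GPUs.

```python
def shard_columns(values, num_workers):
    """Split an N x d value table into num_workers column slices (one per GPU)."""
    d = len(values[0])
    assert d % num_workers == 0, "embedding dim must divide evenly"
    width = d // num_workers
    return [[row[r * width:(r + 1) * width] for row in values]
            for r in range(num_workers)]


def local_lookup(shard, indices, weights):
    """Work done on one worker: weighted sum of the selected rows of its slice."""
    out = [0.0] * len(shard[0])
    for idx, w in zip(indices, weights):
        for j, v in enumerate(shard[idx]):
            out[j] += w * v
    return out


def parallel_lookup(shards, indices, weights):
    """Every worker sees the same (indices, weights); concatenating the
    partial outputs stands in for an all-gather across GPUs."""
    partials = [local_lookup(s, indices, weights) for s in shards]
    return [x for part in partials for x in part]


values = [[float(4 * r + c) for c in range(4)] for r in range(5)]  # 5 keys, d = 4
indices, weights = [0, 2], [0.25, 0.75]
single = parallel_lookup(shard_columns(values, 1), indices, weights)
split = parallel_lookup(shard_columns(values, 2), indices, weights)
print(single == split, split)  # → True [6.0, 7.0, 8.0, 9.0]
```

Sharding by columns means no single GPU has to hold the whole table, while each worker still performs the same sparse gather for its slice.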

Shared memory: To maximize parameter sharing, all memory layers draw on a single shared pool of memory parameters.
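Sharing one pool means that adding memory layers does not duplicate the large value table. The sketch below makes this concrete with hypothetical classes; it assumes, for illustration only, that each layer keeps its own small query projection while the value table is shared by reference.

```python
class MemoryPool:
    """A single table of memory values shared by all memory layers."""

    def __init__(self, num_keys, dim):
        self.values = [[0.0] * dim for _ in range(num_keys)]

    def num_params(self):
        return sum(len(row) for row in self.values)


class MemoryLayer:
    """A memory layer that reads from a shared pool (hypothetical structure).

    Assumption: each layer has its own small query projection, while the
    large value table is shared by reference.
    """

    def __init__(self, pool, dim):
        self.pool = pool  # shared reference, not a copy
        self.query_proj = [[0.0] * dim for _ in range(dim)]


def count_params(layers):
    """Count parameters, counting each distinct pool only once."""
    seen, total = set(), 0
    for layer in layers:
        total += sum(len(row) for row in layer.query_proj)
        if id(layer.pool) not in seen:
            seen.add(id(layer.pool))
            total += layer.pool.num_params()
    return total


pool = MemoryPool(num_keys=100, dim=8)                  # 800 shared parameters
layers = [MemoryLayer(pool, dim=8) for _ in range(3)]   # 3 x 64 private parameters
print(count_params(layers[:1]), count_params(layers))   # → 864 992
```

Going from one memory layer to three adds only the small per-layer projections; the shared table is counted once.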

Performance and stability optimizations: The researchers implemented the EmbeddingBag operation with a custom CUDA kernel, significantly improving memory-bandwidth utilization. They also introduced an input-dependent gating mechanism with a SiLU nonlinearity to improve training performance and stability.
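Both pieces can be sketched in plain Python. A weighted-sum EmbeddingBag (for example PyTorch's `torch.nn.EmbeddingBag` with `mode='sum'` and `per_sample_weights`) gathers selected table rows and sums them with per-row weights in one call; the sketch shows only that math, not the CUDA kernel. The gate form out = W2 (y ⊙ SiLU(W1 x)), where y is the memory output and x the layer input, is an assumption about how an input-dependent SiLU gate might be applied; the matrices and function names are hypothetical.

```python
import math


def silu(z):
    """SiLU (swish): z * sigmoid(z), written to avoid overflow for large |z|."""
    if z >= 0:
        return z / (1.0 + math.exp(-z))
    e = math.exp(z)
    return z * e / (1.0 + e)


def embedding_bag_sum(table, indices, weights):
    """Weighted-sum EmbeddingBag semantics: sum_i weights[i] * table[indices[i]]."""
    out = [0.0] * len(table[0])
    for idx, w in zip(indices, weights):
        for j, v in enumerate(table[idx]):
            out[j] += w * v
    return out


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gated_memory_output(x, y, w1, w2):
    """Assumed gate: out = W2 (y * SiLU(W1 x)), elementwise product in the middle."""
    gate = [silu(g) for g in matvec(w1, x)]
    return matvec(w2, [yi * gi for yi, gi in zip(y, gate)])


table = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
y = embedding_bag_sum(table, indices=[0, 2], weights=[0.5, 0.5])  # → [3.0, 4.0]
x = [1.0, -1.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(y, gated_memory_output(x, y, identity, identity))
```

Because the gate depends on the input x, the layer can scale down the memory contribution for tokens where the retrieved values are unhelpful.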

The experimental results also revealed the following key findings:

Memory size matters: as the memory layer grows, performance on factual question answering keeps improving.

Multiple memory layers beat a single one: several memory layers that share parameters improve performance, but too many of them degrade it. In the reported experiments, three memory layers worked best.

Memory layers learn facts faster: early in training, models with memory layers improve more quickly, suggesting that the memory layer speeds up factual learning.

Memory layers and dense layers are complementary: Experiments show that both sparse memory layers and dense feed-forward layers are essential.

To verify the effectiveness of the memory layer technology, the researchers evaluated it on multiple benchmarks, including:

Factual question answering (NaturalQuestions, TriviaQA)

Multi-hop question answering (HotpotQA)

Scientific and general knowledge (MMLU, HellaSwag, OBQA, PIQA)

Coding (HumanEval, MBPP)

Models equipped with memory layers outperformed the baselines on these benchmarks, with the largest gains on factual question answering.

Meta's work offers a new way to scale AI models and a promising route to better factual accuracy and overall performance. The researchers argue that memory layers scale well and could be widely adopted in future AI applications. They acknowledge that memory layers still face challenges with hardware acceleration, but expect that further research and optimization can bring their performance to the level of conventional feed-forward networks, or beyond.

The Meta team also hopes to develop new learning methods that further improve memory layers, reduce forgetting and hallucination, and enable continual learning.

This research brings fresh momentum to the field and raises high expectations for how AI architectures will develop.

Paper: https://arxiv.org/pdf/2412.09764
