A while ago, I wrote an article on setting up the Open-Sora 1.0 environment and running an inference test ("Open-Sora1.0 environment construction & reasoning test_Build your own sora service"; take a look if you are interested). When Open-Sora 1.1 was released, I glanced at the news and then forgot about it. Recently I happened to visit the project's open-source site and found that version 1.2 had been released on June 17, 2024, so let's see whether it brings any real progress. It now supports 720p high-definition video, and both generation quality and generation time have improved substantially. OK, let's get started.

1. Introduction to upgrade

Building on the previous version, Open-Sora 1.2 adds a video compression network (Video Compression Network), a better diffusion algorithm, and more controllability, and trains a 1.1B-parameter diffusion model on more data. Stable Diffusion 3, the latest diffusion model, significantly improves the quality of image and video generation by using rectified flow in place of DDPM. Although SD3's rectified flow training code has not been published yet, Luchen's Open-Sora team has provided a complete training solution based on the SD3 research results, including:

(1) Simple and easy-to-use rectified flow training

(2) Logit-normal timestep sampling for training acceleration

(3) Time step sampling based on resolution and video length
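To make items (1) and (2) more concrete, here is a minimal sketch in plain Python. It is not the Open-Sora training code. It assumes the convention used in the SD3 paper, where t = 0 is clean data and t = 1 is pure noise, and the function names `sample_logit_normal_t` and `rectified_flow_pair` are hypothetical names chosen for illustration.

```python
import math
import random


def sample_logit_normal_t(rng, mean=0.0, std=1.0):
    """Draw a timestep in (0, 1): sample u ~ N(mean, std), then apply a sigmoid."""
    u = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-u))


def rectified_flow_pair(x0, noise, t):
    """Return the interpolated sample x_t and the velocity target for one example.

    x_t lies on the straight line between the data (t = 0) and the noise (t = 1).
    The velocity along that line is constant: noise - x0.
    """
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]
    velocity = [b - a for a, b in zip(x0, noise)]
    return x_t, velocity


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.5, -1.0, 2.0]                      # toy values standing in for a video latent
    noise = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise of the same shape
    t = sample_logit_normal_t(rng)
    x_t, target = rectified_flow_pair(x0, noise, t)
    print(f"t={t:.3f} x_t={x_t} target={target}")
```

During training, the model would see `x_t` and `t` and be asked to predict `target`. Logit-normal sampling draws mid-range timesteps more often than values near 0 or 1, which is the motivation given for using it instead of uniform sampling.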

2. Environment installation

(1) Model download

https://huggingface.co/hpcai-tech/OpenSora-STDiT-v3/tree/main

https://huggingface.co/hpcai-tech/OpenSora-VAE-v1.2/tree/main

https://huggingface.co/PixArt-alpha/pixart_sigma_sdxlvae_T5_diffusers/tree/main/vae
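If you would rather script the download than click through the pages, the following sketch uses `snapshot_download` from the `huggingface_hub` package. The repository IDs come from the three links above. The local folder `./pretrained_models` and the helper names are assumptions for illustration, so adjust them to wherever your configs expect the weights.

```python
from pathlib import Path

# (repo_id, allow_patterns) pairs taken from the three links above.
# The PixArt link points at the vae/ subfolder, so only that folder is fetched.
CHECKPOINTS = [
    ("hpcai-tech/OpenSora-STDiT-v3", None),
    ("hpcai-tech/OpenSora-VAE-v1.2", None),
    ("PixArt-alpha/pixart_sigma_sdxlvae_T5_diffusers", ["vae/*"]),
]


def target_dir(root, repo_id):
    """Map a repo id to a local folder, e.g. root/OpenSora-STDiT-v3."""
    return Path(root) / repo_id.split("/")[-1]


def download_all(root="./pretrained_models"):
    """Download every checkpoint into its own folder under root (hypothetical path)."""
    # Imported lazily so the helpers above work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    for repo_id, patterns in CHECKPOINTS:
        snapshot_download(
            repo_id=repo_id,
            local_dir=str(target_dir(root, repo_id)),
            allow_patterns=patterns,
        )
```

Calling `download_all()` starts the downloads. It needs network access to huggingface.co and enough disk space for all three checkpoints.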

(2) Code download

git clone https://github.com/hpcaitech/Open-Sora.git

3. Inference test

docker run -it --gpus=all --rm -v /datas/work/zzq/:/workspace open-sora:v1.0 bash

pip install -v .

cd PixArt-sigma

pip install -r requirements.txt

Note: open-sora:v1.0 is the name of the Docker image built in the previous Open-Sora blog post.

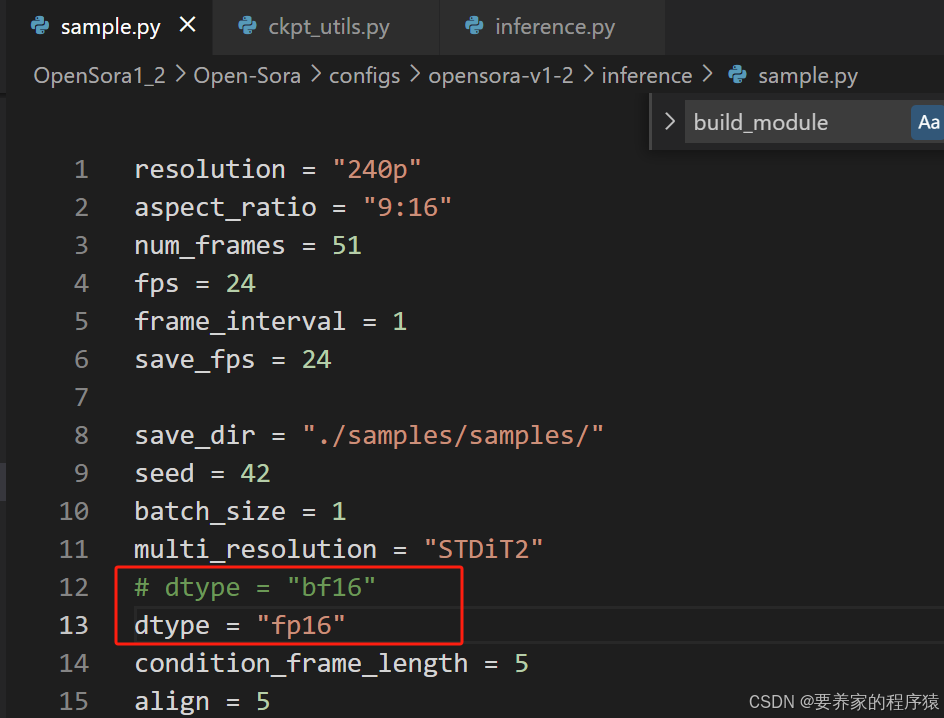

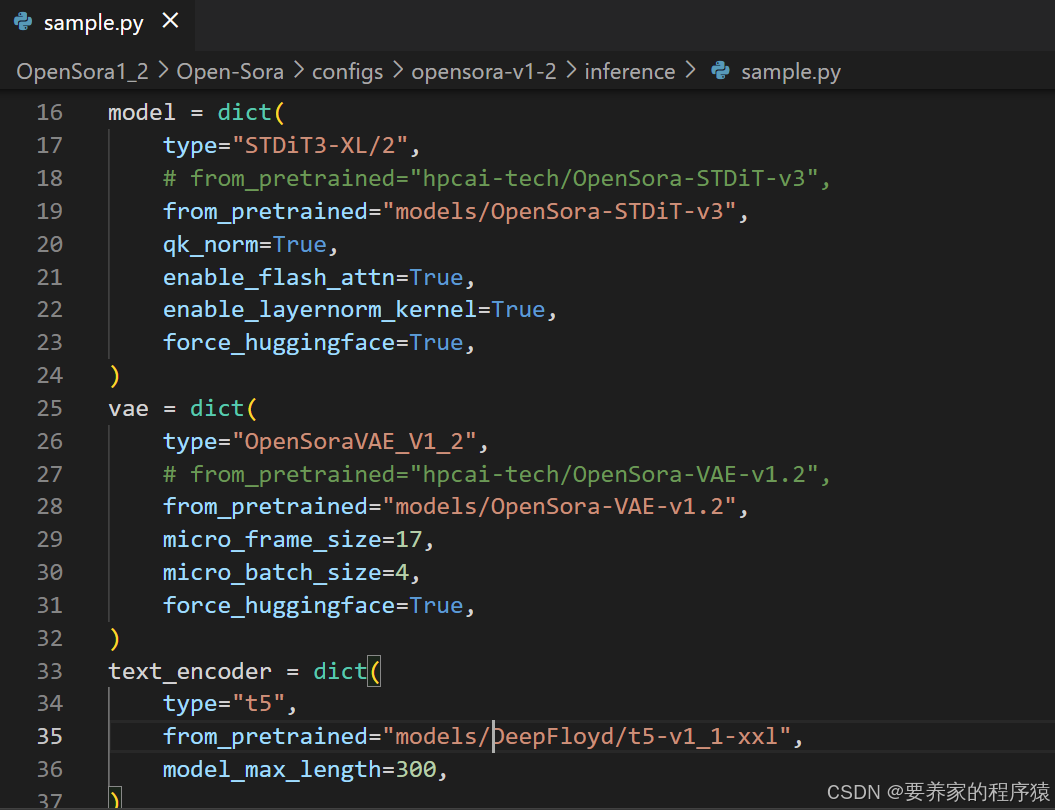

Modify the code

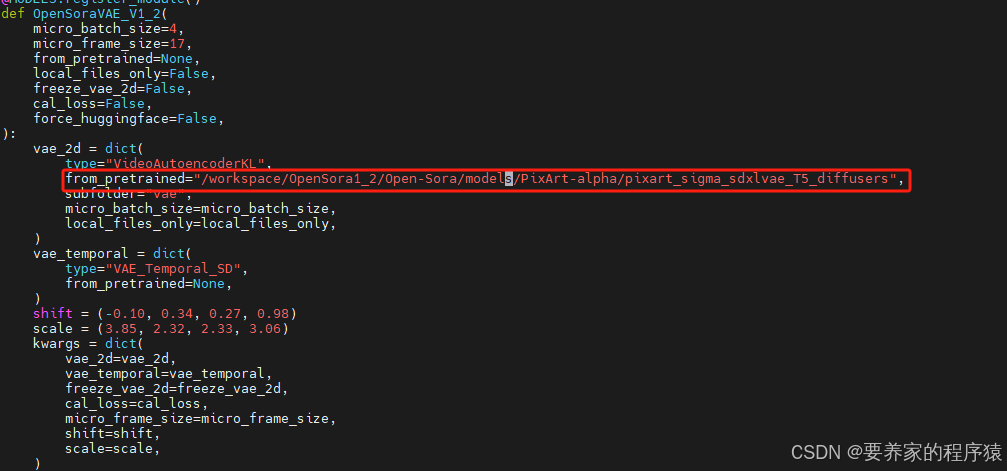

Modify the code in vae.py:

vi /opt/conda/lib/python3.10/site-packages/opensora/models/vae/vae.py

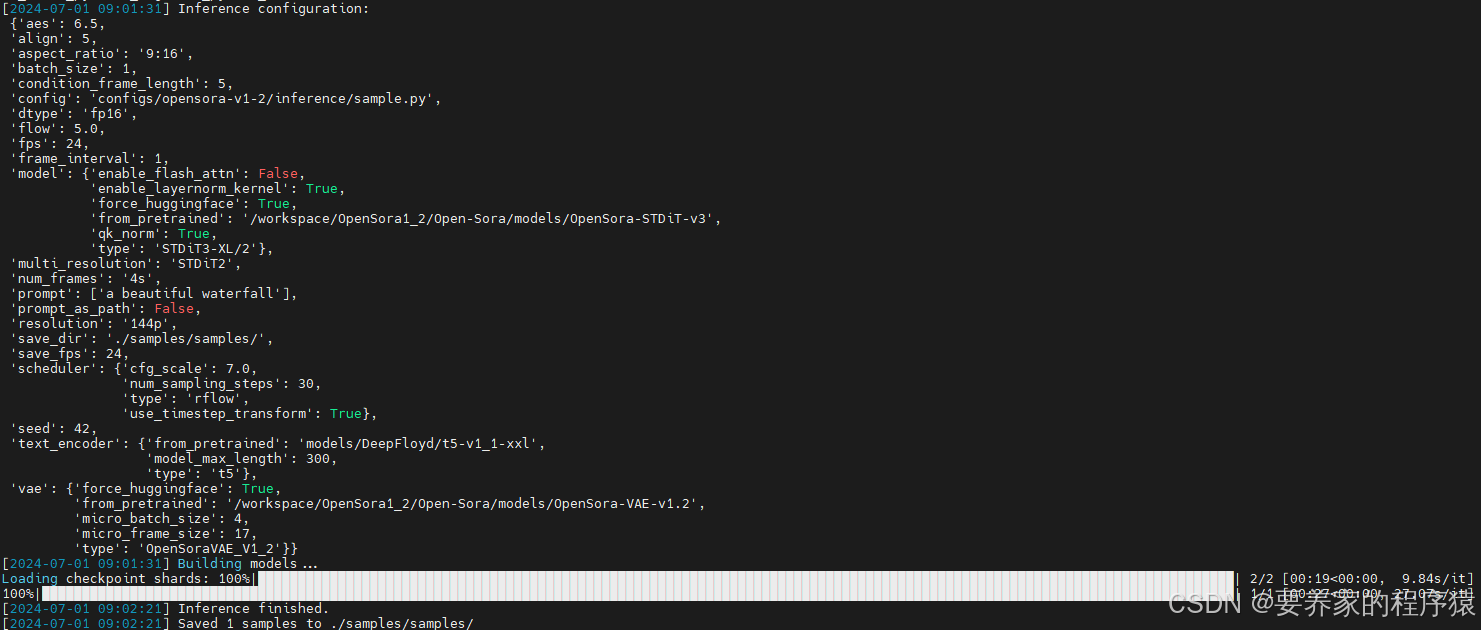

python scripts/inference.py configs/opensora-v1-2/inference/sample.py --num-frames 4s --resolution 720p --aspect-ratio 9:16 --num-sampling-steps 30 --flow 5 --aes 6.5 --prompt "a beautiful waterfall"

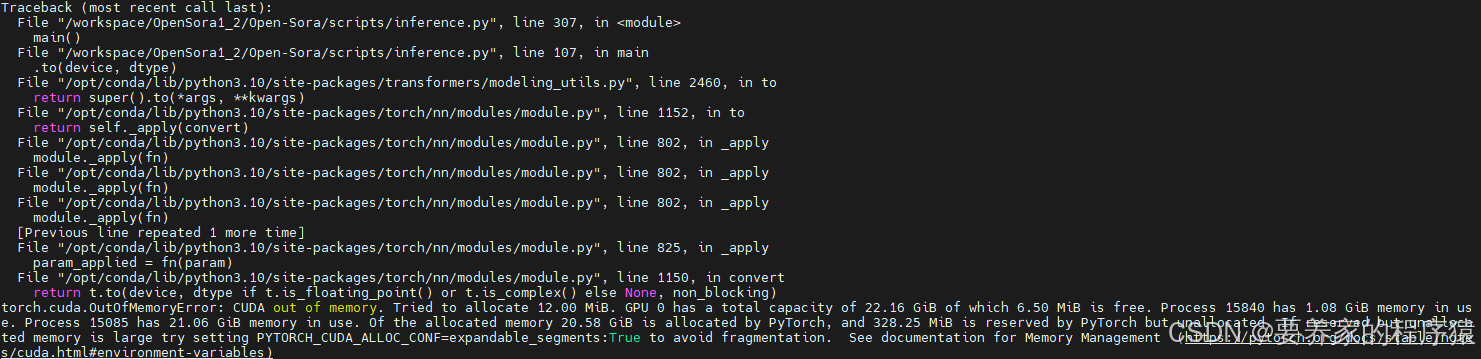

The GPU ran out of video memory, so switch to a smaller resolution:

python scripts/inference.py configs/opensora-v1-2/inference/sample.py --num-frames 4s --resolution 144p --aspect-ratio 9:16 --num-sampling-steps 30 --flow 5 --aes 6.5 --prompt "a beautiful waterfall"
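Instead of retrying by hand, the step down in resolution can be automated. The sketch below is a hypothetical helper, not part of Open-Sora. It rebuilds the command above for each resolution and stops at the first run that exits cleanly. Only 720p and 144p appear in this article, so the intermediate resolutions in the list are an assumption about what the config accepts.

```python
import subprocess

# Resolutions tried from largest to smallest. 720p and 144p appear in the text;
# the intermediate values are assumptions about what the config accepts.
RESOLUTIONS = ["720p", "480p", "360p", "240p", "144p"]


def build_command(resolution, prompt="a beautiful waterfall"):
    """Assemble the inference command from this article for one resolution."""
    return [
        "python", "scripts/inference.py",
        "configs/opensora-v1-2/inference/sample.py",
        "--num-frames", "4s",
        "--resolution", resolution,
        "--aspect-ratio", "9:16",
        "--num-sampling-steps", "30",
        "--flow", "5",
        "--aes", "6.5",
        "--prompt", prompt,
    ]


def run_with_fallback(resolutions=RESOLUTIONS, runner=subprocess.run):
    """Try each resolution in turn and return the first one that exits with code 0.

    Any non-zero exit code counts as a failure, so this does not distinguish
    an out-of-memory error from other errors.
    """
    for resolution in resolutions:
        result = runner(build_command(resolution))
        if result.returncode == 0:
            return resolution
    return None
```

Run `run_with_fallback()` from the root of the Open-Sora repository inside the container. Because every failure triggers a retry, check the console output to confirm that the earlier attempts really failed for lack of memory.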

The generated result is as follows:

OpenSora 1.2 version video