DeepSeek's latest large language model, DeepSeek-V3-0324, has attracted widespread attention in the AI industry. The roughly 641GB model appeared quietly on Hugging Face, continuing the company's low-key but influential release style.

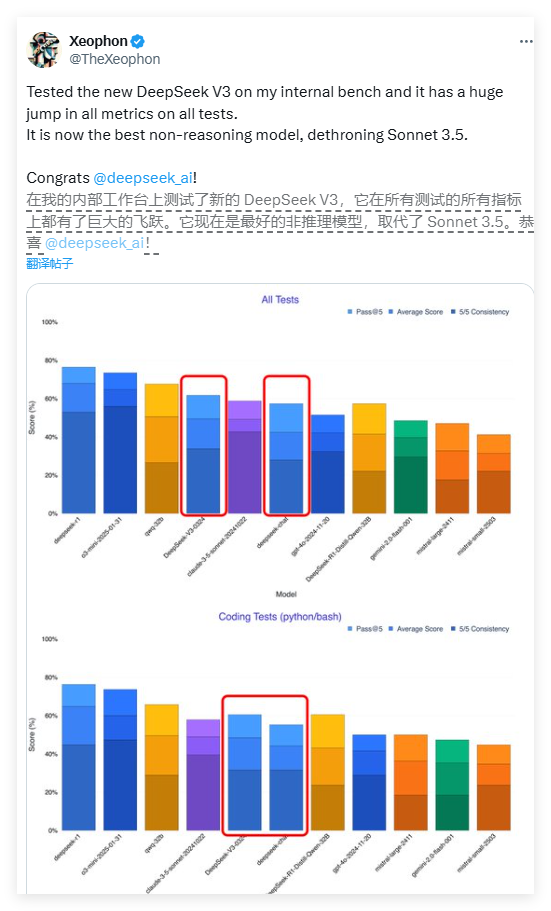

Early testers report that the new model shows significant gains across benchmarks. AI researcher Xeophon said DeepSeek V3 made "a huge leap forward" in internal testing, calling it "the best non-reasoning model, dethroning Sonnet 3.5." If that assessment holds up, DeepSeek's new model would outperform Anthropic's commercial AI system, Claude 3.5 Sonnet.

Unlike Sonnet, which requires a subscription, DeepSeek-V3-0324's model weights are free to download under the MIT license, which permits commercial use. This openness stands in stark contrast to the common practice among Western AI companies of placing models behind paywalls.

DeepSeek-V3-0324 uses a Mixture-of-Experts (MoE) architecture that activates only about 37 billion of its 685 billion parameters for each token, sharply reducing compute requirements while delivering performance comparable to much larger fully activated models. The model also incorporates Multi-Head Latent Attention (MLA) and Multi-Token Prediction (MTP); together, these techniques increase output speed by nearly 80%.
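To make the sparse-activation idea concrete, here is a minimal, generic sketch of top-k expert routing for a single token in plain Python. The sizes (4-dimensional tokens, 8 experts, 2 active per token), the softmax gate, and the random toy weights are illustrative assumptions, not DeepSeek-V3-0324's actual configuration or gating function; the point is only that experts the router does not choose are never evaluated.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def random_matrix(rng, rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def moe_forward(x, router, experts, top_k):
    """Send one token vector x through only its top_k highest-scoring experts."""
    scores = matvec(router, x)  # one routing score per expert
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    gates = softmax([scores[i] for i in chosen])  # normalize over the chosen experts only
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = matvec(experts[i], x)  # unchosen experts are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
dim, num_experts, top_k = 4, 8, 2  # toy sizes, not DeepSeek-V3's configuration
router = random_matrix(rng, num_experts, dim)
experts = [random_matrix(rng, dim, dim) for _ in range(num_experts)]

token = [rng.uniform(-1, 1) for _ in range(dim)]
output, used = moe_forward(token, router, experts, top_k)
print("experts used:", used)
print(f"expert parameters touched: {top_k / num_experts:.0%}")  # 25%
print(f"DeepSeek-V3-0324 ratio quoted above: {37 / 685:.1%}")  # 5.4%
```

Real MoE layers add load balancing and batch many tokens per expert, but the cost saving comes from the same place: per-token compute scales with `top_k`, not with the total number of experts.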

Developer-tool creator Simon Willison pointed out that a 4-bit quantized version cuts the storage footprint to 352GB, making it feasible to run on high-end consumer hardware such as a Mac Studio with the M3 Ultra chip. AI researcher Awni Hannun reported that DeepSeek-V3-0324 runs at more than 20 tokens per second on a 512GB M3 Ultra using mlx-lm, while drawing less than 200 watts.
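The storage and power figures above can be sanity-checked with back-of-the-envelope arithmetic. This sketch assumes raw weight storage is simply parameters × bits ÷ 8, in decimal gigabytes, and ignores quantization metadata; attributing the gap to the reported 352GB to scales and higher-precision tensors is an assumption, not something the reports state.

```python
def weight_storage_gb(num_params, bits_per_param):
    """Raw weight storage in decimal gigabytes (1 GB = 1e9 bytes), ignoring metadata."""
    return num_params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 685e9  # total parameter count quoted in the article

q4_gb = weight_storage_gb(TOTAL_PARAMS, 4)
print(f"raw 4-bit weights: ~{q4_gb:.1f} GB")  # 342.5 GB

# Assumption: the difference to the reported 352GB comes from quantization
# scales and some tensors kept at higher precision.
REPORTED_Q4_GB = 352
UNIFIED_MEMORY_GB = 512
headroom_gb = UNIFIED_MEMORY_GB - REPORTED_Q4_GB
print(f"headroom on a 512GB M3 Ultra: ~{headroom_gb} GB")  # 160 GB

# Upper bound on energy per token from the reported figures:
# under 200 W while generating more than 20 tokens per second.
joules_per_token = 200 / 20
print(f"at most ~{joules_per_token:.0f} J per generated token")  # 10 J
```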

Early users also report a noticeable change in the new model's communication style, which is now more formal and technically oriented. Some users feel the new version sounds "not that human-like" and has lost the previous version's "human-like tone." The shift may reflect a deliberate design choice by DeepSeek's engineers to reposition the model toward professional and technical applications.

DeepSeek's release strategy reflects a fundamental difference in AI business philosophy between Chinese and Western companies. While U.S. leaders such as OpenAI and Anthropic keep their models behind paywalls, Chinese AI companies increasingly favor permissive open-source licenses. This openness is rapidly reshaping China's AI ecosystem, allowing startups, researchers, and developers to build on advanced AI technology without large capital expenditures.

DeepSeek-V3-0324 is also widely seen as the foundation for the company's next-generation reasoning model, DeepSeek-R2. Nvidia CEO Jensen Huang recently noted that DeepSeek's R1 model "consumes 100 times more compute than a non-reasoning AI," which makes DeepSeek's ability to reach this level of performance under resource constraints all the more impressive. If DeepSeek-R2 follows R1's trajectory, it could pose a direct challenge to OpenAI's upcoming GPT-5.

Users can currently download the complete model weights from Hugging Face or access DeepSeek-V3-0324 through an API on platforms such as OpenRouter. DeepSeek's open strategy is reshaping the global AI landscape, heralding a more open and accessible era of AI innovation.
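For API access, OpenRouter exposes an OpenAI-style chat completions endpoint. The sketch below builds such a request with Python's standard library. The model slug `deepseek/deepseek-chat-v3-0324` and the `OPENROUTER_API_KEY` environment variable name are assumptions to verify against OpenRouter's documentation, and the request is only sent when that variable is set.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "deepseek/deepseek-chat-v3-0324"  # assumed slug; check OpenRouter's model list


def build_request(prompt, api_key):
    """Build an OpenAI-style chat completion request for OpenRouter (not sent here)."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


api_key = os.environ.get("OPENROUTER_API_KEY", "placeholder-key")
req = build_request("Summarize mixture-of-experts in one sentence.", api_key)

# Only hit the network when a real key is configured (assumed variable name).
if "OPENROUTER_API_KEY" in os.environ:
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    print(reply["choices"][0]["message"]["content"])
```

Because the endpoint follows the OpenAI chat format, existing OpenAI-compatible clients can usually be pointed at it by changing the base URL and model name.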