Microsoft Research, together with researchers from the University of Washington, Stanford University, the University of Southern California, the University of California, Davis, and the University of California, San Francisco, recently released LLaVA-Rad, a new small multimodal model (SMM) that aims to make clinical radiology report generation more efficient. The release marks a significant advance in medical image processing and opens up more possibilities for the clinical application of radiology.

In biomedicine, research built on large-scale foundation models has shown strong promise. With the rise of multimodal generative AI in particular, text and images can be processed together, which supports tasks such as visual question answering and radiology report generation. Many challenges remain, however. Large models are resource-intensive and difficult to deploy widely in clinical settings. Small multimodal models are more efficient, but they still lag significantly behind large models in performance. In addition, the lack of open-source models and of reliable methods for assessing factual accuracy limits clinical use.

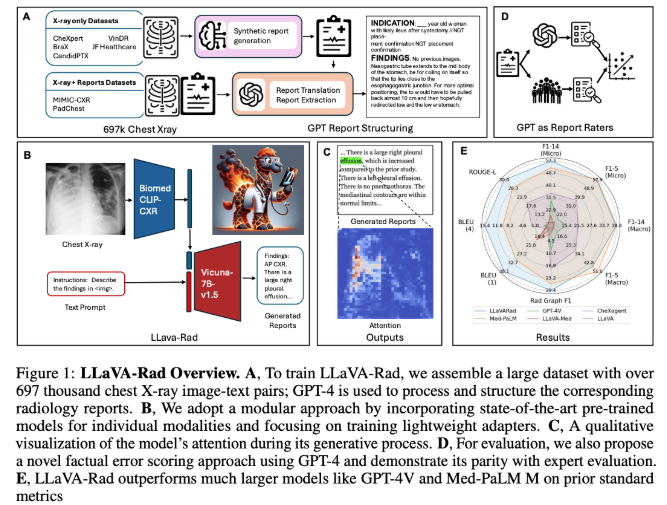

LLaVA-Rad was trained on a dataset of 697,435 radiology image-report pairs from seven different sources, with a focus on chest X-ray (CXR) imaging, the most common type of medical imaging. The model uses a modular training approach with three stages (single-modality pre-training, alignment, and fine-tuning) and an efficient adapter mechanism that embeds non-text modalities into the text embedding space. Although LLaVA-Rad is smaller than some large models, such as Med-PaLM M, it performs very well on key metrics: on ROUGE-L and F1-RadGraph it scores 12.1% and 10.1% higher, respectively, than other comparable models.
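For readers unfamiliar with ROUGE-L, the following is a minimal sketch of how such a score can be computed between a reference report and a generated report. It is not the evaluation code used by the LLaVA-Rad team. The function names `lcs_length` and `rouge_l_f1` are hypothetical, and the lowercase whitespace tokenization and the balanced F1 weighting are simplifying assumptions.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L as a balanced F1 score over LCS-based precision and recall.

    Assumption: tokens are lowercase whitespace-separated words.
    """
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "no acute cardiopulmonary process"
    generated = "no acute process"
    print(round(rouge_l_f1(reference, generated), 3))  # 0.857
```

In the example, the generated report shares a three-token subsequence with the four-token reference, giving a precision of 1.0 and a recall of 0.75. Note that ROUGE-L only measures word overlap. Clinically oriented metrics such as F1-RadGraph are reported alongside it because overlap alone does not capture factual correctness.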

Notably, LLaVA-Rad maintains strong performance across multiple datasets and remains stable even when tested on unseen data. The article attributes this to its modular design and data-efficient architecture. The research team also introduced CheXprompt, a metric for automatically scoring factual accuracy, which helps address the evaluation difficulties that arise in clinical applications.

The release of LLaVA-Rad is a major step toward applying foundation models in clinical settings. It provides a lightweight, efficient solution for radiology report generation and brings the technology closer to clinical needs.

Project address: https://github.com/microsoft/LLaVA-Med