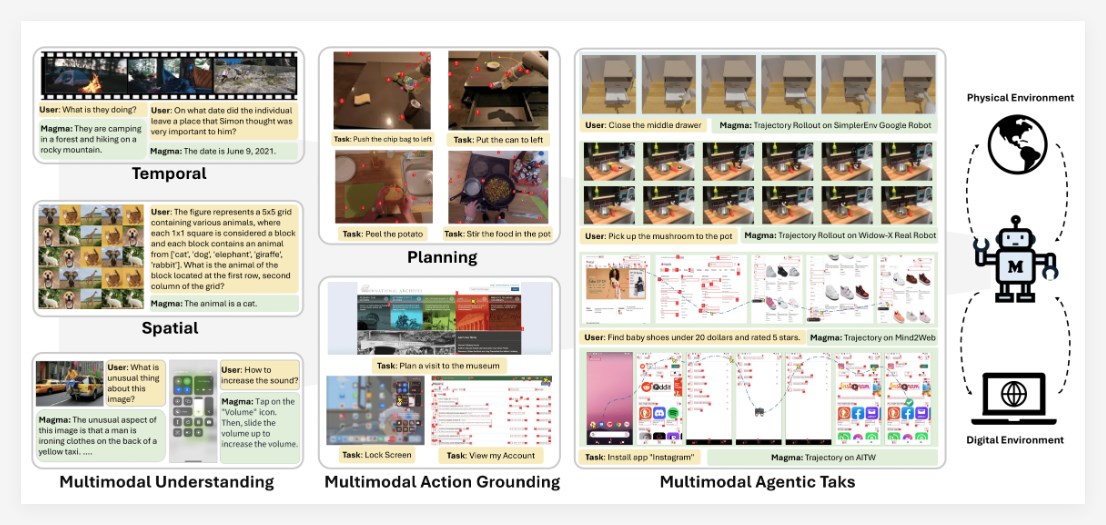

Microsoft recently released "Magma," a multimodal foundation model for AI agents, on its official website. The model can operate across both the digital and physical worlds and can process several data types at once, including images, videos, and text. Magma differs from traditional AI assistants in its ability to predict intent. This lets it more accurately infer the goals and future actions of people or objects in a video.

Magma has a wide range of applications. It can automate everyday tasks such as placing orders and checking the weather. It can also control physical robots and give users real-time help during activities such as playing chess. Because it is multimodal, Magma can perform well across different environments and adapt to a variety of complex tasks.

According to Microsoft, Magma is particularly well suited to AI-powered assistants and robots, helping them better understand their surroundings and act accordingly. For example, it could guide a home robot in learning how to organize objects it has never seen before, or help a virtual assistant generate step-by-step instructions for users. This capability can substantially improve a robot's ability to learn and its practical usefulness.

Magma is a vision-language-action (VLA) model. It is trained on large amounts of public visual and language data, which lets it combine verbal, spatial, and temporal intelligence to handle complex real-world tasks. As AI technology continues to develop, the release of Magma marks another significant step forward for smart assistants and robotics.

Project link: https://microsoft.github.io/Magma/