Recently, a research team from the ELLIS Institute Tübingen, the University of Maryland and Lawrence Livermore National Laboratory developed a new language model called Huginn. It uses a recurrent-depth architecture that significantly improves its reasoning capabilities. Unlike conventional reasoning models, Huginn can reason internally in the "latent space" of the neural network before producing its output, without being specially trained on chain-of-thought data.
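To make "recurrent-depth" concrete, here is a minimal sketch of a generic looped model. It assumes a simple layout: a prelude embeds the input once, one shared block is applied repeatedly to a latent state, and a coda reads out the result. The names (`ToyRecurrentDepthModel`, `prelude`, `coda`), the tanh update, the sizes and the random initial state are illustrative assumptions, not Huginn's actual transformer layers.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, scale, rng):
    """Gaussian-initialised weights; sizes and scale are arbitrary toy choices."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class ToyRecurrentDepthModel:
    """Hypothetical toy: prelude -> shared recurrent block (looped) -> coda."""

    def __init__(self, d_in, d_state, d_out, seed=0):
        rng = random.Random(seed)
        self.d_state = d_state
        self.prelude = random_matrix(d_state, d_in, 0.5, rng)     # embeds the input once
        self.w_state = random_matrix(d_state, d_state, 0.3, rng)  # shared recurrent weights
        self.w_input = random_matrix(d_state, d_state, 0.3, rng)  # re-injects the embedded input
        self.coda = random_matrix(d_out, d_state, 0.5, rng)       # reads out the final latent state

    def num_parameters(self):
        mats = (self.prelude, self.w_state, self.w_input, self.coda)
        return sum(len(m) * len(m[0]) for m in mats)

    def forward(self, x, num_iterations, noise_seed=0):
        e = matvec(self.prelude, x)
        injected = matvec(self.w_input, e)  # input signal added at every iteration
        # The latent state starts from random noise (an assumption of this sketch).
        noise = random.Random(noise_seed)
        s = [noise.gauss(0.0, 1.0) for _ in range(self.d_state)]
        for _ in range(num_iterations):
            # Same weights on every pass: more iterations add compute, not parameters.
            s = [math.tanh(a + b) for a, b in zip(matvec(self.w_state, s), injected)]
        return matvec(self.coda, s)


model = ToyRecurrentDepthModel(d_in=3, d_state=8, d_out=2)
shallow = model.forward([1.0, 0.0, -1.0], num_iterations=2)
deep = model.forward([1.0, 0.0, -1.0], num_iterations=32)
print(len(shallow), len(deep), model.num_parameters())  # 2 2 168
```

The point of the design is that "thinking longer" only means running the loop more times: the parameter count stays at 168 whether the model iterates twice or 32 times.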

The Huginn model was trained at large scale on 4,096 AMD GPUs of the Frontier supercomputer. Its training method is unusual: it uses a variable number of iterations, with the system randomly choosing how many times the recurrent computation block is repeated at each training step, so that the model learns to adapt to tasks of differing complexity.
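The randomized iteration count can be sketched as a per-step sampler. The article does not say which distribution is used, so this sketch assumes a log-normal Poisson draw (a log-normal rate, then a Poisson count) with a mean of about 32. It also assumes that gradients are propagated only through the last 8 iterations so memory stays bounded. The function names, `mean=32.0`, `sigma=0.5` and `backprop_depth=8` are all hypothetical parameters.

```python
import math
import random


def sample_poisson(rate, rng):
    """Knuth's multiplication method; adequate for the moderate rates used here."""
    threshold = math.exp(-rate)
    k, product = 0, rng.random()
    while product > threshold:
        k += 1
        product *= rng.random()
    return k


def sample_num_iterations(mean, sigma, rng, max_rate=500.0):
    """Assumed log-normal Poisson draw: rate ~ LogNormal, count ~ Poisson(rate)."""
    mu = math.log(mean) - 0.5 * sigma ** 2  # makes the log-normal rate average to `mean`
    rate = min(rng.lognormvariate(mu, sigma), max_rate)  # cap keeps Knuth's loop cheap
    return 1 + sample_poisson(rate, rng)  # always run the recurrent block at least once


def plan_training_step(rng, mean=32.0, sigma=0.5, backprop_depth=8):
    """Return (total, without_grad, with_grad) iteration counts for one step.

    Assumption: gradients flow only through the last `backprop_depth`
    iterations, however deep the sampled unroll is.
    """
    total = sample_num_iterations(mean, sigma, rng)
    with_grad = min(total, backprop_depth)
    return total, total - with_grad, with_grad


rng = random.Random(0)
plans = [plan_training_step(rng) for _ in range(5000)]
average_depth = sum(total for total, _, _ in plans) / len(plans)
print(f"average sampled depth: {average_depth:.1f}")  # roughly mean + 1
```

Because each step sees a different depth, a model trained this way cannot rely on one fixed number of iterations. This is one plausible reading of how random repetition helps it cope with tasks of different difficulty.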

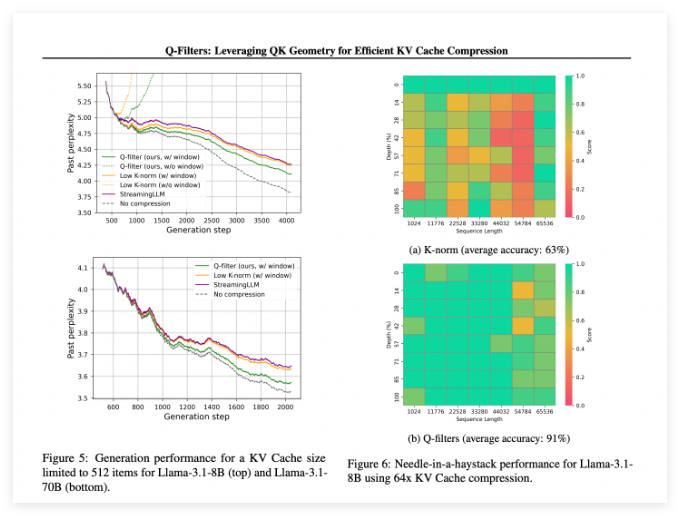

Tests show that Huginn performs strongly on math and programming tasks. On the GSM8K and MATH benchmarks it outperforms open-source models that have several times more parameters and were trained on several times more data. The researchers observed that Huginn adjusts the depth of its computation to the complexity of the task and develops chains of reasoning within the "latent space." Analysis shows that the model forms complex computational patterns there, such as circular (orbit-like) trajectories when solving mathematical problems. The researchers take this as evidence that Huginn can learn on its own to reason in novel ways.
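One way a looped model can adjust its depth at inference time is to stop iterating once its output stops changing. The sketch below assumes an exit rule based on the KL divergence between the output distributions of successive iterations. The threshold, the toy update `make_toy_step`, and the use of a contraction factor as a stand-in for "task difficulty" are all illustrative assumptions, not Huginn's actual criterion.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [v / total for v in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def run_with_early_exit(step, state, readout, threshold=1e-4, max_iterations=64):
    """Repeat `step` until the output distribution stops changing.

    Returns (final_state, iterations_used).
    """
    previous = softmax(readout(state))
    for iteration in range(1, max_iterations + 1):
        state = step(state)
        current = softmax(readout(state))
        if kl_divergence(current, previous) < threshold:
            return state, iteration
        previous = current
    return state, max_iterations


def make_toy_step(target, contraction):
    """Hypothetical update pulling the state toward `target`.

    A contraction factor close to 1 converges slowly, standing in for a
    "harder" problem.
    """
    def step(state):
        return [contraction * s + (1 - contraction) * t for s, t in zip(state, target)]
    return step


def identity(state):
    return state  # treat the latent state directly as logits


target = [2.0, -1.0, 0.5]
_, easy_iters = run_with_early_exit(make_toy_step(target, 0.3), [0.0, 0.0, 0.0], identity)
_, hard_iters = run_with_early_exit(make_toy_step(target, 0.9), [0.0, 0.0, 0.0], identity)
print(easy_iters, hard_iters)  # the "easy" problem exits after fewer iterations
```

The same loop spends more iterations on the slowly converging case, which mirrors in miniature the observation that computation depth tracks task complexity.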

The researchers note that although Huginn's absolute performance still needs improvement, it shows considerable potential as a proof-of-concept model. Because its capabilities grow as more computation is spent at inference time, larger models built on the Huginn architecture could become an alternative to conventional reasoning models. The team emphasized that Huginn's approach may capture types of reasoning that are difficult to express in words. They plan to continue this line of research and to explore extensions such as reinforcement learning to further improve the model's performance.