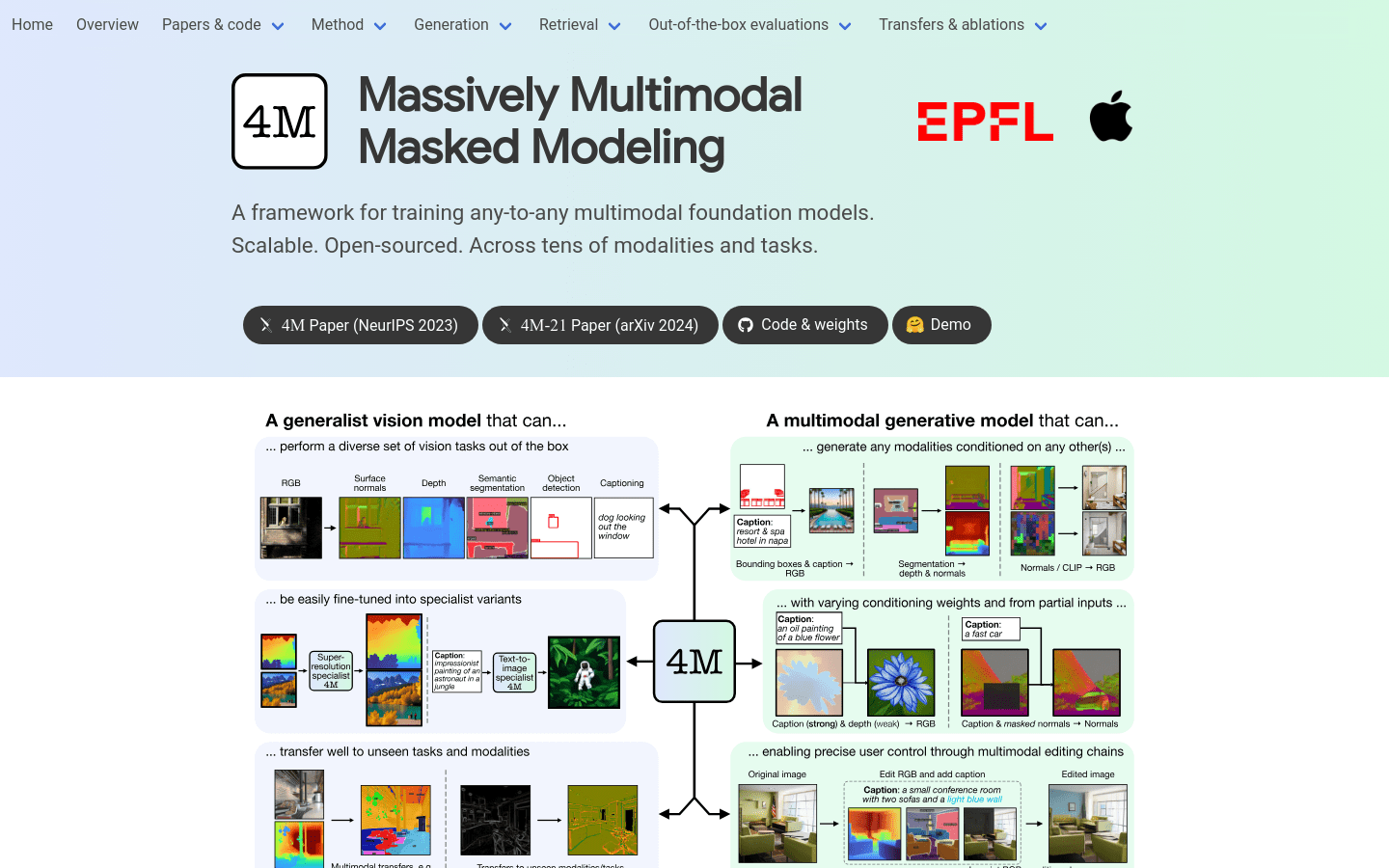

4M (Massively Multimodal Masked Modeling) is a framework for training multi-modal and multi-task models. In simpler terms, it is a single model that can handle many different visual tasks and generate new content from several kinds of information (such as images and text) at the same time. It has been shown to be versatile and adaptable across many vision tasks, paving the way for more advanced multi-modal learning in computer vision and beyond.

4M is primarily designed for researchers and developers in computer vision and machine learning. If you're interested in processing multiple types of data (like images and text) and building models that can generate new content, then 4M is relevant to your work.

4M offers a wide range of applications, including:

Image and Video Analysis: Understand and extract information from images and videos.

Content Creation: Generate new images, videos, or other content based on different inputs.

Data Augmentation: Create variations of existing data to improve model training.

Multi-modal Interaction: Allow different types of data to interact and influence each other.

Depth and Surface Normal Estimation: Generate depth maps and surface normals from a standard RGB image.

Image Inpainting: Reconstruct a complete RGB image from a partial input.

Multi-modal Retrieval: Find images that match a given text description.
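Retrieval of this kind usually reduces to ranking a gallery of images by how similar their embeddings are to a query embedding. The sketch below assumes the embeddings have already been computed and live in a shared space (for example, a text query and a set of images); the vectors, the file names, and the `rank_by_cosine` helper are hypothetical placeholders, not part of the 4M codebase.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_cosine(
    query: List[float], gallery: Dict[str, List[float]], top_k: int = 3
) -> List[Tuple[str, float]]:
    """Hypothetical helper: return the top_k gallery items most similar to the query."""
    scored = [(name, cosine_similarity(query, emb)) for name, emb in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Made-up embeddings standing in for real model outputs.
query_embedding = [0.9, 0.1, 0.0]
image_embeddings = {
    "beach.jpg": [0.8, 0.2, 0.1],
    "forest.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.0, 0.2, 0.9],
}

for name, score in rank_by_cosine(query_embedding, image_embeddings, top_k=2):
    print(f"{name}: {score:.3f}")
```

Cosine similarity ignores vector length, so only the direction of each embedding matters; with many images, the same ranking is typically done with a matrix multiplication or an approximate nearest-neighbour index instead of a Python loop.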

Key features of 4M include:

Multi-modal and Multi-task Training: 4M can predict or generate outputs from various types of input data simultaneously.

Unified Transformer Architecture: It uses a single Transformer encoder-decoder for all modalities. Each data type is first converted into a common format, a sequence of discrete tokens produced by a modality-specific tokenizer, so the same model can process all of them.

Partial Input Prediction: 4M can generate outputs even when only part of the input is available. This enables chained generation, in which the output for one modality is fed back as input when generating the next.

Self-Consistent Predictions: Because generated modalities can be used as inputs for later steps, the outputs tend to be consistent with one another across modalities.

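Fine-grained Multi-modal Generation and Editing: Supports generation and editing involving modalities such as semantic segmentation and depth maps, which can serve either as outputs or as conditions that control the generated image.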

Controllable Multi-modal Generation: Allows you to control the output by adjusting the weights of different input conditions.

Multi-modal Retrieval: Predicts global embeddings of pre-trained models such as DINOv2 and ImageBind, which can then be compared to retrieve images efficiently, for example from a textual description.
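The unified-architecture and partial-input ideas above can be illustrated with a toy multi-modal masked-modeling setup: every modality is already a sequence of discrete token ids, each token is tagged with its modality, and a random subset of tokens is chosen as the model input while a disjoint random subset becomes the prediction target. This is a minimal sketch under simplifying assumptions (uniform sampling over all tokens, made-up token ids, hypothetical function names); 4M's actual tokenizers and masking strategy are more involved.

```python
import random
from typing import Dict, List, Tuple

# (modality name, position within that modality, token id)
Token = Tuple[str, int, int]


def flatten_modalities(modalities: Dict[str, List[int]]) -> List[Token]:
    """Hypothetical helper: tag every token with its modality and position so all modalities share one sequence."""
    tokens: List[Token] = []
    for name, ids in modalities.items():
        for pos, token_id in enumerate(ids):
            tokens.append((name, pos, token_id))
    return tokens


def sample_input_and_target(
    tokens: List[Token], num_input: int, num_target: int, seed: int = 0
) -> Tuple[List[Token], List[Token]]:
    """Pick two disjoint random subsets: one visible to the encoder, one for the decoder to predict.

    Simplification: samples uniformly over all tokens rather than per modality.
    """
    if num_input + num_target > len(tokens):
        raise ValueError("input and target budgets exceed the number of tokens")
    rng = random.Random(seed)
    shuffled = tokens[:]
    rng.shuffle(shuffled)
    return shuffled[:num_input], shuffled[num_input:num_input + num_target]


# Made-up token ids standing in for the output of modality-specific tokenizers.
example = {
    "rgb": [12, 7, 99, 3],
    "depth": [40, 41, 42, 43],
    "caption": [501, 502, 503],
}

all_tokens = flatten_modalities(example)
visible, targets = sample_input_and_target(all_tokens, num_input=4, num_target=3)
print("encoder input:", visible)
print("decoder targets:", targets)
```

In a setup like this, the model never sees every token during training, so at inference time it can be handed whichever modalities are available and asked to predict the rest.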
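Weighting input conditions is commonly done in the style of classifier-free guidance: the unconditional prediction is shifted toward each conditional prediction in proportion to that condition's weight, or away from it when the weight is negative. The sketch below assumes this formulation, guided = uncond + Σᵢ wᵢ · (condᵢ − uncond), and works on plain lists of logits; the condition names and numbers are made up, and this is not 4M's own code.

```python
import math
from typing import Dict, List


def guided_logits(
    unconditional: List[float],
    conditional: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Combine per-condition logits as uncond + sum_i w_i * (cond_i - uncond).

    Conditions missing from `weights` get weight 0.0 and have no effect.
    """
    result = list(unconditional)
    for name, logits in conditional.items():
        w = weights.get(name, 0.0)
        for i, (c, u) in enumerate(zip(logits, unconditional)):
            result[i] += w * (c - u)
    return result


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits over a 3-token vocabulary for one generation step.
uncond = [0.0, 0.0, 0.0]
cond = {
    "caption": [2.0, 0.0, -1.0],
    "depth": [0.0, 1.5, 0.0],
}
mixed = guided_logits(uncond, cond, {"caption": 1.0, "depth": 0.5})
print(softmax(mixed))
```

A weight of zero ignores a condition, larger weights make the output follow it more closely, and a negative weight pushes the output away from it.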

Here's a step-by-step guide to get started:

1. Access the GitHub repository: Find the 4M code and pre-trained models on GitHub.

2. Install dependencies: Follow the documentation to set up the necessary software and libraries.

3. Load a pre-trained model: Download and load one of the pre-trained 4M models.

4. Prepare your input data: This could be text, images, or other relevant data types.

5. Select a task: Choose whether you want to perform generation or retrieval.

6. Run the model: Execute the model and observe the results. Adjust parameters as needed.

7. Post-process the output: Convert the generated tokens back into the desired format (e.g., images).
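Steps 4 to 6, combined with chained generation, can be sketched as a loop that generates one target modality at a time and feeds each result back in as an extra condition. The `fake_generate` function here is a hypothetical stand-in for a call into a real model; it only records which modalities it was conditioned on, so the control flow can be inspected.

```python
from typing import Callable, Dict, List

Outputs = Dict[str, str]


def fake_generate(target: str, conditions: Outputs) -> str:
    """Hypothetical stand-in for a model call: returns a label naming the modalities it was conditioned on."""
    return f"{target}<-" + "+".join(sorted(conditions))


def chained_generation(
    inputs: Outputs,
    targets: List[str],
    generate: Callable[[str, Outputs], str] = fake_generate,
) -> Outputs:
    """Generate targets in order, adding each result to the conditioning set for the next step."""
    state = dict(inputs)
    for target in targets:
        state[target] = generate(target, state)
    return state


result = chained_generation({"caption": "a red car on a street"}, ["depth", "rgb"])
for modality, value in result.items():
    print(modality, "=>", value)
```

The order of `targets` matters: here the RGB step is conditioned on both the caption and the depth map generated just before it.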
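Step 7 depends on the tokenizers: a modality that was turned into discrete tokens has to be mapped back through a matching decoder. The toy sketch below shows the idea with a vector-quantization codebook, where encoding picks the nearest codebook entry and decoding looks that entry back up. The codebook values and function names are hypothetical; 4M's real tokenizers are learned neural networks and are far more complex than this.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def encode(patches: List[Vector], codebook: List[Vector]) -> List[int]:
    """Map each patch feature to the index of its nearest codebook entry (its token id)."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(p, codebook[k]))
        for p in patches
    ]


def decode(token_ids: List[int], codebook: List[Vector]) -> List[Vector]:
    """Map token ids back to approximate features by codebook lookup."""
    return [codebook[t] for t in token_ids]


# Hypothetical 2-D codebook and patch features.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
patches = [[0.9, 0.1], [0.2, 0.8], [0.1, 0.0]]

ids = encode(patches, codebook)
reconstructed = decode(ids, codebook)
print(ids, reconstructed)
```

Decoding is lossy: each reconstructed feature is the codebook entry, not the original value, which is why tokenizer quality directly limits the quality of the final output.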

This information should provide a clear understanding of 4M and its capabilities. Remember to consult the official GitHub repository for the most up-to-date documentation and instructions.