Product introduction

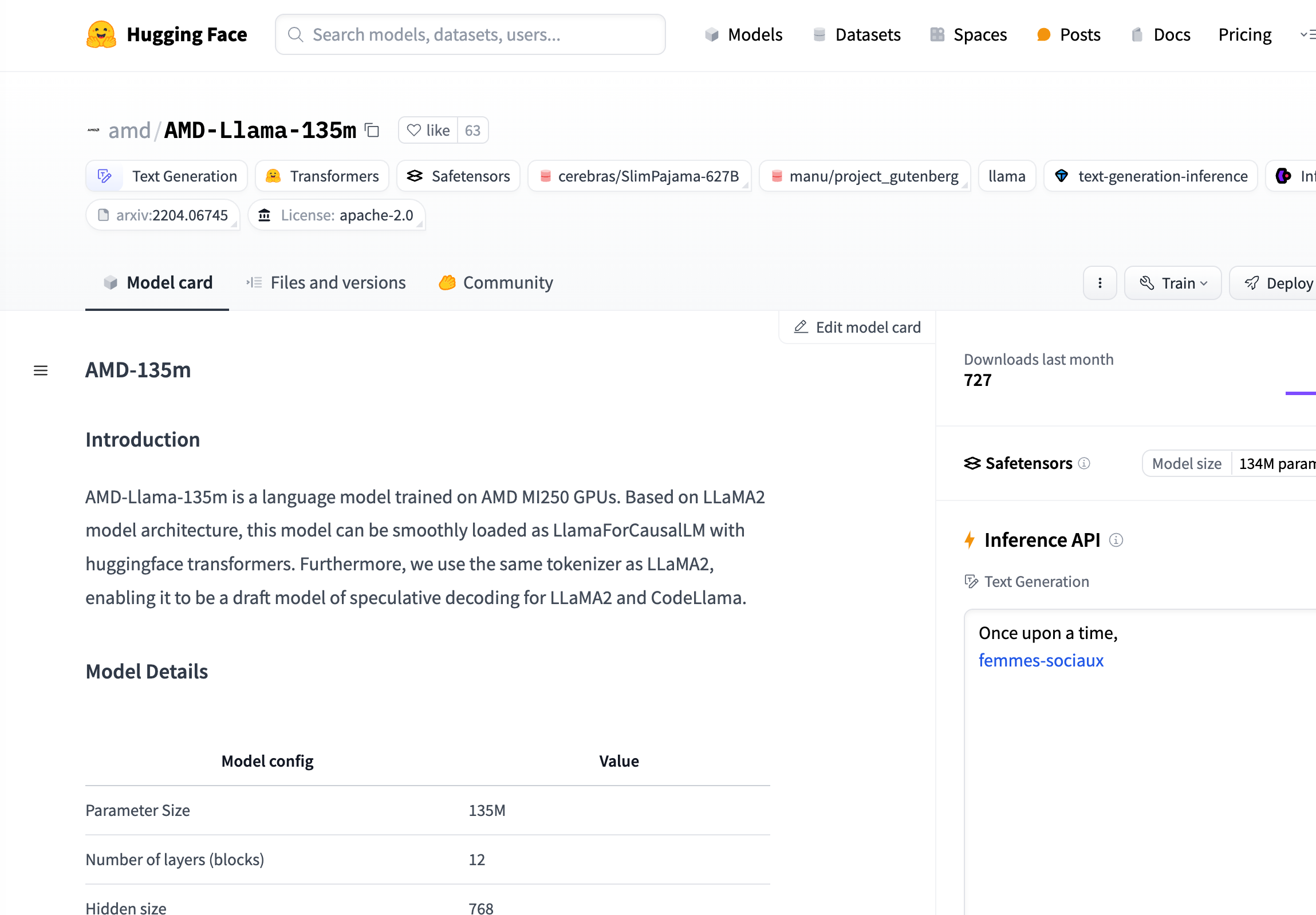

AMD-Llama-135m is a 135M-parameter language model built on the LLaMA2 architecture and trained on AMD MI250 GPUs. It supports text generation and code generation, which makes it suitable for a range of natural language processing tasks.

Target audience

This product is suitable for developers, data scientists, and machine learning engineers who need text generation, code generation, or other natural language processing capabilities.

Usage scenario examples

Story generation: draft novels, short stories, and similar creative text.

Coding assistance: help with writing and debugging code.

Natural language processing research: study language analysis and understanding.

Product features

Supports text generation

Supports code generation

Based on the LLaMA2 model architecture

Uses the same tokenizer as LLaMA2

Can be loaded and used on the Hugging Face platform

Supports pre-training and fine-tuning

Supports multi-language text generation
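
The fine-tuning support listed above can be illustrated with a single training step. This is a minimal sketch, not the authors' training recipe: the Hub id `amd/AMD-Llama-135m`, the helper names `chunk_token_ids` and `fine_tune_one_step`, the block size, and the learning rate are all assumptions chosen for illustration. It relies on the `transformers` and `torch` packages being installed.

```python
MODEL_ID = "amd/AMD-Llama-135m"  # assumed Hugging Face Hub id, not stated in the text above


def chunk_token_ids(token_ids, block_size):
    """Split a flat list of token ids into equal blocks, dropping the short tail."""
    usable = len(token_ids) - len(token_ids) % block_size
    return [token_ids[i:i + block_size] for i in range(0, usable, block_size)]


def fine_tune_one_step(text, block_size=128, lr=5e-5):
    """Run one optimizer step of causal-LM fine-tuning on `text` and return the loss.

    Hypothetical helper: block_size and lr are illustrative defaults.
    """
    # Imported lazily so chunk_token_ids stays usable without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    model.train()

    # Tokenize the raw text and cut it into fixed-length training blocks.
    token_ids = tokenizer(text)["input_ids"]
    blocks = chunk_token_ids(token_ids, block_size)
    if not blocks:
        raise ValueError("text is shorter than one block; lower block_size")

    input_ids = torch.tensor(blocks)
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)

    # Passing labels=input_ids makes the model compute the next-token loss;
    # the one-position shift happens inside the model.
    loss = model(input_ids=input_ids, labels=input_ids).loss
    loss.backward()
    optimizer.step()
    optimizer.zero_grad()
    return loss.item()
```

A real fine-tuning run would loop this step over many batches of task-specific data and save the weights afterwards; the single step only shows how the pieces fit together.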

Tutorial

1. Load the model from Hugging Face

2. Use AutoTokenizer for text encoding

3. Generate text or code through the model

4. Decode the generated text or code

5. Optionally continue pre-training or fine-tune the model to adapt it to a specific task

6. Call the model from your application to generate text or code
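
Steps 1 to 4 above can be sketched with the Hugging Face `transformers` library. This is a minimal sketch under stated assumptions: the Hub id `amd/AMD-Llama-135m` is assumed rather than taken from the text, `generate` and `strip_prompt` are hypothetical helper names, and greedy decoding with 50 new tokens is just an illustrative setting.

```python
MODEL_ID = "amd/AMD-Llama-135m"  # assumed Hugging Face Hub id


def strip_prompt(full_text, prompt):
    """Return only the continuation when the decoded text echoes the prompt."""
    return full_text[len(prompt):] if full_text.startswith(prompt) else full_text


def generate(prompt, max_new_tokens=50):
    """Load the model, encode the prompt, generate, and decode the continuation."""
    # Imported lazily so strip_prompt stays usable without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 1: load the tokenizer and model from Hugging Face.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Step 2: encode the prompt into PyTorch tensors.
    inputs = tokenizer(prompt, return_tensors="pt")

    # Step 3: generate a continuation (greedy decoding, chosen for repeatability).
    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, do_sample=False
    )

    # Step 4: decode the token ids back into text and drop the echoed prompt.
    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    return strip_prompt(text, prompt)


# Example call (downloads the weights on first use):
#   print(generate("Once upon a time"))
#   print(generate("def fibonacci(n):"))
```

For step 6, an application would typically load the tokenizer and model once at startup and reuse them across requests instead of reloading them inside every call as this sketch does.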

Hopefully the above information will help you better understand and use the AMD-Llama-135m model.