What is StackBlitz?

StackBlitz is a web-based IDE tailored for the JavaScript ecosystem. It uses WebContainers, powered by WebAssembly, to create instant Node.js environments directly in your browser. This delivers exceptional speed and security.

---

Product introduction

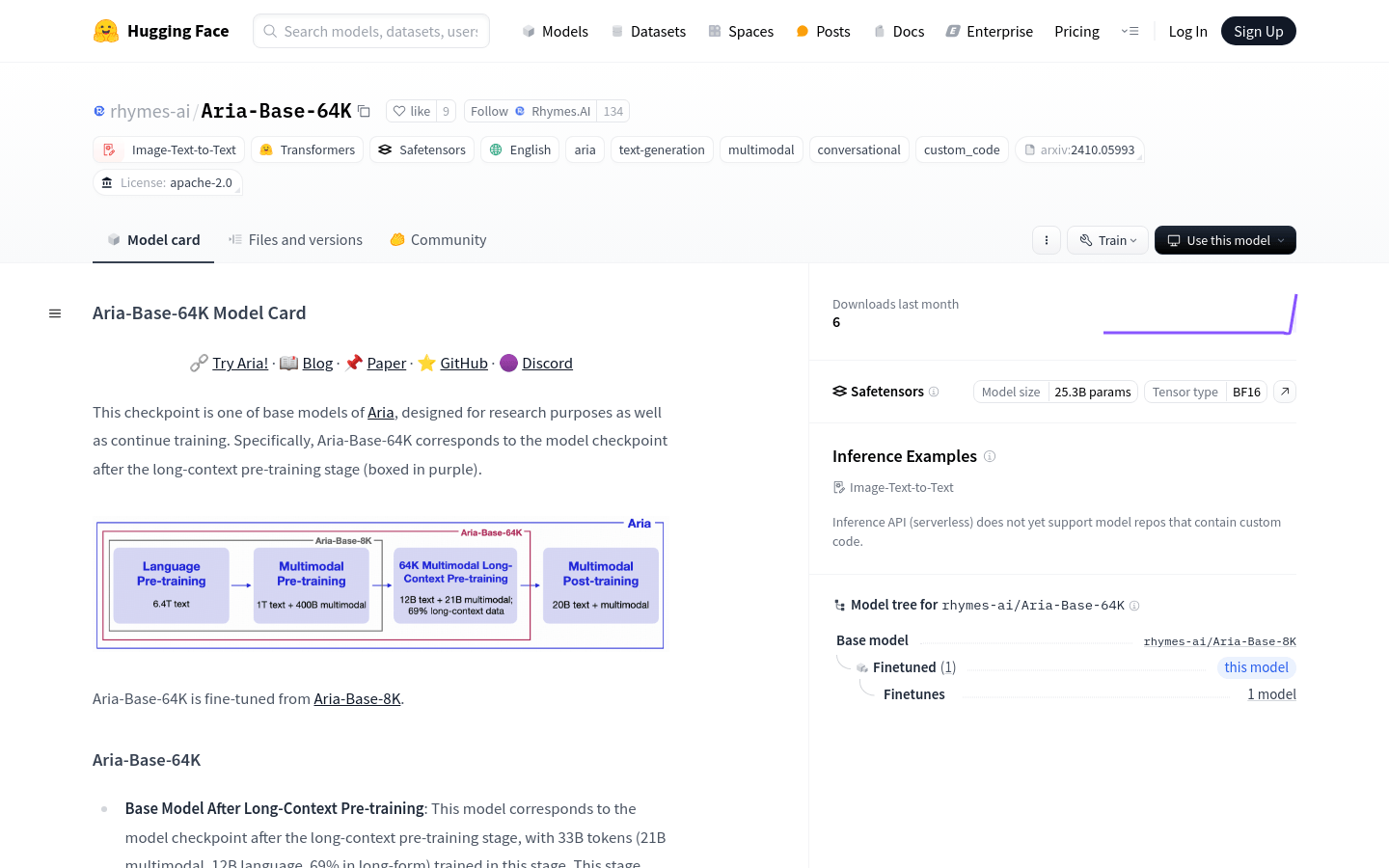

Aria-Base-64K is one of the base models in the Aria series, intended for research and further training.

Pre-training: Trained on 33B tokens (21B multimodal, 12B language), 69% of which are long-form text.
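As a quick consistency check on these figures, the multimodal and language splits sum to the stated total, and the long-form share can be converted to a token count. This sketch reads the 69% as a share of all 33B tokens, which is an assumption; if it applied only to the 12B language tokens, the long-form figure would be about 8.3B instead.

```python
# Consistency check on the Aria-Base-64K pre-training mix (figures in billions of tokens).
MULTIMODAL_B = 21.0
LANGUAGE_B = 12.0
TOTAL_B = 33.0
# Assumption: 69% is a share of the full 33B tokens, not of the 12B language tokens.
LONG_FORM_SHARE = 0.69


def long_form_tokens_b(total_b: float = TOTAL_B, share: float = LONG_FORM_SHARE) -> float:
    """Approximate number of long-form tokens, in billions."""
    return total_b * share


if __name__ == "__main__":
    assert MULTIMODAL_B + LANGUAGE_B == TOTAL_B  # 21B + 12B = 33B
    print(f"long-form: ~{long_form_tokens_b():.1f}B tokens")  # long-form: ~22.8B tokens
    print(f"multimodal share: {MULTIMODAL_B / TOTAL_B:.0%}")  # multimodal share: 64%
```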

Application scenarios: Suitable for continued pre-training or fine-tuning on long-video QA or long-document QA datasets.

Advantages: Even with limited resources, it can be post-trained on short instruction-tuning datasets and still transfer to long-text question-answering scenarios.

Multimodal understanding: Can understand up to 250 high-resolution images or 500 medium-resolution images.
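These image limits follow from the 64K-token context window if each image costs a fixed number of visual tokens. The sketch below assumes 256 tokens per high-resolution image and 128 per medium-resolution image, and reads "64K" as 65,536 tokens; none of these numbers appear in this document. The 1,536-token text reserve in the example is purely illustrative, chosen to show how holding back a small budget for the prompt reproduces the 250 / 500 figures.

```python
# Illustrative image budget for a 64K-token context window.
# Assumptions: "64K" = 65,536 tokens; the per-image costs below are not from this document.
CONTEXT_WINDOW = 64 * 1024
TOKENS_PER_IMAGE = {"high": 256, "medium": 128}


def max_images(resolution: str, context: int = CONTEXT_WINDOW, reserved: int = 0) -> int:
    """How many images of one resolution fit after holding back `reserved` tokens for text."""
    per_image = TOKENS_PER_IMAGE[resolution]
    return (context - reserved) // per_image


if __name__ == "__main__":
    print(max_images("high"), max_images("medium"))  # 256 512
    # A hypothetical 1,536-token text reserve leaves 64,000 tokens for images.
    print(max_images("high", reserved=1536), max_images("medium", reserved=1536))  # 250 500
```

Under these assumptions, a medium-resolution image costs half as many tokens as a high-resolution one, which is why the medium-resolution limit is twice the high-resolution limit.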

Base performance: Performs well in both language and multimodal scenarios, on par with Aria-Base-8K.

Training features: Only about 3% of the training data was formatted with the chat template.

Target user base

Researchers and developers, especially those working with long-text and multimodal datasets.

Examples

Develop a video Q&A system with a stronger understanding of video content.

Apply it to long-document QA to make document search and understanding more efficient.

Develop new multimodal applications, such as joint reasoning over images and text.

Product features

Long-text pre-training

Multimodal understanding

Strong base performance

Low proportion of chat-template training data

Quick-start support

Advanced inference and fine-tuning

Tutorial

1. Install the required libraries: Use pip to install transformers, accelerate, and sentencepiece.

2. Load the model: Use AutoModelForCausalLM.from_pretrained to load the Aria-Base-64K model.

3. Process the inputs: Use AutoProcessor.from_pretrained to load the processor, then use it to process the input text and images.

4. Inference: Pass the processed inputs to the model to run generation.

5. Decode the output: Use the processor to decode the model's output tokens into the final result.

6. Advanced usage: Visit the code repository on GitHub for more advanced inference and fine-tuning.
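The tutorial steps can be sketched in Python roughly as follows. This is a minimal sketch, not the official example: the Hub ID `rhymes-ai/Aria-Base-64K`, `trust_remote_code=True`, bfloat16 weights, and passing a plain prompt (with an optional PIL image) straight to the processor are all assumptions, and the model card may require a specific prompt or image-placeholder format. It also assumes `torch` (plus `pillow` for images) is installed alongside the step 1 packages, and a GPU with enough memory. The helper `new_tokens` is a hypothetical name introduced here.

```python
"""Sketch of the Aria-Base-64K tutorial steps with Hugging Face transformers.

Assumptions (not from the original text): the Hub ID below, trust_remote_code=True,
bfloat16 weights, and the prompt/image format passed to the processor.
"""
from typing import Sequence

MODEL_ID = "rhymes-ai/Aria-Base-64K"  # assumed Hugging Face Hub ID


def new_tokens(output_ids: Sequence[int], prompt_len: int) -> list:
    """Drop the echoed prompt so only newly generated token IDs are decoded."""
    return list(output_ids[prompt_len:])


def generate(prompt: str, image=None, max_new_tokens: int = 256) -> str:
    # Heavy imports are deferred so this module can be imported without torch/transformers.
    import torch
    from transformers import AutoModelForCausalLM, AutoProcessor

    # Step 2: load the model (device_map="auto" relies on the accelerate package).
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        device_map="auto",
        torch_dtype=torch.bfloat16,
        trust_remote_code=True,
    )
    # Step 3: load the processor and turn text (and an optional image) into tensors.
    processor = AutoProcessor.from_pretrained(MODEL_ID, trust_remote_code=True)
    inputs = processor(text=prompt, images=image, return_tensors="pt")
    if "pixel_values" in inputs:
        inputs["pixel_values"] = inputs["pixel_values"].to(model.dtype)
    inputs = {k: v.to(model.device) for k, v in inputs.items()}

    # Step 4: inference without tracking gradients.
    with torch.inference_mode():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 5: decode only the continuation, not the prompt.
    prompt_len = inputs["input_ids"].shape[1]
    generated = new_tokens(output[0].tolist(), prompt_len)
    return processor.decode(generated, skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("Summarize the key ideas of long-context pre-training."))
```

Deferring the `torch` and `transformers` imports into `generate` keeps the module importable on machines without those packages, and slicing off the prompt before decoding (step 5) keeps the input from being echoed back in the result.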