What is Aya Expanse?

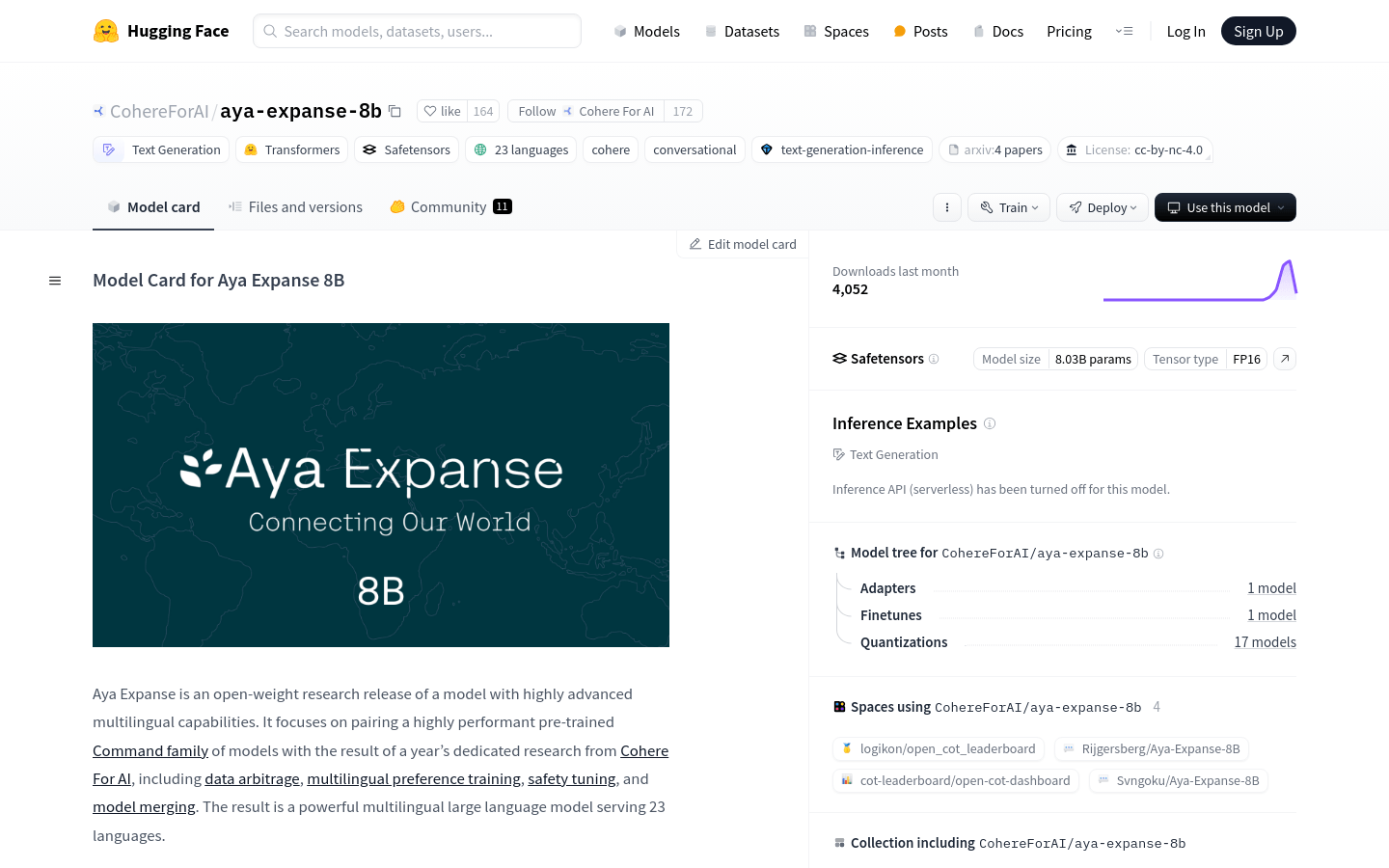

Aya Expanse is a research model with advanced multilingual capabilities. It combines a strong pre-trained base model with the results of Cohere For AI's research on data curation, multilingual preference training, safety tuning, and model merging. The result is a multilingual large language model that supports 23 languages.

Who is the target audience?

The primary users are researchers, developers, and businesses that need multilingual text generation. It is particularly useful for international companies that handle multilingual text data and for academic institutions conducting cross-language research.

What are some example use cases?

In a multilingual writing assistant, Aya Expanse can help users write in different languages. In a multilingual Q&A system, it can understand and answer questions in various languages. In a cooking app, it can provide cooking instructions in different languages.

What are the key features?

Supports text generation in 23 languages

Uses an optimized transformer architecture for autoregressive language modeling

Post-trained with supervised fine-tuning, preference training, and model merging

Supports a context length of up to 8K tokens

Can be tried in a Hugging Face Space without downloading the weights

Includes detailed installation and usage guides for easy developer onboarding

Loads through the Hugging Face transformers library (installable via pip) using AutoTokenizer and AutoModelForCausalLM

Provides community-contributed example notebooks demonstrating various use cases
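Because the model is autoregressive, the prompt and the generated tokens share the same context window. The sketch below shows how a generation budget might be derived from the 8K limit. It assumes "8K" means 8192 tokens, and the helper name `remaining_generation_budget` is hypothetical, not part of any library.

```python
# Assumption: "8K context length" is read here as 8192 tokens.
CONTEXT_LENGTH = 8192


def remaining_generation_budget(prompt_tokens: int,
                                context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens can still be generated after a prompt.

    Hypothetical helper for illustration: the prompt and the generated
    text must fit in the context window together.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt that already fills or exceeds the window leaves no room.
    return max(context_length - prompt_tokens, 0)
```

A value computed this way could be used as an upper bound for the number of new tokens requested at generation time.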

How do you use it?

1. Install the transformers library by running pip install 'git+https://github.com/huggingface/transformers.git' in the terminal or command prompt.

2. Import AutoTokenizer and AutoModelForCausalLM from the transformers library in your Python code.

3. Load the model and tokenizer using the model ID CohereForAI/aya-expanse-8b.

4. Format the user messages with the tokenizer's chat template so they match the input format the model expects.

5. Generate text using the model's generate method.

6. Decode the generated text using the tokenizer's decode method.

7. Output the generated text to the console or use it in your application.
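The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not the official example: the function names `build_messages` and `generate_reply`, the `max_new_tokens` default of 100, and the choice to import transformers inside the function are assumptions made for illustration. It also assumes PyTorch is installed alongside transformers.

```python
from typing import Dict, List

MODEL_ID = "CohereForAI/aya-expanse-8b"  # model ID from step 3


def build_messages(user_text: str) -> List[Dict[str, str]]:
    """Wrap the user's text in the chat-message structure (part of step 4)."""
    return [{"role": "user", "content": user_text}]


def generate_reply(user_text: str, max_new_tokens: int = 100) -> str:
    """Run steps 2 to 6 and return the decoded reply.

    The first call downloads the model weights, which is a large download.
    """
    # Step 2: import the classes. Done inside the function (an assumption of
    # this sketch) so the module itself imports without loading transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load the tokenizer and the model.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Step 4: apply the chat template and tokenize into PyTorch tensors.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_text),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    )

    # Step 5: generate a continuation of the prompt.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 6: decode only the newly generated tokens, skipping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:],
                            skip_special_tokens=True)
```

Step 7 then reduces to a single call such as `print(generate_reply("Write a short greeting in Turkish."))`. Loading the model once and reusing it across calls would be the natural next change for an application that handles many requests.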