CohereForAI's Aya Vision 8B is a multilingual vision-language model with 8 billion parameters. It is optimized for a range of vision-language tasks, including OCR, image captioning, visual reasoning, summarization, and question answering. The model is built on the C4AI Command R7B language model combined with the SigLIP2 vision encoder, supports 23 languages, and has a 16K context length. Its main advantages are multilingual support, strong visual understanding, and a wide range of applicable scenarios. The model is released with open weights and aims to support the global research community. It is licensed under CC-BY-NC, and users must also comply with C4AI's Acceptable Use Policy.

Target audience:

This model is suitable for researchers, developers, and enterprise users who need vision-language processing capabilities. It is especially suited to scenarios that require multilingual support and efficient visual understanding, such as intelligent customer service, image annotation, and content generation. Because the weights are openly released, users can also customize and optimize the model further.

Example usage scenarios:

Try the model's vision-language capabilities in the Cohere playground or the Hugging Face Space.

Chat with Aya Vision via WhatsApp to test its multilingual dialogue and image comprehension.

Use the model for text recognition (OCR) in images, supporting text extraction in multiple languages.

Product Features:

Supports 23 languages, including Chinese, English, and French

Offers strong vision-language understanding for OCR, image captioning, visual reasoning, and other tasks

Supports a 16K context length, so it can handle longer text input and output

Can be used directly through the Hugging Face platform, which provides detailed usage guides and sample code

Accepts both image and text input and generates text output

How to use:

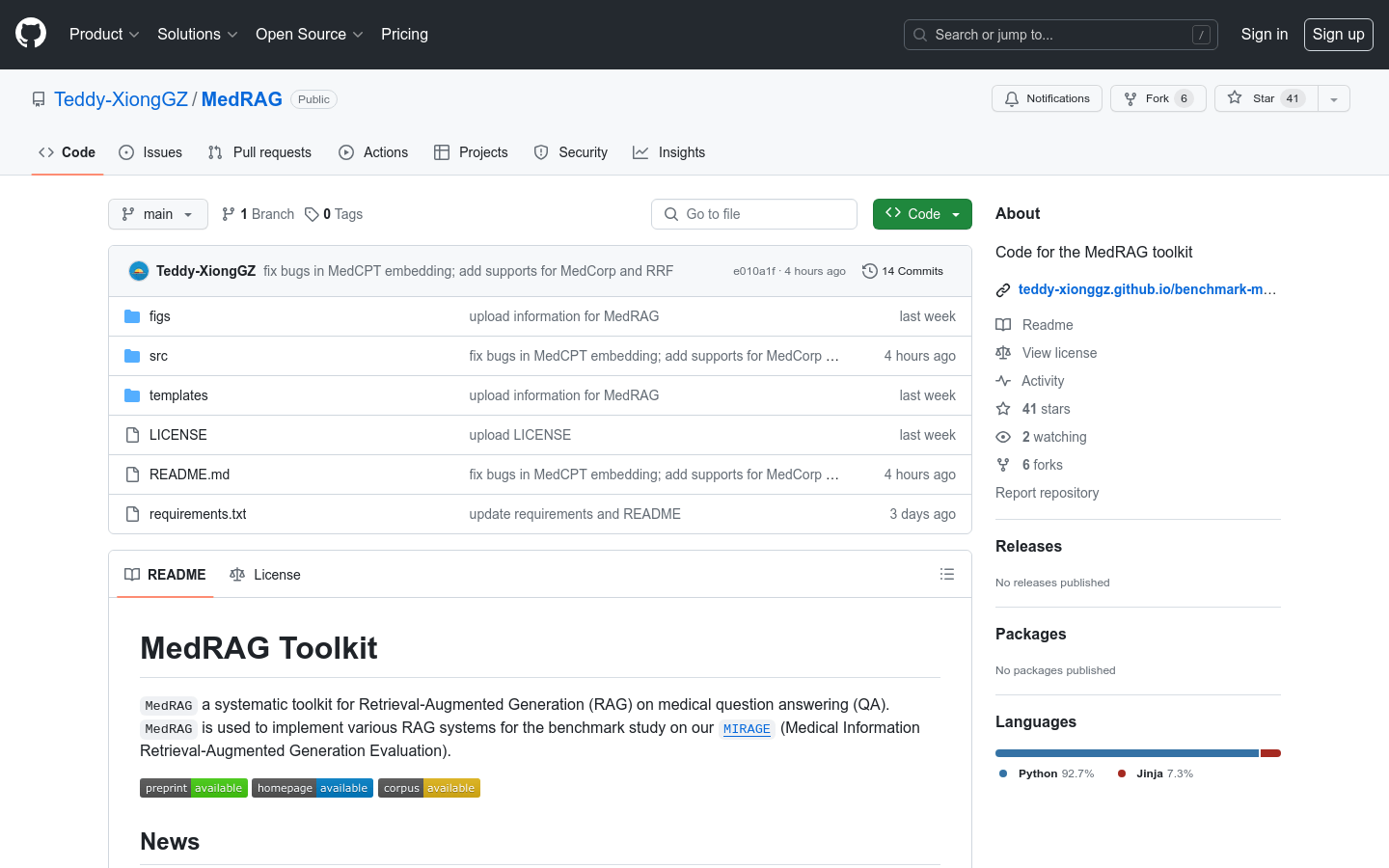

1. Install the necessary libraries: Install the transformers library from the source code to support the Aya Vision model.

2. Import the model and processor: Load the processor with AutoProcessor and the model with AutoModelForImageTextToText.

3. Prepare input data: organize images and text in the specified format and use the processor to process the input.

4. Generate output: Call the generate method of the model to generate text output.

5. Use pipeline to simplify operations: Run image-text-to-text generation directly through the transformers pipeline.
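
The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not the official sample code: it assumes the model ID `CohereForAI/aya-vision-8b`, a transformers build that includes Aya Vision support, and enough GPU memory to load the weights in half precision. The helper names (`build_messages`, `generate_answer`, `generate_answer_with_pipeline`) and the example image URL are hypothetical and chosen only for illustration.

```python
# Step 1 (run in a shell, not in Python): install transformers from source, e.g.
#   pip install git+https://github.com/huggingface/transformers.git

MODEL_ID = "CohereForAI/aya-vision-8b"  # assumed Hugging Face model ID


def build_messages(image_url, question):
    """Step 3: one user turn that holds an image and a text prompt."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": question},
            ],
        }
    ]


def generate_answer(messages, max_new_tokens=300):
    """Steps 2-4: load processor and model, process the input, generate text."""
    # Imported lazily so the rest of this sketch runs without the heavy dependencies.
    import torch
    from transformers import AutoModelForImageTextToText, AutoProcessor

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = AutoModelForImageTextToText.from_pretrained(
        MODEL_ID, device_map="auto", torch_dtype=torch.float16
    )

    # The processor applies the chat template and prepares image and text tensors.
    inputs = processor.apply_chat_template(
        messages,
        padding=True,
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    prompt_len = inputs["input_ids"].shape[1]
    return processor.tokenizer.decode(
        output_ids[0][prompt_len:], skip_special_tokens=True
    )


def generate_answer_with_pipeline(messages, max_new_tokens=300):
    """Step 5: the same task through the image-text-to-text pipeline."""
    from transformers import pipeline

    pipe = pipeline(task="image-text-to-text", model=MODEL_ID, device_map="auto")
    return pipe(text=messages, max_new_tokens=max_new_tokens, return_full_text=False)


# Building the messages needs no model download; the URL is a placeholder.
messages = build_messages(
    "https://example.com/receipt.jpg",  # hypothetical image URL
    "What text appears in this image?",
)
print(messages[0]["content"][1]["text"])
```

Calling `generate_answer(messages)` or `generate_answer_with_pipeline(messages)` downloads the model weights on first use, so the sketch leaves those calls to the reader. Because the model is multilingual, the question can be written in any of the supported languages.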