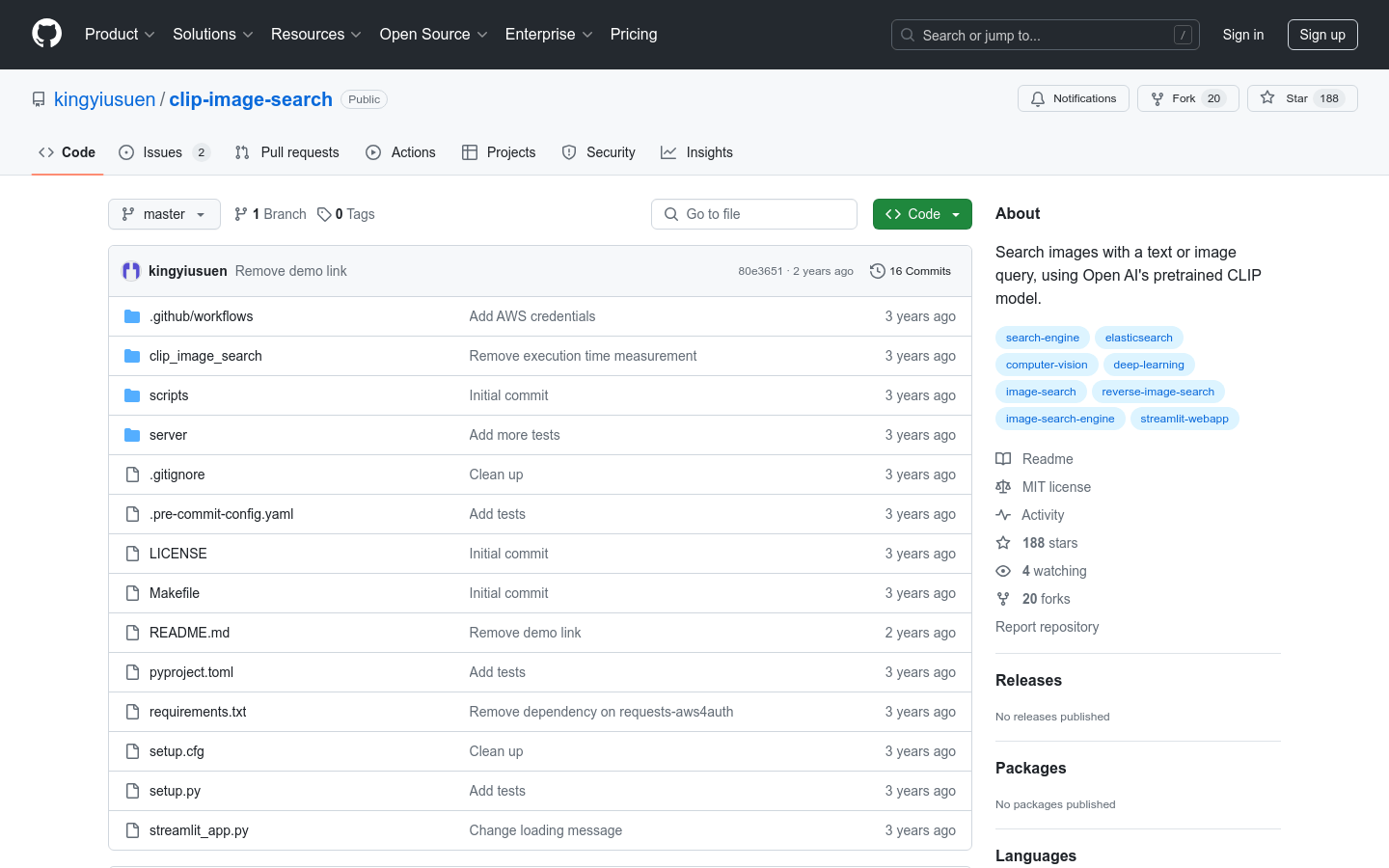

clip-image-search is an image search tool built on OpenAI's pre-trained CLIP model. It retrieves images from either a text query or an image query. CLIP is trained to map images and text into the same latent space, so a query and an image can be compared directly with a similarity metric. The tool uses images from the Unsplash dataset, runs k-nearest-neighbor search on Amazon Elasticsearch Service, serves queries through an AWS Lambda function behind API Gateway, and provides a front end built with Streamlit.

Target audience:

The tool is aimed at developers and researchers who need image search, especially those interested in image retrieval based on deep learning models. It suits them because it offers a simple, efficient way to retrieve images and can be integrated into existing systems.

Example usage scenarios:

Researchers use the tool to retrieve pictures that match specific text descriptions for visual recognition research

Developers integrate the tool into their applications to provide text-based image search capabilities

Educators use this tool to help students understand the relationship between images and text

Product Features:

Compute a feature vector for each picture in the dataset using the image encoder of the CLIP model

Index each image by its image ID, storing its URL and feature vector

Compute the feature vector of the query (text or picture) with the matching CLIP encoder

Compute the cosine similarity between the query feature vector and the image feature vectors in the dataset

Return the k pictures with the highest similarity
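
The ranking described in the last two features can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the project's code: the function name `top_k_by_cosine` and the toy two-dimensional vectors are assumptions, and in the real tool the vectors would come from the CLIP encoders while the search itself runs on Elasticsearch.

```python
import numpy as np


def top_k_by_cosine(query_vec, image_vecs, k=5):
    """Return (row_index, cosine_similarity) for the k most similar images.

    Hypothetical helper for illustration; row_index refers to the position
    of the image in image_vecs, not to a dataset image ID.
    """
    query = np.asarray(query_vec, dtype=np.float64)
    images = np.asarray(image_vecs, dtype=np.float64)

    # Normalize to unit length so that a dot product equals cosine similarity.
    query = query / np.linalg.norm(query)
    images = images / np.linalg.norm(images, axis=1, keepdims=True)

    scores = images @ query            # one similarity score per image
    order = np.argsort(-scores)[:k]    # indices sorted by descending score
    return [(int(i), float(scores[i])) for i in order]


# Toy stand-ins for CLIP feature vectors (real ones are much longer).
image_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
results = top_k_by_cosine([1.0, 0.0], image_vectors, k=2)
```

With these toy vectors the first image matches the query exactly, and the third image (at 45 degrees to the query) ranks second.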

How to use:

Install dependencies

Download the Unsplash dataset and extract the metadata

Create an index and upload the image feature vectors to Elasticsearch

Build a Docker image for AWS Lambda

Run the Docker image as a container and test it with a POST request

Run the Streamlit application as the front end
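
As a rough illustration of the indexing and testing steps, the sketch below builds the JSON bodies that the k-NN plugin on Amazon Elasticsearch Service accepts, plus a POST request for a Lambda container running locally. Everything project-specific here is an assumption: the field name `feature_vector`, the 512-dimensional vectors (the size produced by CLIP ViT-B/32), the payload shape, and port 9000. The invocation path is the one exposed by the AWS Lambda Runtime Interface Emulator; the project's own scripts may do all of this differently.

```python
import json
import urllib.request

VECTOR_FIELD = "feature_vector"  # hypothetical field name, not from the project


def knn_index_body(dimension=512):
    """Index settings and mapping that enable k-NN search on a vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "url": {"type": "keyword"},
                VECTOR_FIELD: {"type": "knn_vector", "dimension": dimension},
            }
        },
    }


def knn_query_body(query_vec, k=5):
    """Search body asking for the k nearest neighbors of query_vec."""
    return {
        "size": k,
        "query": {"knn": {VECTOR_FIELD: {"vector": list(query_vec), "k": k}}},
    }


def local_lambda_request(payload, port=9000):
    """Build (without sending) a POST request for a locally running Lambda container.

    Assumes the container was started with the host port mapped to the
    emulator's port 8080, e.g. `docker run -p 9000:8080 <image>`.
    """
    url = f"http://localhost:{port}/2015-03-31/functions/function/invocations"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


index_body = knn_index_body()
query_body = knn_query_body([0.1] * 512, k=3)
# The payload keys below are placeholders; the real handler defines its own schema.
request = local_lambda_request({"query": "two dogs playing in the snow", "k": 3})
```

Passing `request` to `urllib.request.urlopen` would send it once the container is running. If the index uses the plugin's default Euclidean distance, storing unit-normalized vectors gives the same ranking as cosine similarity, because for unit vectors the squared distance equals 2 minus twice the cosine.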