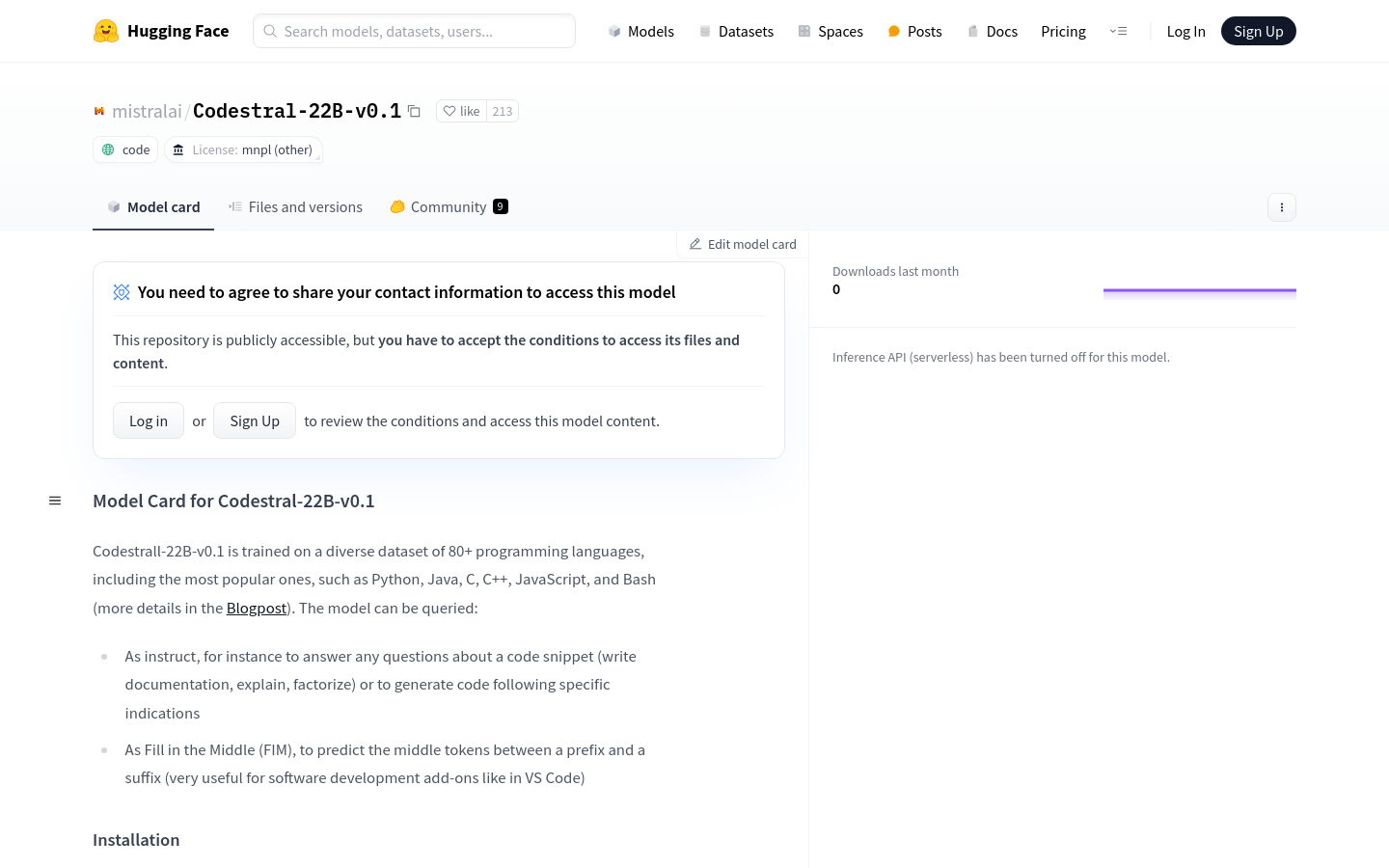

Codestral-22B-v0.1 is a large language model for code developed by the Mistral AI team. It was trained on more than 80 programming languages, including Python, Java, C, C++, JavaScript, and Bash. The model can generate code from instructions and can explain or refactor existing code snippets. It also supports Fill-in-the-Middle (FIM), which predicts the code that belongs between a given prefix and suffix. This makes it well suited to plugins for development tools such as VS Code. The model currently has no moderation mechanisms. The development team is seeking community collaboration so that it can be deployed in environments that require moderated outputs.

Target audience:

The target audience is software developers, programming educators, and researchers. The model helps developers generate code quickly and work more efficiently. It can also be used in teaching and research to help students and researchers understand and learn programming languages.

Example usage scenarios:

Generate a Rust function that computes the Fibonacci sequence

Explain and refactor a Python code snippet

Complete code automatically as a VS Code plugin
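The first scenario, asking for a Rust Fibonacci function, uses the model in instruct mode. The following is a minimal sketch, assuming `mistral_inference` and `mistral_common` are installed and the weights are already downloaded to `MODEL_DIR`, which is a hypothetical path. The import paths match recent `mistral_inference` releases. Older releases exposed `Transformer` from `mistral_inference.model`. `ask_codestral` and `extract_code_block` are illustrative helper names and are not part of either library. The imports are deferred into `ask_codestral` so the pure helper can be used on its own.

```python
import re
from pathlib import Path
from typing import Optional

# Hypothetical location of the downloaded weights; adjust to your setup.
MODEL_DIR = Path.home() / "mistral_models" / "Codestral-22B-v0.1"
FENCE = "`" * 3  # a Markdown code fence, built here to keep this listing readable


def ask_codestral(prompt: str, model_dir: Path = MODEL_DIR, max_tokens: int = 512) -> str:
    """Send a single user message in instruct mode and return the decoded reply."""
    # Deferred imports: only needed when the model is actually called.
    from mistral_common.protocol.instruct.messages import UserMessage
    from mistral_common.protocol.instruct.request import ChatCompletionRequest
    from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
    from mistral_inference.generate import generate
    from mistral_inference.transformer import Transformer

    tokenizer = MistralTokenizer.v3()
    model = Transformer.from_folder(model_dir)
    request = ChatCompletionRequest(messages=[UserMessage(content=prompt)])
    tokens = tokenizer.encode_chat_completion(request).tokens
    out_tokens, _ = generate(
        [tokens],
        model,
        max_tokens=max_tokens,
        temperature=0.0,  # greedy decoding
        eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id,
    )
    return tokenizer.decode(out_tokens[0])


def extract_code_block(reply: str, lang: str) -> Optional[str]:
    """Return the body of the first fenced block tagged `lang`, or None."""
    pattern = FENCE + re.escape(lang) + r"[ \t]*\n(.*?)" + FENCE
    match = re.search(pattern, reply, re.DOTALL)
    return match.group(1).rstrip("\n") if match else None
```

For example, `extract_code_block(ask_codestral("Write a Rust function that returns the n-th Fibonacci number."), "rust")` would return the Rust source, provided the reply contains a block fenced as `rust`. This is common but not guaranteed. For simplicity, the sketch reloads the model on every call. A real tool would load it once and reuse it.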

Product Features:

Supports code generation and code-related queries in 80+ programming languages

As an instruction-following model, it can answer questions about code snippets

Supports Fill-in-the-Middle (FIM), predicting the code between a given prefix and suffix

Suitable for integration into software development plugins, such as those for VS Code
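The FIM and plugin features fit together in an editor. A plugin can split the buffer at the cursor into the prefix and suffix of a FIM request, then splice the model's answer back in. The sketch below shows only that bookkeeping. `split_at_cursor` and `apply_completion` are hypothetical helpers and are not part of VS Code or any Mistral library. The string `"a + b"` stands in for real model output.

```python
from typing import Tuple


def split_at_cursor(buffer: str, cursor: int) -> Tuple[str, str]:
    """Split editor text at a cursor offset into (prefix, suffix) for a FIM request."""
    if not 0 <= cursor <= len(buffer):
        raise ValueError(f"cursor {cursor} is outside 0..{len(buffer)}")
    return buffer[:cursor], buffer[cursor:]


def apply_completion(buffer: str, cursor: int, middle: str) -> Tuple[str, int]:
    """Insert the predicted middle at the cursor; return (new_text, new_cursor)."""
    prefix, suffix = split_at_cursor(buffer, cursor)
    # The cursor ends up just after the inserted text, as in typical editors.
    return prefix + middle + suffix, cursor + len(middle)


# Example: the cursor sits right after "return " on the second line.
buffer = "def add(a, b):\n    return \n"
cursor = buffer.index("return ") + len("return ")
prefix, suffix = split_at_cursor(buffer, cursor)
# A FIM model would receive prefix and suffix; "a + b" stands in for its answer.
new_text, new_cursor = apply_completion(buffer, cursor, "a + b")
print(new_text)
```

Working with character offsets keeps the helpers editor-agnostic. A real extension would first convert its line/column position into such an offset.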

Model training details and more information are available on the official Mistral AI blog.

How to use:

Step 1: Install the mistral_inference package

Step 2: Use pip to install mistral_common, version 1.2 or later

Step 3: Import the necessary modules, such as Transformer and generate

Step 4: Set the model path and initialize the tokenizer

Step 5: Define the code prefix and suffix (the code before and after the gap)

Step 6: Create a FIM request and encode it

Step 7: Use the model to generate the middle section of code

Step 8: Decode and output the generated code
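Putting Steps 1-8 together gives roughly the following script. It is a sketch that relies on several assumptions:

* The weights are already downloaded to `MODEL_DIR`, which is a hypothetical path.
* The import paths (`mistral_inference.transformer`, `mistral_inference.generate`, `mistral_common.tokens.instruct.request.FIMRequest`) match recent releases. Older `mistral_inference` versions exposed `Transformer` from `mistral_inference.model`, so check the version you installed.

`version_at_least`, `extract_middle`, and `fim_complete` are illustrative names and are not part of either library. The script cuts the output at the suffix as a defensive measure, in case generation runs past the gap and repeats it.

```python
"""Fill-in-the-Middle with Codestral-22B-v0.1, following Steps 1-8.

Steps 1-2 run in a shell:
    pip install mistral_inference
    pip install --upgrade "mistral_common>=1.2"
"""
import re
from importlib.metadata import version
from pathlib import Path
from typing import Tuple

# Hypothetical path to the downloaded weights (Step 4); adjust to your setup.
MODEL_DIR = Path.home() / "mistral_models" / "Codestral-22B-v0.1"


def version_at_least(installed: str, minimum: Tuple[int, int]) -> bool:
    """True if the major.minor part of a version string is >= minimum."""
    match = re.match(r"(\d+)(?:\.(\d+))?", installed)
    if match is None:
        return False
    return (int(match.group(1)), int(match.group(2) or 0)) >= minimum


def extract_middle(decoded: str, suffix: str) -> str:
    """Keep the text generated before any repeat of the suffix (Step 8).

    Trailing whitespace is trimmed; leading indentation is kept so the result
    can be spliced between prefix and suffix.
    """
    middle = decoded.split(suffix, 1)[0] if suffix else decoded
    return middle.rstrip()


def fim_complete(prefix: str, suffix: str, model_dir: Path = MODEL_DIR,
                 max_tokens: int = 256) -> str:
    """Predict the code between prefix and suffix (Steps 3-8)."""
    # Step 2 check: FIM encoding needs mistral_common >= 1.2.
    if not version_at_least(version("mistral_common"), (1, 2)):
        raise RuntimeError("upgrade mistral_common to 1.2 or later")

    # Step 3: imports, deferred so the helpers above work without the packages.
    from mistral_common.tokens.instruct.request import FIMRequest
    from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
    from mistral_inference.generate import generate
    from mistral_inference.transformer import Transformer

    # Step 4: tokenizer and model weights.
    tokenizer = MistralTokenizer.v3()
    model = Transformer.from_folder(model_dir)

    # Step 6: build the FIM request and encode it to token ids.
    request = FIMRequest(prompt=prefix, suffix=suffix)
    tokens = tokenizer.encode_fim(request).tokens

    # Step 7: generate the middle with greedy decoding.
    out_tokens, _ = generate(
        [tokens],
        model,
        max_tokens=max_tokens,
        temperature=0.0,
        eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id,
    )

    # Step 8: decode the generated tokens and trim them to the middle.
    return extract_middle(tokenizer.decode(out_tokens[0]), suffix)


if __name__ == "__main__":
    # Step 5: the code before and after the gap to be filled.
    prefix = "def celsius_to_fahrenheit(c: float) -> float:\n"
    suffix = "\n\nprint(celsius_to_fahrenheit(100.0))\n"
    if MODEL_DIR.exists():
        print(fim_complete(prefix, suffix))
    else:
        print(f"Model weights not found in {MODEL_DIR}")
```

Loading a 22B-parameter model requires substantial GPU memory. The two pure helpers can be used and tested without the weights.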