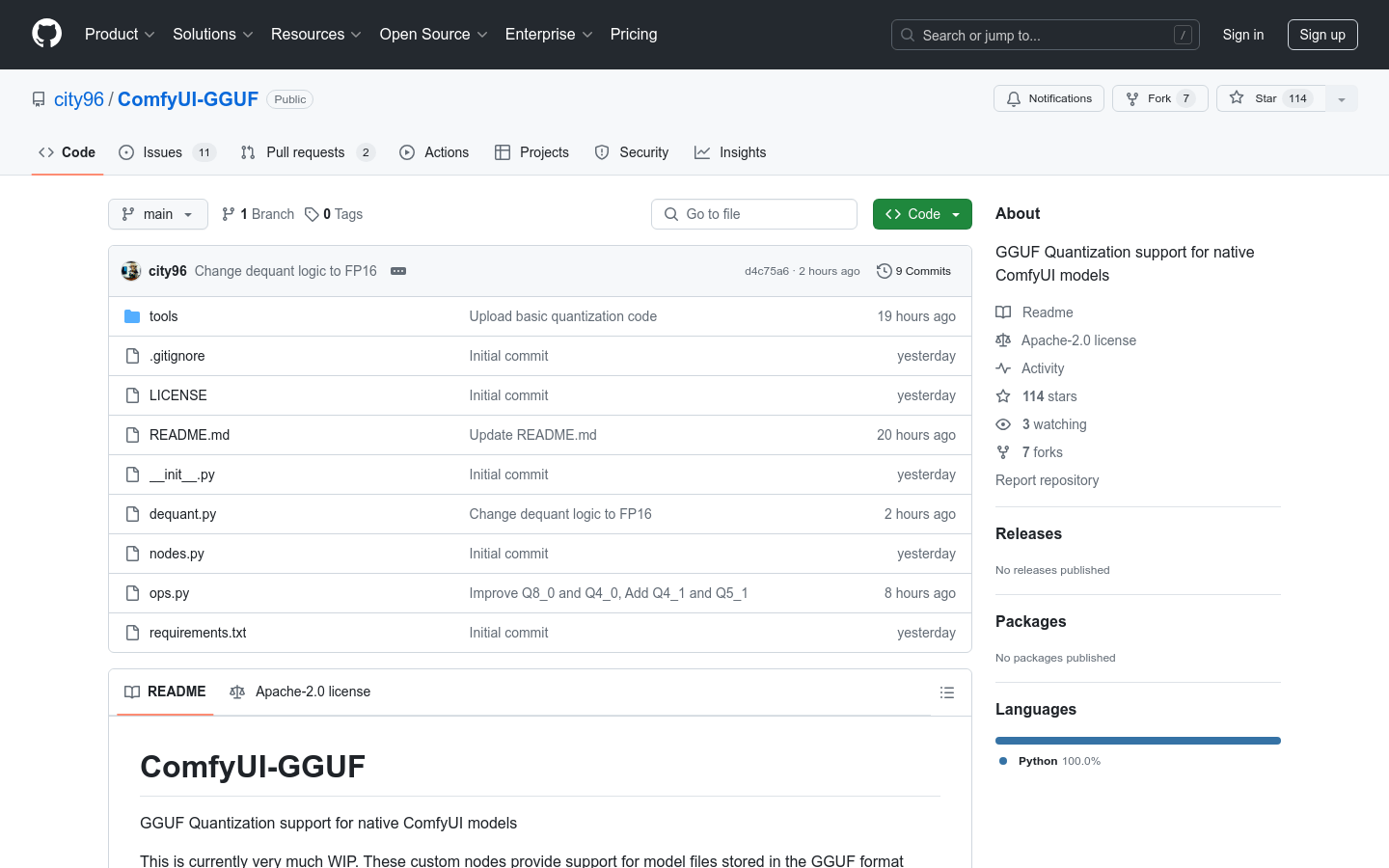

ComfyUI-GGUF is a project that adds GGUF quantization support for native ComfyUI models. It allows model files to be stored in GGUF, a format popularized by llama.cpp. Although regular UNET models (conv2d) are poorly suited to quantization, transformer/DiT models such as Flux seem to be less affected by it. This allows them to run on low-end GPUs using variable-bitrate quants with far fewer bits per weight.
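To make "fewer bits per weight" concrete, here is a rough back-of-the-envelope sketch of how weight storage scales with the quantization type. The 12-billion parameter count for Flux is an approximate figure used as an assumption here, and `estimated_size_gb` is a hypothetical helper, not part of ComfyUI-GGUF. The bits-per-weight values follow from the block layouts of the llama.cpp quantization types (for example, Q4_0 stores 32 weights in 18 bytes, or 4.5 bits per weight). The estimate ignores tensors kept at higher precision, file metadata, and memory used at run time.

```python
def estimated_size_gb(n_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: roughly 12 billion parameters for a Flux-sized model.
FLUX_PARAMS = 12e9

# Effective bits per weight, including the per-block scale factor.
BITS_PER_WEIGHT = {
    "F16": 16.0,   # unquantized half precision
    "Q8_0": 8.5,   # 32 weights in 34 bytes
    "Q4_0": 4.5,   # 32 weights in 18 bytes
}

for name, bpw in BITS_PER_WEIGHT.items():
    size = estimated_size_gb(FLUX_PARAMS, bpw)
    print(f"{name}: ~{size:.2f} GB")
```

Under these assumptions, going from F16 to Q4_0 cuts the weight storage to a little over a quarter of its original size, which is what brings a model of this scale within reach of GPUs with less memory.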

Target audience:

The target audience is mainly developers and researchers who use ComfyUI for model training and deployment. They need to optimize model performance in resource-constrained environments, and ComfyUI-GGUF helps them do so through quantization.

Example usage scenarios:

Developers use ComfyUI-GGUF to deploy Flux models on low-end GPUs and reduce resource usage.

Researchers use GGUF quantization to improve model performance on edge devices.

Educational institutions teaching deep learning use ComfyUI-GGUF as a case study in model optimization techniques.

Product features:

Supports model files quantized in the GGUF format

Suitable for transformer/DiT models such as Flux

Allows running on low-end GPUs, optimizing resource usage

Provides custom nodes for loading quantized models

Does not support LoRA, ControlNet, and similar add-ons, because the weights are quantized

Installation and usage guide provided

Usage tutorial:

1. Make sure your ComfyUI version is recent enough to support custom ops.

2. Use git to clone the ComfyUI-GGUF repository into ComfyUI's custom_nodes folder.

3. Install the dependencies required for inference (pip install --upgrade gguf).

4. Place the .gguf model file in the ComfyUI/models/unet folder.

5. Use the GGUF Unet loader, which is located under the bootleg category.

6. Adjust model parameters and settings as needed for model training or inference.
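Before opening ComfyUI, it can help to confirm that the files placed in step 4 really are GGUF files. The following is a minimal sketch using only the Python standard library. It relies on the fact that every GGUF file begins with the four ASCII bytes `GGUF`. The function name `find_gguf_models` and the relative path `ComfyUI/models/unet` are illustrative assumptions, so adjust the path to match the actual installation.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # the first four bytes of every GGUF file


def find_gguf_models(unet_dir):
    """Return (valid, invalid) lists of *.gguf file names in unet_dir.

    A file counts as valid when it starts with the GGUF magic bytes.
    Files with other extensions are ignored.
    """
    valid, invalid = [], []
    for path in sorted(Path(unet_dir).glob("*.gguf")):
        with path.open("rb") as fh:
            header = fh.read(4)
        if header == GGUF_MAGIC:
            valid.append(path.name)
        else:
            invalid.append(path.name)
    return valid, invalid


if __name__ == "__main__":
    # Assumed location relative to the current directory (hypothetical).
    unet_dir = Path("ComfyUI/models/unet")
    if unet_dir.is_dir():
        ok, bad = find_gguf_models(unet_dir)
        print("GGUF files found:", ok)
        print("Files with a .gguf extension but no GGUF header:", bad)
    else:
        print(f"{unet_dir} not found; adjust the path to your installation")
```

This only checks the file header. It does not verify that the model architecture is one the GGUF Unet loader can handle, so a file that passes may still fail to load.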