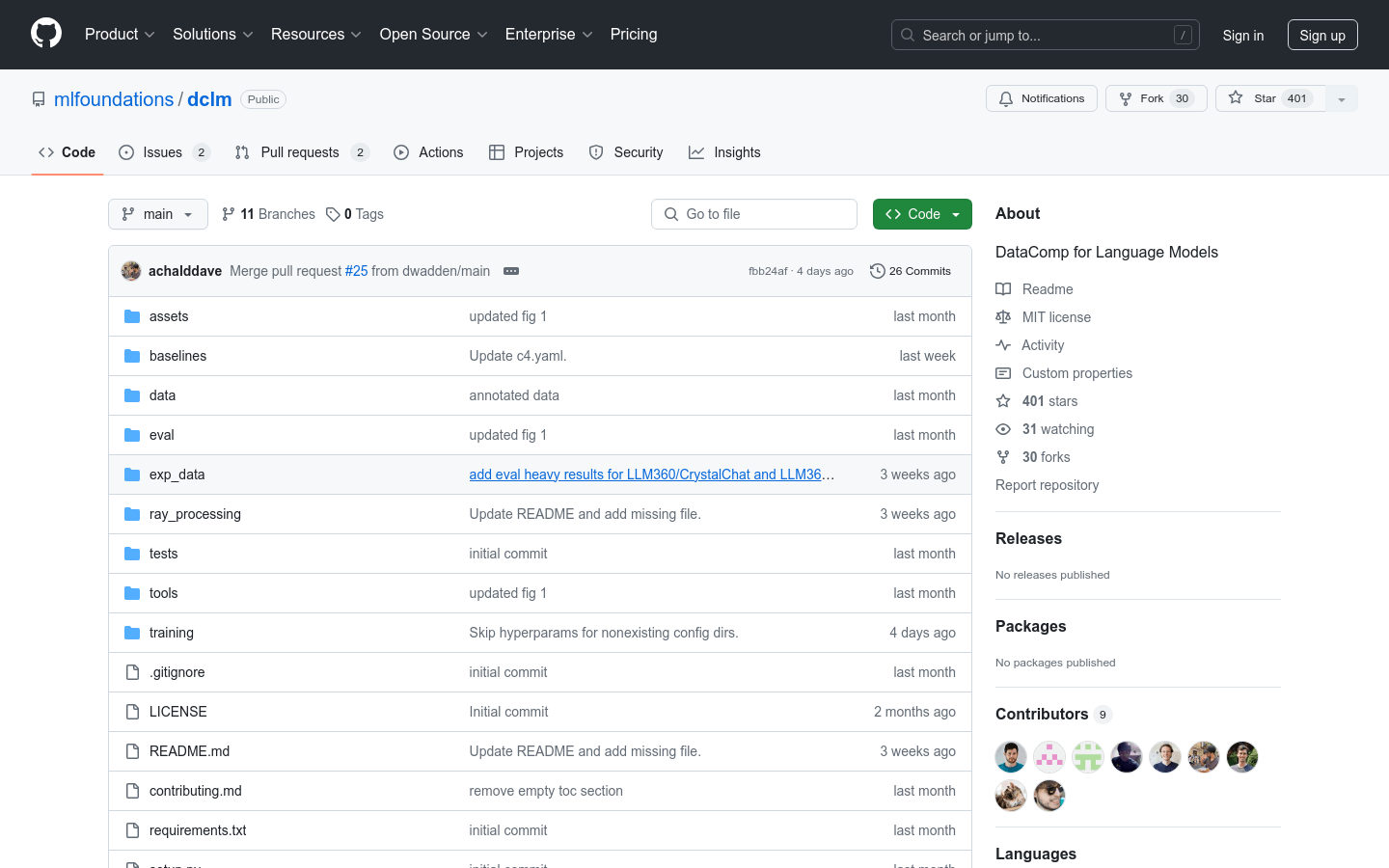

DataComp-LM (DCLM) is a framework for building and training large language models (LLMs). It provides a standardized corpus, effective pre-training recipes based on the open_lm framework, and more than 50 evaluations. DCLM lets researchers experiment with different dataset construction strategies at compute scales ranging from 411M to 7B parameter models. Its baseline experiments show that optimized dataset design significantly improves model performance, and they have produced multiple high-quality datasets that outperform all open datasets at their respective scales.

Target audience:

DCLM is intended for researchers and developers who build and train large language models, especially those who want to improve model performance by optimizing dataset design. It suits users who need to process large-scale datasets and run experiments at different compute scales.

Example usage scenarios:

Researchers used DCLM to create the DCLM-BASELINE dataset and trained models on it; these models show superior performance compared with closed-source models and with models trained on other open-source datasets.

DCLM supports training models at different scales, such as 400M-1x and 7B-2x, to adapt to different computing needs.

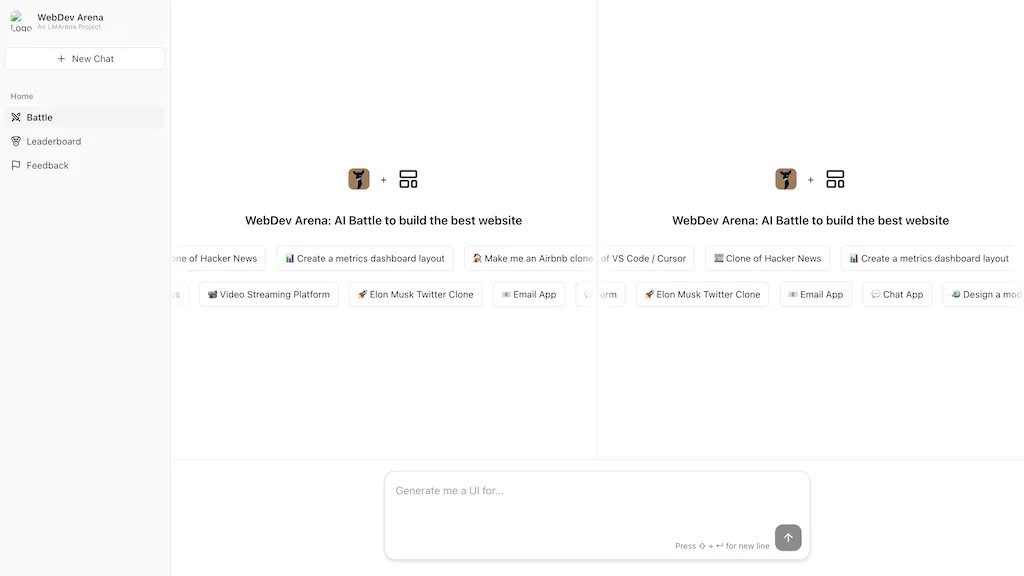

Community members submit models to DCLM’s leaderboard to show how models trained on different datasets and at different scales perform.
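The scale names mentioned above (such as 400M-1x and 7B-2x) can be read as a nominal model size plus a multiplier on the training-token budget. The sketch below works through that arithmetic. It is an interpretation rather than something stated on this page: it assumes the suffix is a multiplier on a Chinchilla-style budget of roughly 20 tokens per parameter, and the helper names `parse_scale` and `token_budget` are hypothetical, not part of DCLM.

```python
# Assumption: a Chinchilla-style budget of about 20 training tokens per parameter.
TOKENS_PER_PARAM = 20

# Suffix letters used in nominal model sizes.
UNITS = {"M": 10**6, "B": 10**9}


def parse_scale(scale):
    """Split a scale name such as '7B-2x' into (nominal parameters, multiplier)."""
    size, multiplier = scale.split("-")
    params = int(float(size[:-1]) * UNITS[size[-1]])
    return params, float(multiplier.rstrip("x"))


def token_budget(scale):
    """Approximate training tokens implied by a scale name (illustrative only)."""
    params, multiplier = parse_scale(scale)
    return int(TOKENS_PER_PARAM * params * multiplier)
```

Under these assumptions, `token_budget("400M-1x")` gives 8 billion tokens and `token_budget("7B-2x")` gives 280 billion. The names use rounded sizes, so the actual parameter counts (for example 411M rather than 400M) shift the real budgets slightly.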

Product features:

Provides a corpus of over 300T unfiltered tokens from CommonCrawl

Provides effective pre-training recipes based on the open_lm framework

Provides more than 50 evaluations for measuring model performance

Supports compute scales from 411M to 7B parameter models

Allows researchers to experiment with different dataset construction strategies

Improves model performance by optimizing dataset design

Usage tutorial:

Clone the DCLM repository locally

Install required dependencies

Set up AWS storage and a Ray distributed processing environment

Select a raw data source and create a reference JSON for it

Define data processing steps and create pipeline configuration files

Set up a Ray cluster and run data processing scripts

Tokenize and shuffle the processed data

Run the model training script using the tokenized dataset

Evaluate the trained model and submit the results to the DCLM leaderboard
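To make the "create a reference JSON" step in the tutorial above more concrete, here is a minimal sketch of writing a small JSON record that describes a raw data source. Everything in it is an assumption for illustration: the field names, the example bucket URL, and the helper names `make_reference` and `write_reference` are hypothetical and do not reproduce DCLM's actual schema, which should be taken from the repository.

```python
import json
import uuid
from pathlib import Path


def make_reference(name, dataset_url):
    """Build an illustrative reference entry for a raw data source.

    The fields below are placeholders, not DCLM's real reference format.
    """
    return {
        "uuid": str(uuid.uuid4()),   # unique ID so later steps can refer to this source
        "name": name,                # human-readable dataset name
        "dataset_url": dataset_url,  # where the raw data lives, e.g. an S3 prefix
        "tokenized": False,          # raw sources have not been tokenized yet
        "sources": [],               # upstream datasets this one was derived from
    }


def write_reference(entry, out_dir):
    """Write the entry to <out_dir>/<name>.json and return the file path."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)
    target = out_path / f"{entry['name']}.json"
    target.write_text(json.dumps(entry, indent=4))
    return target
```

A call such as `write_reference(make_reference("my_raw_source", "s3://my-bucket/raw/"), "references")` would produce `references/my_raw_source.json`. Keeping one small JSON file per dataset is a simple way to record where data came from as it moves through processing, tokenization, and training.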