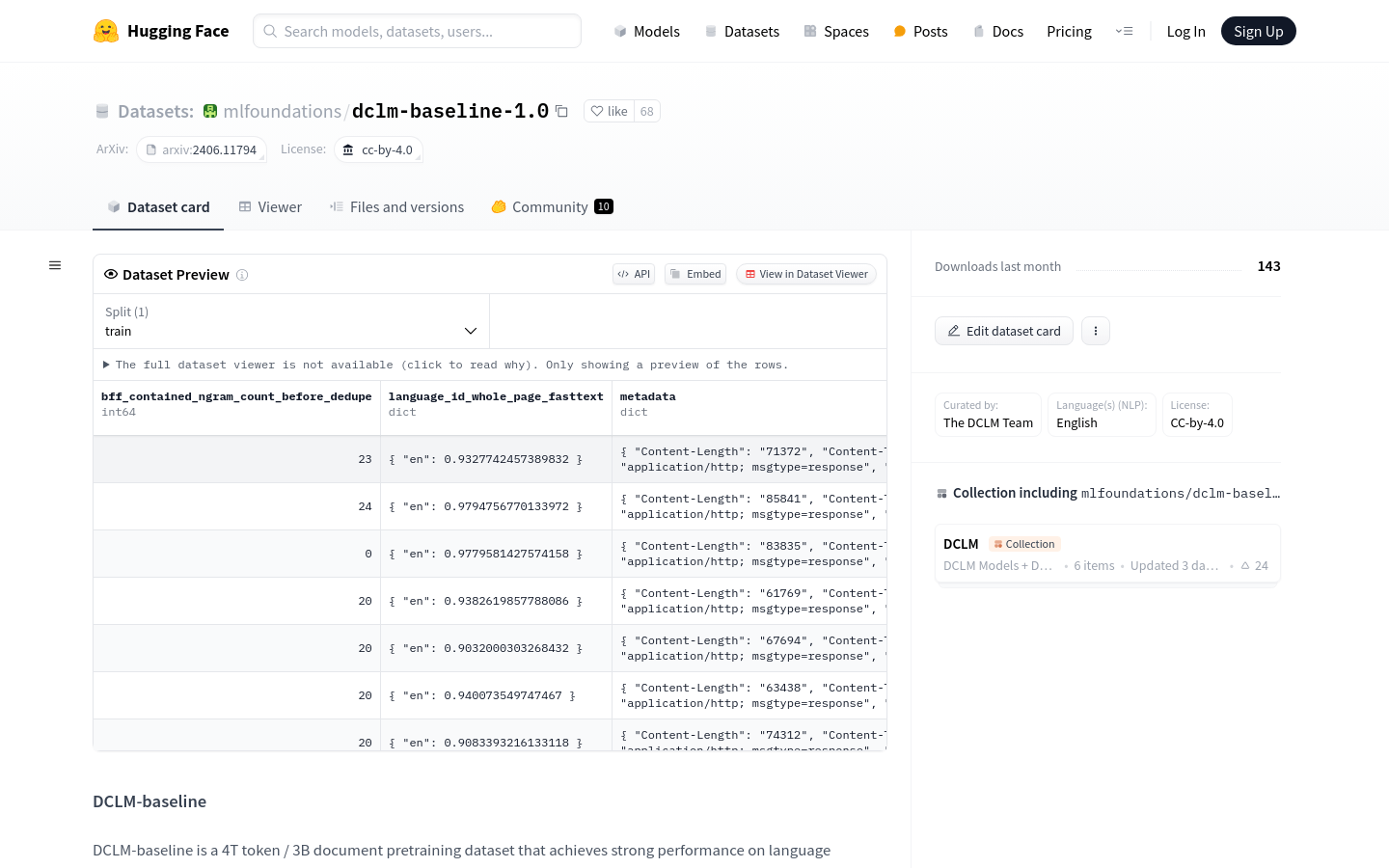

DCLM-baseline is a pretraining dataset for language model benchmarking, containing about 4T tokens and 3B documents. It is extracted from Common Crawl through a carefully designed sequence of cleaning, filtering, and deduplication steps, and it aims to demonstrate the importance of data curation in training performant language models. The dataset is intended for research use only; it is not suited to production environments or to domain-specific model training, such as for code or mathematics.
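
As a rough sense of scale, the two headline figures imply an average document length. The calculation below is only a back-of-the-envelope sketch: it assumes the rounded values quoted above (4T tokens, 3B documents), so the result is approximate.

```python
# Rounded figures quoted in the description above (assumed, not exact counts).
total_tokens = 4e12      # about 4T tokens
total_documents = 3e9    # about 3B documents

# Average document length implied by the two rounded figures.
avg_tokens_per_doc = total_tokens / total_documents
print(f"~{avg_tokens_per_doc:,.0f} tokens per document on average")
```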

Target audience:

DCLM-baseline is aimed at researchers and developers in natural language processing, who can use it to train and evaluate their own language models, particularly for benchmarking. Given its size and level of curation, it is especially suited to research projects that need large amounts of data for model training.

Example usage scenarios:

Researchers train their own language models on DCLM-baseline and compare the results across multiple benchmarks.

Educational institutions use it as a teaching resource to help students understand the construction and training process of language models.

Enterprises use the dataset to test model performance and improve their natural language processing products.

Product features:

Curated pretraining dataset for language model benchmarking

Contains about 4T tokens and 3B documents, suitable for large-scale training

Cleaned, filtered, and deduplicated to improve data quality

Provides a benchmark for studying language model performance

Not suitable for production environments or domain-specific model training

Helps researchers understand the impact of data curation on model performance

Promotes the research and development of efficient language models
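
To make the "cleaning, filtering and deduplication" feature concrete, here is a toy sketch of those three stages in Python. It is not the actual DCLM pipeline: the whitespace normalization, the `min_words` threshold, and the exact-match hashing are all illustrative assumptions chosen to keep the example small.

```python
import hashlib
from typing import Iterable, Iterator


def clean_filter_dedup(docs: Iterable[str], min_words: int = 5) -> Iterator[str]:
    """Toy curation pass: normalize whitespace, drop short docs, drop exact duplicates."""
    seen = set()
    for doc in docs:
        # Cleaning: collapse runs of whitespace into single spaces.
        text = " ".join(doc.split())
        # Filtering: hypothetical length threshold, not a DCLM setting.
        if len(text.split()) < min_words:
            continue
        # Deduplication: exact match on the case-folded, normalized text.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield text


raw = [
    "Hello   world, this is a test page.",
    "hello world, this is a test page.",   # duplicate after normalization
    "Too short",                            # fails the length filter
    "Another distinct document with enough words.",
]
kept = list(clean_filter_dedup(raw))
print(len(kept), "of", len(raw), "documents kept")
```

Real web-scale curation typically relies on approximate deduplication and learned quality filters rather than exact hashes and word counts; the sketch only shows how the stages compose.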

Usage tutorial:

Step 1: Visit the Hugging Face website and search for the DCLM-baseline dataset.

Step 2: Read the dataset description and usage guide to understand the structure and characteristics of the dataset.

Step 3: Download the dataset and prepare the computing resources required for model training.

Step 4: Use the dataset to train the language model, monitoring the training process and model performance.

Step 5: After training completes, use the DCLM-baseline dataset to evaluate and test the model.

Step 6: Analyze the test results and adjust model parameters or training strategies as needed.

Step 7: Apply the trained model to practical problems or further research.
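
Steps 1 to 3 can be sketched with the Hugging Face `datasets` library. Because the dataset is very large, this example streams records instead of downloading everything first. The repository id `mlfoundations/dclm-baseline-1.0` and the `text` field name are assumptions here and should be checked against the dataset page; `preview_texts`, `stream_dclm`, and `main` are hypothetical helper names.

```python
from itertools import islice
from typing import Dict, Iterable, List

# Assumed Hub repository id; verify it on the dataset page before use.
DATASET_ID = "mlfoundations/dclm-baseline-1.0"


def preview_texts(records: Iterable[Dict], n: int = 3, text_key: str = "text") -> List[str]:
    """Return the text field of the first n records from any iterable of dict rows."""
    return [row[text_key] for row in islice(records, n)]


def stream_dclm():
    """Open the dataset in streaming mode so records are fetched lazily."""
    from datasets import load_dataset  # requires: pip install datasets
    return load_dataset(DATASET_ID, split="train", streaming=True)


def main() -> None:
    """Print a short preview of the first few documents (needs network access)."""
    for text in preview_texts(stream_dclm(), n=3):
        print(text[:200].replace("\n", " "))
```

Calling `main()` prints the first 200 characters of three documents. `preview_texts` works on any iterable of dictionaries, so it can be tried on a small in-memory list before touching the full dataset.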