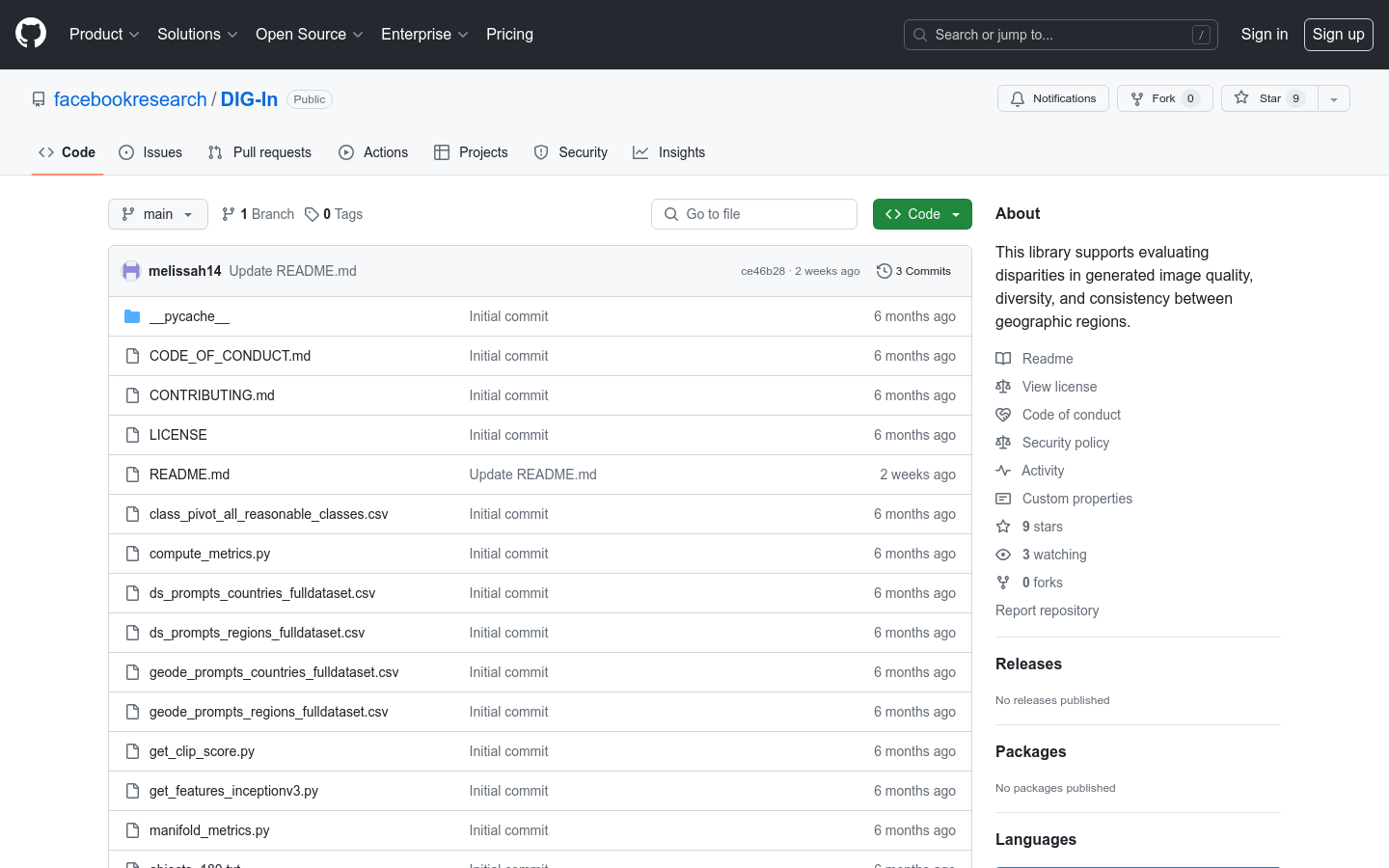

DIG-In is a library for evaluating disparities in the quality, diversity, and consistency of text-to-image generation models across geographic regions. With GeoDE and DollarStreet as reference datasets, it extracts features from generated images to compute precision, recall, coverage, and density metrics. It also uses CLIPScore to measure how consistent images are with their prompts. The library helps researchers and developers audit the geographic diversity of image generation models so they can check fairness and inclusion at a global scale.

Target audience:

DIG-In suits researchers and developers who need to evaluate and ensure consistent performance of their image generation models around the world. It is especially useful when the fairness and inclusion of models across different cultural and geographic contexts matter.

Example usage scenarios:

Researchers use DIG-In to compare the output quality of different image generation models for the Africa region.

Developers use DIG-In to ensure that their applications provide a consistent user experience worldwide.

Educational institutions use DIG-In as a teaching tool to show students how to evaluate and improve the fairness of AI models.

Product Features:

Evaluates regional differences in the quality of generated images using the GeoDE and DollarStreet datasets.

Computes precision, recall, coverage, and density metrics for generated images.
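To make these four metrics concrete, here is a minimal pure-Python sketch of the common k-nearest-neighbour formulation (as in Naeem et al., 2020, "Reliable Fidelity and Diversity Metrics for Generative Models"). It assumes DIG-In follows this standard formulation; the function names `kth_nn_radii` and `knn_prdc` are hypothetical, not DIG-In's API. Each real feature vector gets a ball whose radius is the distance to its k-th nearest real neighbour. Precision counts generated samples that land in some real ball, and recall is the same test with the roles swapped. Density counts how many real balls each generated sample falls into, and coverage counts real balls that contain at least one generated sample.

```python
import math
from typing import Dict, List, Sequence

Point = Sequence[float]


def kth_nn_radii(points: List[Point], k: int) -> List[float]:
    """Distance from each point to its k-th nearest neighbour, excluding itself."""
    radii = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        radii.append(dists[k - 1])
    return radii


def knn_prdc(real: List[Point], fake: List[Point], k: int = 5) -> Dict[str, float]:
    """Precision, recall, density and coverage from k-NN balls (hypothetical helper)."""
    real_radii = kth_nn_radii(real, k)
    fake_radii = kth_nn_radii(fake, k)

    # Precision: share of generated samples inside at least one real ball.
    precision = sum(
        any(math.dist(y, x) < r for x, r in zip(real, real_radii)) for y in fake
    ) / len(fake)
    # Recall: share of real samples inside at least one generated ball.
    recall = sum(
        any(math.dist(x, y) < r for y, r in zip(fake, fake_radii)) for x in real
    ) / len(real)
    # Density: number of real balls containing each generated sample, averaged, divided by k.
    density = sum(
        sum(math.dist(y, x) < r for x, r in zip(real, real_radii)) for y in fake
    ) / (k * len(fake))
    # Coverage: share of real balls that contain at least one generated sample.
    coverage = sum(
        any(math.dist(y, x) < r for y in fake) for x, r in zip(real, real_radii)
    ) / len(real)
    return {"precision": precision, "recall": recall,
            "density": density, "coverage": coverage}
```

The points here stand in for image feature vectors. In practice they would come from a pretrained image encoder, and `k` must be smaller than the number of samples in each set. This brute-force version is quadratic in the number of samples, so a real evaluation would use a vectorized or approximate nearest-neighbour search.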

Evaluates the consistency of generated images with their prompts using CLIPScore.
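CLIPScore (Hessel et al., 2021) rescales the cosine similarity between a CLIP image embedding and a CLIP text embedding: CLIPScore = w · max(cos(E_image, E_text), 0). The original paper uses w = 2.5, while some implementations, such as torchmetrics, scale by 100 instead. Which scaling DIG-In uses is not stated here. The sketch below assumes the embeddings were already produced by a CLIP model, which is not shown, and computes only the score. `clip_score` is a hypothetical helper name.

```python
import math
from typing import Sequence


def clip_score(image_emb: Sequence[float], text_emb: Sequence[float], w: float = 2.5) -> float:
    """w * max(cosine similarity, 0) between precomputed CLIP embeddings."""
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm = math.hypot(*image_emb) * math.hypot(*text_emb)
    cosine = dot / norm
    # Negative similarities are clipped to 0, so the score lies in [0, w].
    return w * max(cosine, 0.0)
```

A per-model or per-region consistency figure would then be the mean of `clip_score` over all (image, prompt) pairs in that group.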

Provides scripts for extracting features from generated images.

Lets users point the scripts to custom image or feature paths.

Provides scripts for computing metrics, including metrics against balanced reference datasets.
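The source does not define "balanced". One plausible reading is a reference set with the same number of images in every group, for example per region or per (region, object) pair, so that no group dominates the nearest-neighbour balls. The sketch below implements that assumption by randomly downsampling every group to the size of the smallest one. The helper `balanced_subsample` is hypothetical and is not taken from DIG-In.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def balanced_subsample(items: Sequence[T], key: Callable[[T], Hashable], seed: int = 0) -> List[T]:
    """Downsample every group to the size of the smallest group (hypothetical helper)."""
    groups = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)
    n = min(len(group) for group in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the reference set reproducible
    balanced: List[T] = []
    for group in groups.values():
        balanced.extend(rng.sample(group, n))
    return balanced
```

For instance, calling it on `(region, image_id)` tuples with `key=lambda item: item[0]` keeps the same number of images for each region.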

Tutorials for use:

1. Generate images for the prompts in the provided CSV file.

2. Point the feature-extraction script to the prompt CSV and the folder of generated images to extract image features.

3. Use the extracted features to compute metrics, including precision, recall, coverage, and density.

4. Update the feature file paths as needed.

5. Run the metric scripts, including those that use balanced reference datasets.

6. Analyze the metric results in the output CSV file to evaluate model performance.
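For step 6, one simple analysis is to average a metric per region and report the gap between the best and worst region. The sketch below assumes the output CSV has a `region` column and one column per metric. These column names, the helper `region_gap`, and the toy numbers are hypothetical and illustrative only. They are not DIG-In's actual output format or results.

```python
import csv
import io
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def region_gap(lines: Iterable[str], metric: str) -> Tuple[Dict[str, float], float]:
    """Per-region mean of `metric` and the best-minus-worst regional gap."""
    by_region: Dict[str, List[float]] = defaultdict(list)
    for row in csv.DictReader(lines):
        by_region[row["region"]].append(float(row[metric]))
    means = {region: mean(values) for region, values in by_region.items()}
    return means, max(means.values()) - min(means.values())


# Made-up toy input, only to show the call shape.
toy = io.StringIO("region,precision\nAfrica,0.4\nAfrica,0.6\nEurope,0.8\n")
means, gap = region_gap(toy, "precision")
print(means, round(gap, 2))
```

With a real results file, open it with `open(path, newline="")` and pass the file object in place of `toy`. A large gap for a metric points to the regions where the model underperforms.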