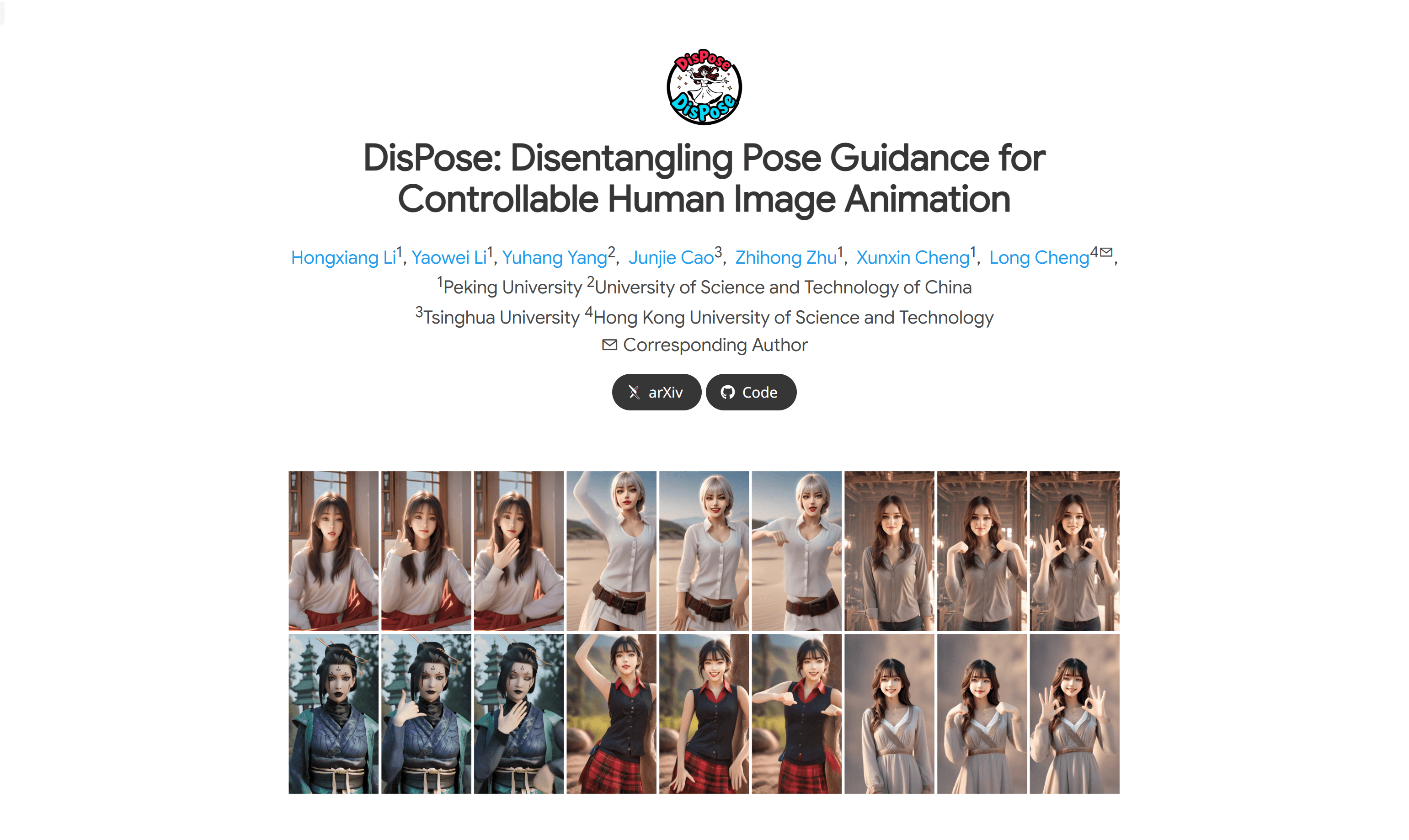

DisPose is a method for controllable human image animation. It improves the quality of generated videos through motion field guidance and keypoint correspondence. Given a reference image and a driving video, it generates a video whose motion stays aligned with the driving video and whose identity stays consistent with the reference image. DisPose generates a dense motion field from a sparse motion field and the reference image. This provides region-level dense guidance while preserving the generalization ability of sparse pose control. It also extracts diffusion features corresponding to pose keypoints from the reference image and transfers these point features to the target pose to provide distinct identity information. Key benefits of DisPose include extracting more general and effective control signals without additional dense inputs. It also improves the quality and consistency of generated videos through a plug-and-play hybrid ControlNet while keeping existing model parameters frozen.
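To illustrate what "dense motion from sparse motion" means, the sketch below spreads a few keypoint displacements over every pixel of a grid using inverse-distance weighting (IDW). IDW is a simple stand-in chosen only for illustration. It is not DisPose's method, and unlike DisPose it ignores the reference image. The function name `densify_motion` and its parameters are hypothetical.

```python
# Hypothetical sketch: densify a sparse motion field with inverse-distance
# weighting (IDW). This is NOT DisPose's method; IDW is a simple stand-in used
# only to illustrate sparse-to-dense propagation, and it ignores the reference
# image that DisPose also conditions on.
from typing import Dict, List, Tuple

Point = Tuple[int, int]       # (x, y) pixel position of a keypoint
Vector = Tuple[float, float]  # (dx, dy) displacement between frames


def densify_motion(
    sparse: Dict[Point, Vector], width: int, height: int, power: float = 2.0
) -> List[List[Vector]]:
    """Return a height x width grid of motion vectors interpolated from sparse ones."""
    if not sparse:
        raise ValueError("need at least one sparse motion vector")
    dense: List[List[Vector]] = []
    for y in range(height):
        row: List[Vector] = []
        for x in range(width):
            if (x, y) in sparse:
                # Keypoint pixels keep their known motion exactly.
                row.append(sparse[(x, y)])
                continue
            w_sum = dx_sum = dy_sum = 0.0
            for (px, py), (dx, dy) in sparse.items():
                dist2 = (x - px) ** 2 + (y - py) ** 2
                w = 1.0 / dist2 ** (power / 2)  # nearer keypoints weigh more
                w_sum += w
                dx_sum += w * dx
                dy_sum += w * dy
            row.append((dx_sum / w_sum, dy_sum / w_sum))
        dense.append(row)
    return dense


# Two keypoints moving differently; the pixel halfway between them blends both.
field = densify_motion({(0, 0): (1.0, 0.0), (4, 0): (0.0, 2.0)}, width=5, height=1)
print(field[0][2])  # (0.5, 1.0)
```

Every pixel now carries a motion vector, which is the kind of region-level guidance a sparse skeleton alone cannot give. A learned model can additionally use the reference image to keep motion coherent within body parts.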

Target audience:

"DisPose's target audience is researchers and developers in computer vision and image animation, especially professionals who need to generate high-quality, highly controllable human animation videos. The technology suits them because it offers a way to generate realistic animations without complex inputs while preserving the variety and personalization of the generated content."

Usage scenario examples:

1. Use DisPose to animate a static picture of a character into a video of that character walking.

2. Use DisPose to transfer the movements of one character to another character model, so the motion carries over seamlessly.

3. In film production, DisPose can be used to generate complex character action scenes, reducing the cost and time of actual shooting.

Product features:

- Motion field guidance: Generates dense motion fields from sparse motion fields and reference images, providing region-level dense guidance.

- Keypoint correspondence: Extracts diffusion features corresponding to pose keypoints and transfers them to the target pose.

- Hybrid ControlNet: plug-and-play module that improves video generation quality without modifying existing model parameters.

- Video generation: Uses reference images and driving videos to generate new videos while keeping motion alignment and identity consistent.

- Improved quality and consistency: Videos generated with DisPose are reported to surpass those of existing methods in quality and consistency.

- No need for additional dense inputs: Reduces dependence on extra dense inputs such as depth maps, which improves the model's generalization ability.

- Plug-in integration: Can be easily integrated into existing image animation methods to improve performance.
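The keypoint correspondence feature can be pictured with a small sketch. It looks up the reference feature at each joint's position in the reference pose, then writes that feature at the same joint's position in the target pose. The result is a sparse, target-aligned map of identity features. In DisPose these features come from a diffusion model. Here they are plain nested lists, and nearest-pixel lookup is a simplifying assumption. The helper `transfer_point_features` and its argument layout are hypothetical.

```python
# Hypothetical sketch of keypoint correspondence: copy the reference feature
# found at each reference keypoint to the same joint's position in the target
# pose. In DisPose the features come from a diffusion model; here they are
# plain nested lists, and nearest-pixel lookup stands in for any resampling.
from typing import Dict, List, Optional, Tuple

Feature = List[float]
FeatureMap = List[List[Feature]]        # indexed [y][x] -> feature vector
Keypoints = Dict[str, Tuple[int, int]]  # joint name -> (x, y) pixel position


def transfer_point_features(
    ref_features: FeatureMap,
    ref_kpts: Keypoints,
    target_kpts: Keypoints,
    height: int,
    width: int,
) -> List[List[Optional[Feature]]]:
    """Build a sparse target-aligned map; positions without a keypoint stay None."""
    out: List[List[Optional[Feature]]] = [[None] * width for _ in range(height)]
    for joint, (rx, ry) in ref_kpts.items():
        if joint not in target_kpts:
            continue  # joint is not present in the target pose
        tx, ty = target_kpts[joint]
        if 0 <= tx < width and 0 <= ty < height:
            # Copy so later edits to the output do not alias the reference map.
            out[ty][tx] = list(ref_features[ry][rx])
    return out


# 3x3 reference map with 1-dimensional features equal to x + 10 * y.
ref = [[[float(x + 10 * y)] for x in range(3)] for y in range(3)]
sparse = transfer_point_features(
    ref,
    ref_kpts={"head": (1, 0), "hand": (2, 2)},
    target_kpts={"head": (0, 1), "hand": (1, 2)},
    height=3,
    width=3,
)
print(sparse[1][0], sparse[2][1])  # [1.0] [22.0]
```

Because the features are keyed by joint rather than by pixel, the appearance of the reference "head" follows the head wherever the target pose places it. This is the sense in which point features carry identity information to the new pose.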

Usage tutorial:

1. Visit DisPose's official website and download the relevant code.

2. Read the documentation to learn how to configure the environment and dependencies.

3. Prepare a reference image and a driving video that meet DisPose's input requirements.

4. Run the DisPose code with the reference image and driving video as input.

5. Observe the generated video to check the consistency of motion alignment and identity information.

6. If necessary, adjust DisPose's parameters to improve the generated video.

7. Use the generated videos for further research or commercial purposes.
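Step 5 can be made more concrete with a simple numeric check of motion alignment. The sketch below computes the mean distance between corresponding joints in the driving video's poses and the poses of the generated frames. It assumes the generated-frame poses come from a separate pose estimator, which is not shown. The metric and the helper `mean_keypoint_error` are illustrative assumptions, not DisPose's evaluation protocol.

```python
# Hypothetical motion-alignment check: mean Euclidean distance between matching
# joints of the driving poses and the poses detected on generated frames.
# Pose detection itself is assumed to happen elsewhere.
import math
from typing import Dict, List, Tuple

Pose = Dict[str, Tuple[float, float]]  # joint name -> (x, y) in pixels


def mean_keypoint_error(driving: List[Pose], generated: List[Pose]) -> float:
    """Average joint distance over all frames; lower means closer motion alignment."""
    if len(driving) != len(generated):
        raise ValueError("frame counts differ")
    total, count = 0.0, 0
    for d_pose, g_pose in zip(driving, generated):
        for joint, (dx, dy) in d_pose.items():
            if joint in g_pose:  # skip joints missing from the generated frame
                gx, gy = g_pose[joint]
                total += math.hypot(gx - dx, gy - dy)
                count += 1
    if count == 0:
        raise ValueError("no shared joints to compare")
    return total / count


driving = [{"head": (10.0, 5.0), "hand": (20.0, 30.0)}]
generated = [{"head": (13.0, 9.0), "hand": (20.0, 30.0)}]
print(mean_keypoint_error(driving, generated))  # 2.5
```

A number like this complements visual inspection but does not measure identity consistency. Checking that requires comparing appearance against the reference image.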