What is DynamicControl?

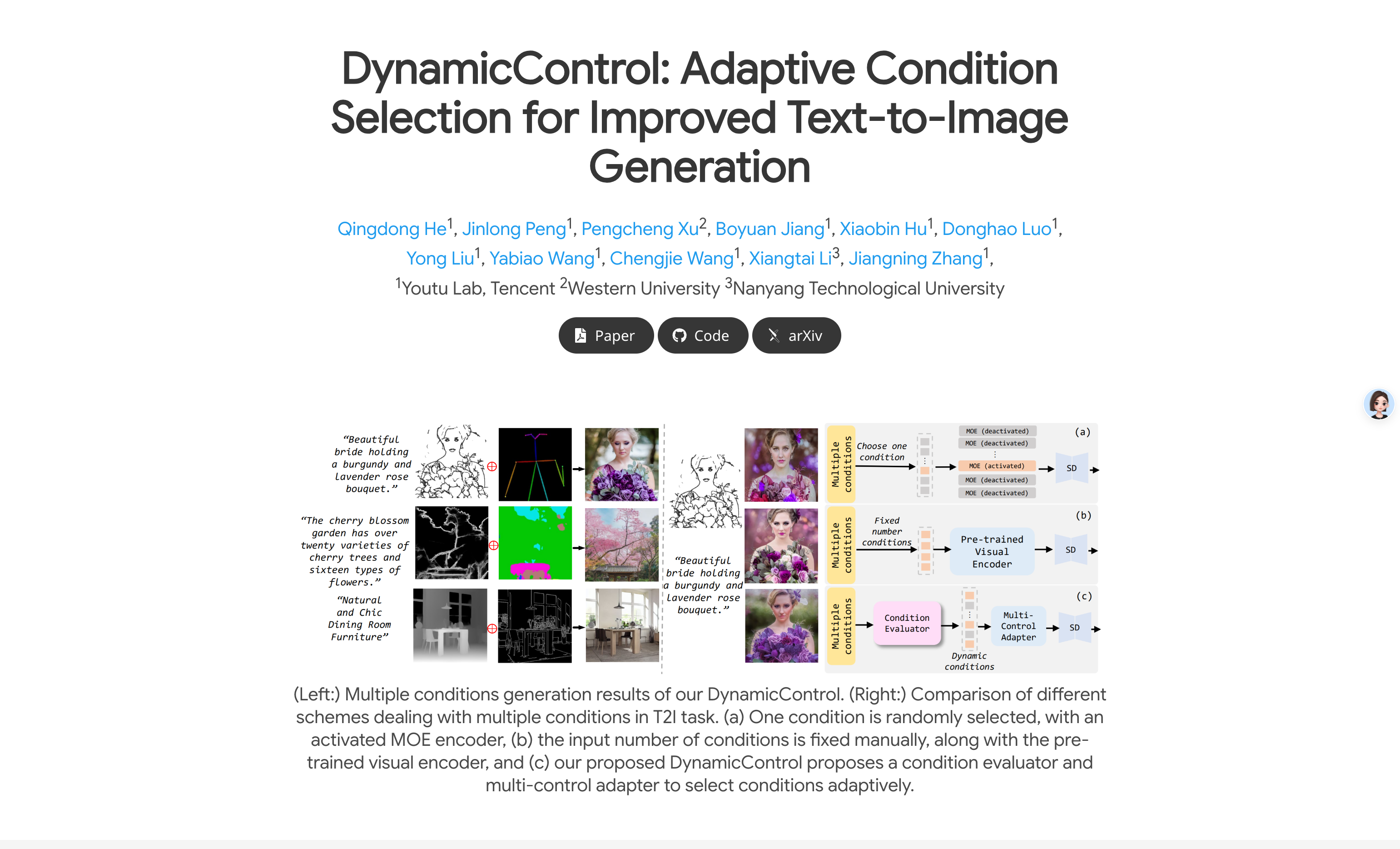

DynamicControl is a framework that enhances text-to-image diffusion models by dynamically combining diverse control signals. It adaptively selects how many conditions to use and which types, improving the reliability and detail of image synthesis. The framework first uses a double-loop controller, built on pre-trained conditional generation models and discriminative models, to produce an initial ranking of the conditions by real score. It then uses a multimodal large language model (MLLM) to optimize this ranking, building an efficient condition evaluator. DynamicControl optimizes the MLLM and the diffusion model jointly, leveraging the MLLM's reasoning capabilities to improve multi-condition text-to-image tasks.
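The ranking step can be illustrated with a toy sketch. It shows one plausible reading of the double-loop controller, not the project's actual code: each condition is turned into an image by a generation model, a discriminative model recovers the condition from that image, and the condition is scored by how well the round trip agrees. The names `similarity`, `rank_conditions`, `generate`, and `extractors` are hypothetical, images and conditions are plain lists of floats, and summing the two consistency scores is an assumption.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def similarity(a: Vector, b: Vector) -> float:
    """Toy similarity in [0, 1]: 1 minus the mean absolute difference, floored at 0."""
    diff = sum(abs(x - y) for x, y in zip(a, b)) / len(a)
    return max(0.0, 1.0 - diff)


def rank_conditions(
    source_image: Vector,
    conditions: Dict[str, Vector],
    generate: Callable[[Vector], Vector],
    extractors: Dict[str, Callable[[Vector], Vector]],
) -> Tuple[List[str], Dict[str, float]]:
    """Score every condition and return (names sorted best-first, raw scores)."""
    scores: Dict[str, float] = {}
    for name, cond in conditions.items():
        # First pass: condition -> image (stand-in for a pre-trained generator).
        generated = generate(cond)
        # Second pass: image -> condition (stand-in for a discriminative model).
        recovered = extractors[name](generated)
        # Assumption: the score is the sum of condition and image consistency.
        cond_score = similarity(cond, recovered)
        image_score = similarity(source_image, generated)
        scores[name] = cond_score + image_score
    ranking = sorted(scores, key=lambda n: scores[n], reverse=True)
    return ranking, scores
```

In a real setup the stand-in callables would wrap actual models. The resulting order is only the starting point that the MLLM-based evaluator would then refine.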

Who Can Use DynamicControl?

DynamicControl is aimed at researchers and developers working in image generation, particularly those who need higher precision and control in text-to-image tasks. It offers a new way to handle complex multi-condition scenarios, including potential conflicts between conditions, which makes it suitable for users who require high-quality, tightly controlled images.

Example Scenarios:

Researchers can use DynamicControl to generate specific styles of images, such as landscapes or portraits.

Developers can optimize their image generation applications to meet various user needs and conditions.

Educational institutions can use DynamicControl as a teaching tool to demonstrate how control signals influence image generation processes.

Key Features:

Double-loop Controller: Produces an initial ranking of conditions by real score, using pre-trained conditional generation and discriminative models.

Condition Evaluator: Optimizes condition order based on score rankings from the double-loop controller.

Multi-condition Text-to-Image Tasks: Enhances control through joint optimization of MLLM and diffusion models.

Parallel Multi-control Adapters: Learn feature maps for the dynamically selected visual conditions and integrate them to modulate ControlNet.

Adaptive Condition Selection: Dynamically selects how many conditions to use and which types, improving the reliability and detail of image synthesis.

Enhanced Control: Improves control over generated images through dynamic condition selection and feature map learning.
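Adaptive condition selection and feature-map integration can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the framework's implementation: the evaluator's output is taken to be one score per condition, selection is a plain threshold with an optional cap, and fusion is a softmax-weighted sum of feature maps represented as flat lists. The function names, the `threshold` parameter, and the `max_conditions` parameter are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def select_conditions(
    scores: Dict[str, float],
    threshold: float = 0.5,
    max_conditions: Optional[int] = None,
) -> List[str]:
    """Keep conditions scoring at least `threshold`, best first, optionally capped."""
    ordered = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    kept = [name for name, score in ordered if score >= threshold]
    return kept[:max_conditions]  # a cap of None keeps everything


def fuse_feature_maps(
    feature_maps: Dict[str, Sequence[float]],
    scores: Dict[str, float],
) -> Tuple[List[float], Dict[str, float]]:
    """Blend per-condition feature maps using softmax weights over their scores."""
    if not feature_maps:
        raise ValueError("at least one feature map is required")
    names = list(feature_maps)
    exps = [math.exp(scores[n]) for n in names]
    total = sum(exps)
    weights = {n: e / total for n, e in zip(names, exps)}
    length = len(feature_maps[names[0]])
    fused = [
        sum(weights[n] * feature_maps[n][i] for n in names)
        for i in range(length)
    ]
    return fused, weights
```

Because the number of kept conditions depends on the scores, the same code handles one, two, or many control signals without a fixed-size input.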

Getting Started Tutorial:

1. Visit the DynamicControl project page to learn about its background and features.

2. Download and install the required pre-trained conditional generation models and discriminative models.

3. Set up the double-loop controller and condition evaluator according to the project documentation.

4. Use the MLLM to optimize the condition ranking for your specific image generation task.

5. Input sorted conditions into parallel multi-control adapters to learn feature maps.

6. Adjust ControlNet to generate images with desired attributes.

7. Fine-tune conditions and parameters based on results to optimize image generation.
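The final tuning step can be approached as a small search over candidate settings. The sketch below is a generic illustration rather than part of DynamicControl: `sweep_settings` and the `evaluate` callback are hypothetical, and `evaluate` is assumed to run one generation with the given conditions and control scale and return a quality score where higher is better.

```python
from itertools import product
from typing import Callable, Iterable, Tuple

ConditionSet = Tuple[str, ...]


def sweep_settings(
    condition_sets: Iterable[ConditionSet],
    scales: Iterable[float],
    evaluate: Callable[[ConditionSet, float], float],
) -> Tuple[float, ConditionSet, float]:
    """Try every (condition set, scale) pair and return the best (score, set, scale)."""
    best = None
    for conds, scale in product(condition_sets, scales):
        score = evaluate(conds, scale)
        # Keep the first candidate seen, then replace it only on a strictly better score.
        if best is None or score > best[0]:
            best = (score, conds, scale)
    if best is None:
        raise ValueError("no candidate settings were provided")
    return best
```

An exhaustive sweep like this is only practical for a handful of candidates, since each evaluation implies a full image generation.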