What is ExaOne 3.5?

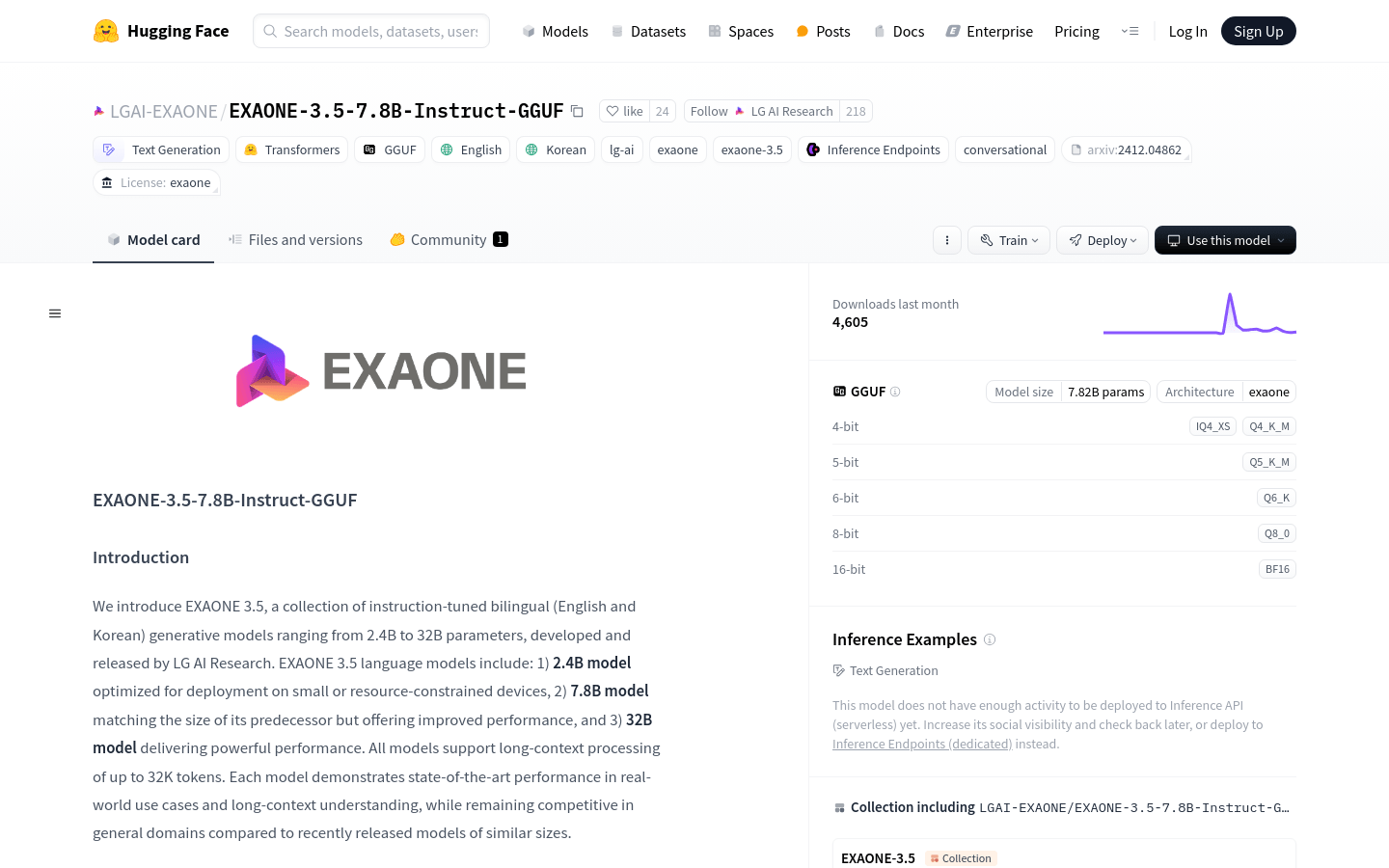

ExaOne 3.5 is a series of bilingual (English and Korean) instruction-tuned language models developed by LG AI Research. The series comes in three sizes (2.4B, 7.8B, and 32B parameters), and all of them support long-context processing of up to 32,000 tokens. The models achieve state-of-the-art performance on real-world use cases and long-context understanding, while remaining competitive in general domains with recently released models of similar size.

Who can benefit from ExaOne 3.5?

ExaOne 3.5 is aimed at researchers and developers who need a capable language model on resource-constrained devices, and at application developers who work with long-context inputs or generate text in both English and Korean. Its long context window makes it a good fit for tasks that involve large documents and complex language processing.

What are some use cases for ExaOne 3.5?

Researchers can use ExaOne 3.5 for semantic understanding and analysis of long texts.

Developers can leverage ExaOne 3.5 to build bilingual (English and Korean) conversational systems.

Enterprises can optimize their customer service automation processes using ExaOne 3.5.
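A conversational system has to keep its chat history inside the 32,000-token context window. The sketch below shows one simple way to do that: keep the system prompt, then keep the newest turns until the token budget runs out. It is a minimal illustration, not part of ExaOne 3.5 itself. The function name trim_history and its parameters are hypothetical, and count_tokens is an assumed hook that in practice should wrap the model's own tokenizer, because English and Korean text tokenize very differently.

```python
from typing import Callable, Dict, List

# A chat message, e.g. {"role": "user", "content": "안녕하세요"}.
Message = Dict[str, str]


def trim_history(
    messages: List[Message],
    count_tokens: Callable[[str], int],
    context_limit: int = 32_000,
    reserve_for_reply: int = 1_024,
) -> List[Message]:
    """Keep the system prompt plus the most recent turns that fit the budget.

    count_tokens is a hypothetical hook; it should wrap the model's tokenizer.
    Per-message chat-template tokens are ignored here, so reserve_for_reply
    should be generous enough to absorb that overhead as well as the reply.
    """
    budget = context_limit - reserve_for_reply
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    used = sum(count_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk from the newest turn backwards so recent context wins.
    for m in reversed(turns):
        cost = count_tokens(m["content"])
        if used + cost > budget:
            break  # stop here so the kept history stays contiguous
        kept.append(m)
        used += cost
    return system + list(reversed(kept))
```

Trimming stops at the first turn that does not fit instead of skipping it, so the model never sees a history with a gap in the middle. A whitespace word count (lambda t: len(t.split())) works as a stand-in counter for quick experiments, but it underestimates token counts, especially for Korean.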

What are the key features of ExaOne 3.5?

Supports up to 32,000 tokens for long context processing.

Demonstrates leading performance in real-world applications and long-context understanding.

Maintains competitive performance in general domains compared to recent models of similar size.

Offers the instruction-tuned models in GGUF format at multiple quantization levels, including Q8_0, Q6_0, Q5_K_M, Q4_K_M, and IQ4_XS, alongside BF16 weights.

Compatible with various deployment frameworks such as TensorRT-LLM, vLLM, SGLang, llama.cpp, and Ollama.

The 2.4B model is optimized for deployment on small or resource-constrained devices.

Provides pre-quantized versions of ExaOne 3.5, both as AWQ checkpoints and as GGUF files in the quantization types listed above.
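To give a feel for what the quantization levels mean on resource-limited devices, the sketch below estimates the storage needed for the weights alone. It only covers the two formats with a fixed size per weight: BF16 (2 bytes per weight) and Q8_0 (blocks of 32 8-bit weights sharing one 16-bit scale, i.e. 34 bytes per 32 weights). The parameter counts are the nominal model sizes, and the names BYTES_PER_WEIGHT, MODEL_PARAMS and estimate_weight_gb are illustrative. Treat the results as rough: real GGUF files store some tensors at other precisions, and inference also needs memory for the KV cache, which grows with the context length.

```python
# Bytes per weight for the two GGUF storage types with a fixed layout:
#   BF16 : 16 bits per weight.
#   Q8_0 : 32 int8 weights + one fp16 scale per block -> 34 bytes / 32 weights.
BYTES_PER_WEIGHT = {
    "BF16": 2.0,
    "Q8_0": 34 / 32,
}

# Nominal parameter counts of the three ExaOne 3.5 sizes (actual counts differ slightly).
MODEL_PARAMS = {
    "2.4B": 2.4e9,
    "7.8B": 7.8e9,
    "32B": 32e9,
}


def estimate_weight_gb(size: str, quant: str) -> float:
    """Rough weight-storage estimate in decimal gigabytes (1 GB = 1e9 bytes)."""
    return MODEL_PARAMS[size] * BYTES_PER_WEIGHT[quant] / 1e9


if __name__ == "__main__":
    # Print one line per model size with an estimate for each storage type.
    for size in MODEL_PARAMS:
        row = ", ".join(
            f"{quant}: {estimate_weight_gb(size, quant):.2f} GB"
            for quant in BYTES_PER_WEIGHT
        )
        print(f"{size}: {row}")
```

For example, the 7.8B model needs about 7.8e9 × 2 bytes = 15.6 GB in BF16, while Q8_0 roughly halves that. The other listed types are left out because the exact mix of block formats inside each file is not stated here, and guessing would make the estimate misleading.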

How do you use ExaOne 3.5?

1. Install llama.cpp. Refer to the llama.cpp GitHub repository for installation instructions.

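2. Choose which GGUF file of ExaOne 3.5 you need: the model size (2.4B, 7.8B, or 32B) and the precision or quantization type (for example, BF16 or Q4_K_M).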

3. Use huggingface-cli (installed with the huggingface_hub Python package) to download the GGUF file(s). For example, to fetch the BF16 weights of the 7.8B model:

```shell
huggingface-cli download LGAI-EXAONE/EXAONE-3.5-7.8B-Instruct-GGUF --include "EXAONE-3.5-7.8B-Instruct-BF16*.gguf" --local-dir .
```

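4. Start an interactive chat session with llama-cli. The -cnv flag enables conversation mode, and in that mode the text passed with -p is used as the system prompt. For example: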

```shell
llama-cli -cnv -m ./EXAONE-3.5-7.8B-Instruct-BF16.gguf -p "You are the EXAONE model from LG AI Research, a helpful assistant."
```
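If you prefer to script steps 3 and 4 from Python, the sketch below mirrors the two commands above. It is a minimal wrapper under stated assumptions: huggingface_hub is installed (its snapshot_download function accepts allow_patterns and local_dir), llama-cli from step 1 is on your PATH, and files for quantization types other than BF16 follow the same naming pattern as the BF16 example. That last point is an assumption, so check the file list in the repository. The helper names (download_gguf, llama_cli_args, chat, REPO_TEMPLATE, FILE_TEMPLATE) are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List

# Naming pattern taken from the BF16 example in step 3; assumed to hold for other quant types.
REPO_TEMPLATE = "LGAI-EXAONE/EXAONE-3.5-{size}-Instruct-GGUF"
FILE_TEMPLATE = "EXAONE-3.5-{size}-Instruct-{quant}*.gguf"

SYSTEM_PROMPT = "You are the EXAONE model from LG AI Research, a helpful assistant."


def download_gguf(size: str = "7.8B", quant: str = "BF16", local_dir: str = ".") -> None:
    """Python counterpart of the huggingface-cli command in step 3."""
    # Imported lazily so the other helpers work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    snapshot_download(
        repo_id=REPO_TEMPLATE.format(size=size),
        allow_patterns=[FILE_TEMPLATE.format(size=size, quant=quant)],
        local_dir=local_dir,
    )


def llama_cli_args(model_path: str, system_prompt: str = SYSTEM_PROMPT) -> List[str]:
    """Build the same llama-cli invocation as step 4 (conversation mode)."""
    return ["llama-cli", "-cnv", "-m", model_path, "-p", system_prompt]


def chat(model_path: str) -> None:
    """Check prerequisites, then hand the terminal to llama-cli's chat session."""
    if shutil.which("llama-cli") is None:
        raise RuntimeError("llama-cli not found on PATH; install llama.cpp first (step 1)")
    if not Path(model_path).exists():
        raise FileNotFoundError(model_path)
    subprocess.run(llama_cli_args(model_path), check=True)
```

Calling download_gguf() followed by chat("./EXAONE-3.5-7.8B-Instruct-BF16.gguf") reproduces the two shell commands. Passing the arguments as a list to subprocess.run avoids shell quoting problems with the system prompt.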