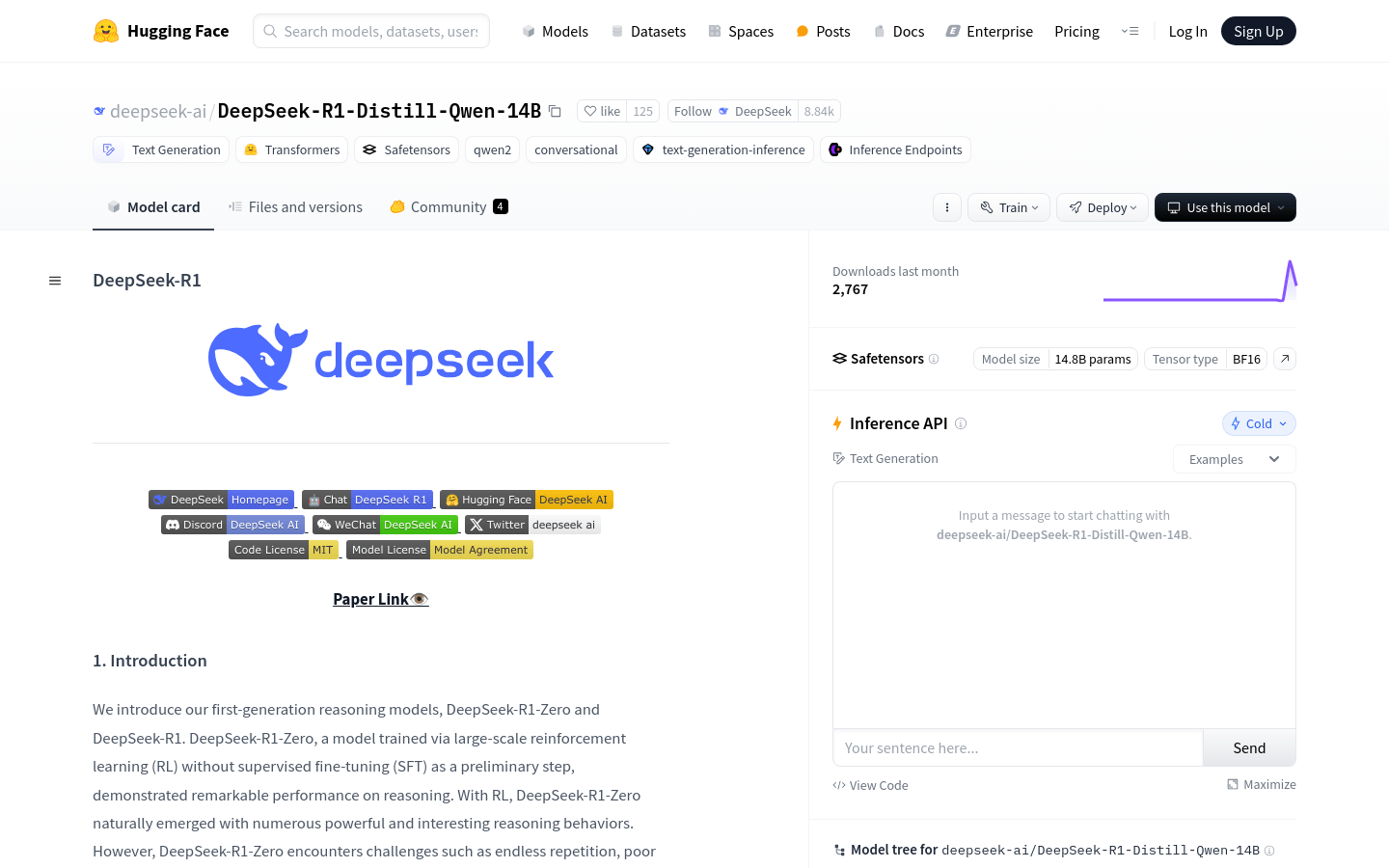

What is Florence-2?

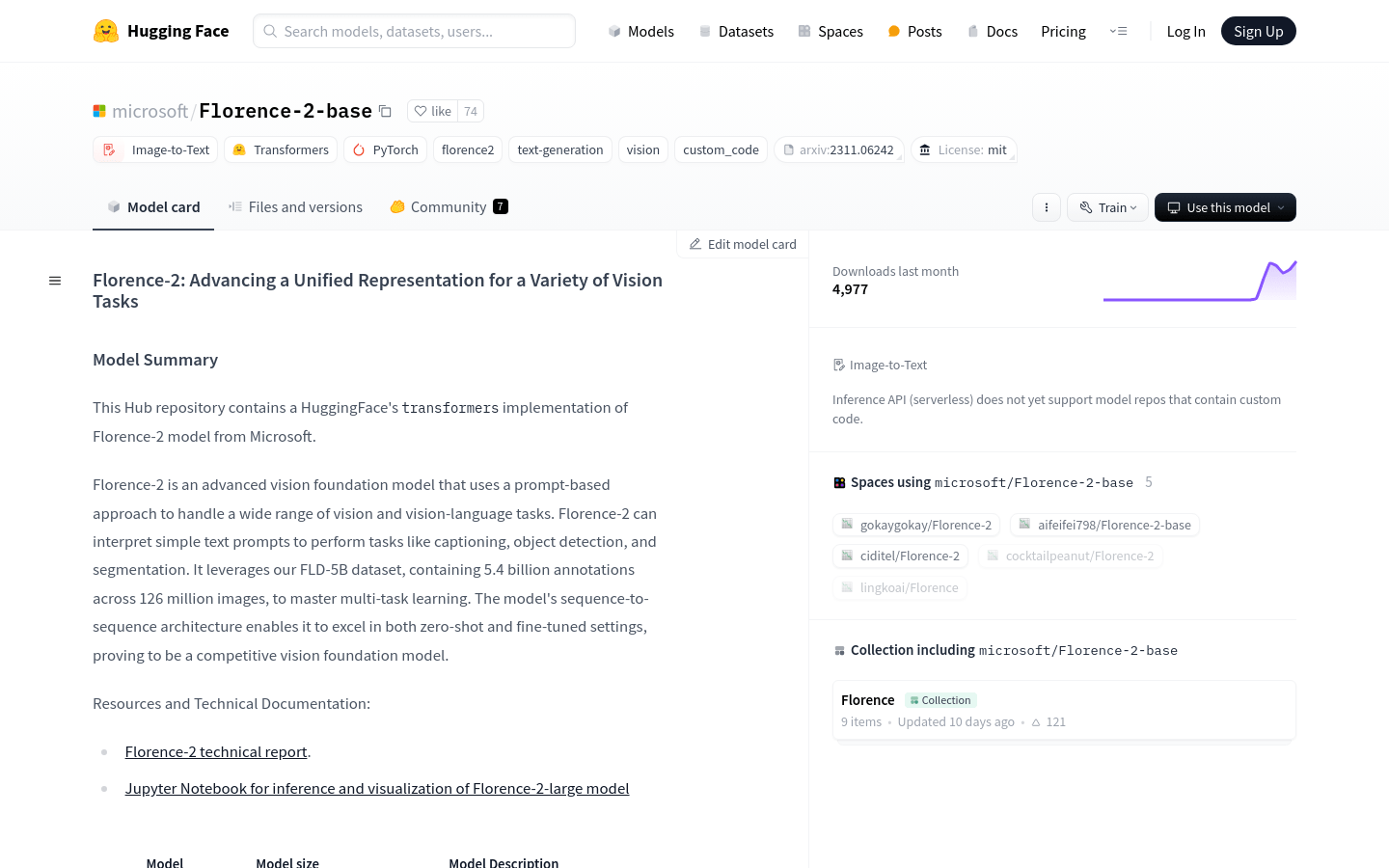

Florence-2 is a vision foundation model developed by Microsoft. It uses a prompt-based approach to handle a wide range of vision and vision-language tasks: given a short text prompt that names the task, it can perform image description, object detection, segmentation, and more. It is trained on the FLD-5B dataset, which contains 5.4 billion annotations across 126 million images, and this scale is what lets a single model learn many tasks at once.

Target Audience:

Florence-2 is aimed at researchers and developers who work on vision and vision-language tasks such as image description, object detection, and image segmentation. Because it is trained on many tasks at once and uses a sequence-to-sequence architecture, a single model can cover all of them; only the prompt changes.

Use Cases:

Generate image descriptions using Florence-2.

Perform object detection with Florence-2.

Implement image segmentation using Florence-2.
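Each of these use cases is selected with a task prompt token. The snippet below is a minimal sketch that assumes the task tokens listed on the Hugging Face model card for Florence-2; the names `TASK_PROMPTS` and `build_prompt` are illustrative and not part of any library.

```python
# Task prompt tokens, assumed from the Florence-2 model card on Hugging Face.
TASK_PROMPTS = {
    "caption": "<CAPTION>",
    "detailed_caption": "<DETAILED_CAPTION>",
    "object_detection": "<OD>",
    "referring_segmentation": "<REFERRING_EXPRESSION_SEGMENTATION>",
}


def build_prompt(use_case: str, text_input: str = "") -> str:
    """Return the task token, followed by extra text for tasks that need it."""
    return TASK_PROMPTS[use_case] + text_input


# Captioning and detection need only the token; referring-expression
# segmentation also takes a phrase describing the region to segment.
print(build_prompt("object_detection"))
print(build_prompt("referring_segmentation", "a green car"))
```

Keeping the tokens in one mapping makes it easy to switch tasks without touching the rest of the pipeline.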

Key Features:

Converts images to text.

Generates text based on prompts.

Handles visual and vision-language tasks.

Supports multi-task learning.

Performs well in zero-shot and fine-tuning settings.

Uses a sequence-to-sequence architecture.

Tutorial:

1. Import the required classes from the `transformers` library: `AutoModelForCausalLM` and `AutoProcessor`.

2. Load the pre-trained model and processor from Hugging Face.

3. Define the task prompt, for example `<CAPTION>` for image description or `<OD>` for object detection.

4. Load or obtain images for processing.

5. Use the processor to convert the prompt and the image into the input tensors the model expects.

6. Call the model's generation method to produce output tokens, which encode results such as a text description or bounding-box coordinates.

7. Post-process the generated output to get the final results.

8. Display the results through printing or other means.
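The steps above can be sketched roughly as follows. This is a minimal example, not a definitive implementation: it assumes the `microsoft/Florence-2-large` checkpoint and the usage pattern shown on its Hugging Face model card (including `trust_remote_code=True` and the processor's `post_process_generation` method), and it may need adjusting for your `transformers` version. The function names `run_florence2` and `pair_detections` and the file name `photo.jpg` are hypothetical.

```python
def run_florence2(image, task_prompt, text_input="",
                  model_id="microsoft/Florence-2-large"):
    """Run one Florence-2 task on a PIL image and return the parsed result."""
    # Step 1: import inside the function so the helper below works
    # even when transformers is not installed.
    from transformers import AutoModelForCausalLM, AutoProcessor

    # Step 2: load the pre-trained model and processor (assumed checkpoint).
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)
    processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

    # Step 3: the prompt is the task token plus any extra text.
    prompt = task_prompt + text_input

    # Step 5: convert prompt and image into model inputs.
    inputs = processor(text=prompt, images=image, return_tensors="pt")

    # Step 6: generate output tokens.
    generated_ids = model.generate(
        input_ids=inputs["input_ids"],
        pixel_values=inputs["pixel_values"],
        max_new_tokens=1024,
        do_sample=False,
        num_beams=3,
    )

    # Step 7: decode and post-process into a task-specific dictionary.
    generated_text = processor.batch_decode(
        generated_ids, skip_special_tokens=False
    )[0]
    return processor.post_process_generation(
        generated_text, task=task_prompt, image_size=(image.width, image.height)
    )


def pair_detections(parsed, task="<OD>"):
    """Pair each label with its [x1, y1, x2, y2] box from a parsed result."""
    result = parsed[task]
    return list(zip(result["labels"], result["bboxes"]))


# Steps 4 and 8 (downloads the model on first call; "photo.jpg" is a placeholder):
# from PIL import Image
# image = Image.open("photo.jpg").convert("RGB")
# for label, box in pair_detections(run_florence2(image, "<OD>")):
#     print(label, box)
```

For captioning tasks the parsed result is a dictionary keyed by the task token with the caption string as its value, so it can be printed directly without `pair_detections`.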