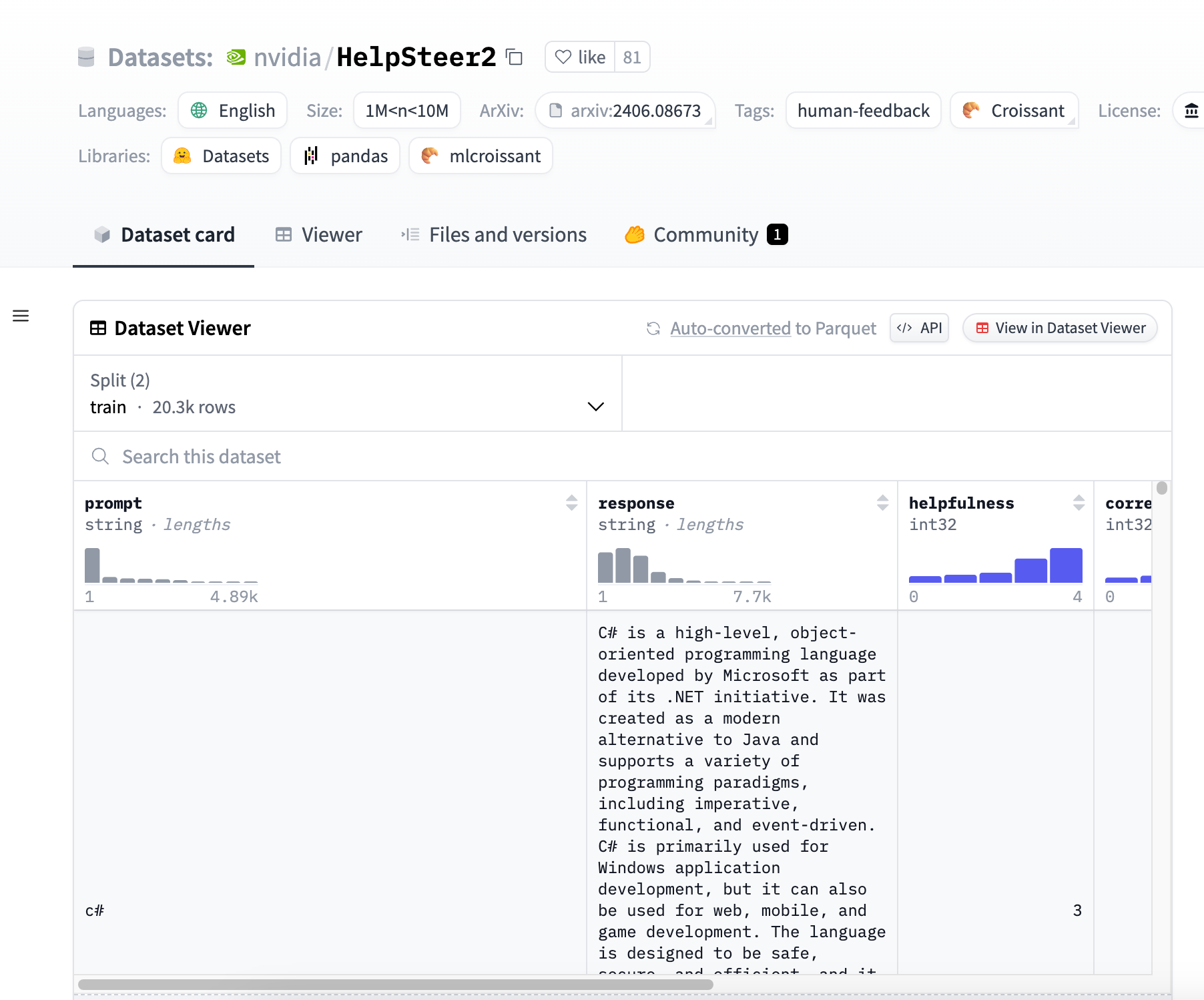

HelpSteer2 is an open-source dataset released by NVIDIA to support aligning models so that their responses are more helpful, factually correct, and coherent, while remaining adjustable in the complexity and verbosity of those responses. The dataset was created in partnership with Scale AI.

Target audience:

The HelpSteer2 dataset is primarily intended for developers and researchers who train and optimize dialogue systems, reward models, and language models. It is particularly suited to practitioners who want to improve model performance on specific tasks such as customer-service automation, virtual assistants, or other scenarios that require natural language understanding and generation.

Example usage scenarios:

Training a SteerLM regression reward model, which predicts the attribute scores of a response, to improve a dialogue system's performance on specific tasks.

Analyzing and comparing, as part of a research project, how well different models respond in multi-turn dialogue.

Teaching students how machine learning techniques can be used to improve the responses of language models.
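To make the first scenario concrete: a regression reward model outputs one score per attribute instead of a single preference score. The Python sketch below assumes a plain mean-squared-error objective over the five attributes and then collapses the predictions into one scalar reward. The function names and the values in EXAMPLE_WEIGHTS are hypothetical and chosen only for illustration; they are not taken from SteerLM or from NVIDIA's training recipe.

```python
from typing import Dict, Mapping, Sequence

# Attribute names as they appear in the HelpSteer2 annotations.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")

# Hypothetical weights for illustration only; tune them for your own use case.
EXAMPLE_WEIGHTS: Dict[str, float] = {
    "helpfulness": 1.0,
    "correctness": 0.5,
    "coherence": 0.5,
    "complexity": 0.0,
    "verbosity": 0.0,
}


def regression_loss(
    predicted: Sequence[Sequence[float]], labels: Sequence[Mapping[str, float]]
) -> float:
    """Mean squared error over every (sample, attribute) score."""
    if len(predicted) != len(labels) or not labels:
        raise ValueError("need the same, non-zero number of predictions and labels")
    total = 0.0
    for pred_row, label in zip(predicted, labels):
        if len(pred_row) != len(ATTRIBUTES):
            raise ValueError("each prediction row must hold one score per attribute")
        # Each prediction row lists the five scores in ATTRIBUTES order.
        for value, name in zip(pred_row, ATTRIBUTES):
            total += (value - label[name]) ** 2
    return total / (len(labels) * len(ATTRIBUTES))


def scalar_reward(
    pred_row: Sequence[float], weights: Mapping[str, float] = EXAMPLE_WEIGHTS
) -> float:
    """Collapse five predicted attribute scores into one reward via a weighted sum."""
    return sum(weights[name] * value for name, value in zip(ATTRIBUTES, pred_row))
```

A trainer would minimize regression_loss with respect to the model's five outputs; scalar_reward is only needed when a single number is required, for example to rank candidate responses.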

Product features:

Contains 21,362 samples, each consisting of a prompt, a response, and five human-annotated attribute scores.

The attributes are helpfulness, correctness, coherence, complexity, and verbosity, each rated on a scale from 0 to 4.

Includes multi-turn conversations, and because each prompt comes with two responses, samples that share a prompt can be turned into preference pairs for DPO or preference reward model training.

Responses were generated by 10 different in-house large language models, which yields diverse yet reasonable responses.

Annotations were collected through Scale AI, which helps keep labeling quality and consistency high across the dataset.

The dataset is released under the CC-BY-4.0 license, so it can be used and redistributed freely with attribution.
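The preference-pair idea from the features above can be sketched in Python as follows. It groups samples by prompt and, for every two responses whose helpfulness ratings differ by at least min_gap, emits a record with prompt, chosen, and rejected fields, a layout commonly used as DPO input. Using the helpfulness rating alone as the preference signal, and the min_gap threshold itself, are assumptions made for this example.

```python
from collections import defaultdict
from itertools import combinations
from typing import Any, Dict, Iterable, List


def build_preference_pairs(
    samples: Iterable[Dict[str, Any]], min_gap: int = 1
) -> List[Dict[str, str]]:
    """Turn responses that share a prompt into (chosen, rejected) pairs.

    Assumption: the response with the higher helpfulness rating is preferred.
    """
    by_prompt: Dict[str, List[Dict[str, Any]]] = defaultdict(list)
    for sample in samples:
        by_prompt[sample["prompt"]].append(sample)

    pairs: List[Dict[str, str]] = []
    for prompt, responses in by_prompt.items():
        for a, b in combinations(responses, 2):
            gap = a["helpfulness"] - b["helpfulness"]
            # Skip ties and differences smaller than the requested margin.
            if gap == 0 or abs(gap) < min_gap:
                continue
            chosen, rejected = (a, b) if gap > 0 else (b, a)
            pairs.append(
                {"prompt": prompt, "chosen": chosen["response"], "rejected": rejected["response"]}
            )
    return pairs
```

Raising min_gap trades dataset size for cleaner preference signal, since pairs with a one-point difference are more likely to reflect annotator noise.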

Usage tutorial:

Step 1: Go to the Hugging Face Hub and search for the HelpSteer2 dataset (repository nvidia/HelpSteer2).

Step 2: Download the dataset and load it with a suitable tool or library, such as the Hugging Face datasets library.
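A minimal Python sketch of this step, assuming the Hugging Face datasets library is installed (pip install datasets) and the data is published as nvidia/HelpSteer2 with train and validation splits. The helper names load_helpsteer2 and attribute_means are hypothetical; load_dataset is the library's own entry point, and iterating over the Dataset it returns yields one dict per sample.

```python
from typing import Any, Dict, Iterable

# Attribute names as they appear in the HelpSteer2 annotations.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


def load_helpsteer2(split: str = "train"):
    """Download one split from the Hugging Face Hub (requires the datasets package)."""
    # Imported lazily so that attribute_means below also works offline.
    from datasets import load_dataset

    return load_dataset("nvidia/HelpSteer2", split=split)


def attribute_means(samples: Iterable[Dict[str, Any]]) -> Dict[str, float]:
    """Average each attribute score over the given samples."""
    rows = list(samples)
    if not rows:
        raise ValueError("no samples given")
    return {name: sum(row[name] for row in rows) / len(rows) for name in ATTRIBUTES}
```

For example, attribute_means(load_helpsteer2("validation")) gives a quick overview of the score distribution before any filtering or training.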

Step 3: Depending on your project's requirements, select the samples or attributes you want to analyze.
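As an illustration of this step, the Python sketch below keeps only the samples whose scores fall inside chosen inclusive ranges, for instance fully correct but not overly verbose responses. The function name and the range format are assumptions for this example.

```python
from typing import Any, Dict, Iterable, List, Tuple


def select_samples(
    samples: Iterable[Dict[str, Any]], ranges: Dict[str, Tuple[int, int]]
) -> List[Dict[str, Any]]:
    """Keep samples whose scores lie within every inclusive (low, high) range.

    Attributes not mentioned in `ranges` are not constrained.
    """
    return [
        sample
        for sample in samples
        if all(low <= sample[name] <= high for name, (low, high) in ranges.items())
    ]


# Example: fully correct responses with verbosity at most 2 (scores run from 0 to 4).
EXAMPLE_RANGES = {"correctness": (4, 4), "verbosity": (0, 2)}
```

If the data is already loaded as a datasets.Dataset, the same predicate can be passed to that object's filter method instead of building a Python list.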

Step 4: Use the dataset to train or fine-tune your language model, and monitor its performance on each attribute.
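For a model that predicts attribute scores, such as a regression reward model, one way to monitor per-attribute performance is to compare its predictions with the human ratings on held-out data. The sketch below reports mean squared error and Pearson correlation for each attribute; the choice of metrics and the function names are assumptions, not a prescribed evaluation protocol.

```python
import math
from typing import Dict, Mapping, Sequence

# Attribute names as they appear in the HelpSteer2 annotations.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; NaN when either input has zero variance."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long sequences with at least two values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if std_x == 0 or std_y == 0:
        return float("nan")
    return cov / (std_x * std_y)


def per_attribute_report(
    predictions: Sequence[Mapping[str, float]], labels: Sequence[Mapping[str, float]]
) -> Dict[str, Dict[str, float]]:
    """MSE and Pearson correlation between predicted and human scores, per attribute."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    report: Dict[str, Dict[str, float]] = {}
    for name in ATTRIBUTES:
        pred = [p[name] for p in predictions]
        gold = [g[name] for g in labels]
        mse = sum((p - g) ** 2 for p, g in zip(pred, gold)) / len(gold)
        report[name] = {"mse": mse, "pearson": pearson(pred, gold)}
    return report
```

Tracking both metrics is useful because a model can rank responses well (high correlation) while still being systematically offset on the 0 to 4 scale (high MSE).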

Step 5: Adjust model parameters and refine the training process as needed.

Step 6: Evaluate the model to confirm that it meets your expected standards for helpfulness, correctness, and the other key attributes.
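For a reward model, one common evaluation is pairwise accuracy: the fraction of preference pairs in which the model scores the preferred response above the rejected one. The sketch below assumes pairs stored as dicts with prompt, chosen, and rejected keys and a hypothetical score_fn(prompt, response) callable that wraps your model; which accuracy counts as meeting expectations is a decision for your project.

```python
from typing import Callable, Dict, Iterable


def pairwise_accuracy(
    pairs: Iterable[Dict[str, str]], score_fn: Callable[[str, str], float]
) -> float:
    """Fraction of pairs where score_fn ranks the chosen response strictly higher."""
    total = 0
    correct = 0
    for pair in pairs:
        total += 1
        chosen_score = score_fn(pair["prompt"], pair["chosen"])
        rejected_score = score_fn(pair["prompt"], pair["rejected"])
        # Ties count as errors, so a constant scorer gets 0% accuracy.
        if chosen_score > rejected_score:
            correct += 1
    if total == 0:
        raise ValueError("no pairs to evaluate")
    return correct / total
```

In practice score_fn would run the trained reward model; a trivial stand-in such as lambda prompt, response: len(response) is handy for checking the evaluation loop itself.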

Step 7: Deploy the trained model in a real-world application, such as a chatbot or virtual assistant.