What is HiDiffusion?

HiDiffusion is a method for pre-trained diffusion models that raises their output resolution and inference speed with just one added line of code. It combines two techniques: a Resolution-Aware U-Net (RAU-Net), which dynamically adjusts feature map sizes to address object replication, and Modified Shifted Window Multi-head Self-Attention (MSW-MSA), which optimizes window attention to reduce computational load. HiDiffusion can extend image generation to resolutions of up to 4096x4096 while running inference 1.5 to 6 times faster than previous methods.
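
As a rough illustration of why restricting attention to windows lowers the computational load, the sketch below counts token-pair comparisons for global self-attention and for attention limited to non-overlapping windows. The 8x latent downsampling factor, the choice of four windows, and all function names are assumptions made for this example; they are not taken from HiDiffusion's implementation.

```python
# Illustrative arithmetic only: the latent scale (8) and the four-window split
# are assumptions, not HiDiffusion's actual configuration.


def latent_tokens(height: int, width: int, latent_scale: int = 8) -> int:
    """Number of spatial tokens in a latent feature map for a given image size."""
    return (height // latent_scale) * (width // latent_scale)


def global_attention_pairs(tokens: int) -> int:
    """Global self-attention compares every token with every token."""
    return tokens * tokens


def window_attention_pairs(tokens: int, num_windows: int) -> int:
    """Window attention compares tokens only with others in the same window."""
    tokens_per_window = tokens // num_windows
    return num_windows * tokens_per_window * tokens_per_window


if __name__ == "__main__":
    for side in (1024, 2048, 4096):
        n = latent_tokens(side, side)
        full = global_attention_pairs(n)
        windowed = window_attention_pairs(n, num_windows=4)
        print(f"{side}x{side}: {n} tokens, global/windowed ratio = {full / windowed:.1f}")
```

Under these assumptions the number of comparisons falls by a factor equal to the number of windows. The end-to-end speedup of a real pipeline depends on much more than this one term.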

Who Can Benefit from HiDiffusion?

Professionals requiring high-resolution image synthesis

Researchers in image processing and visual arts

Designers needing more efficient creation tools

Businesses aiming to save time and costs in image generation

Example Scenarios:

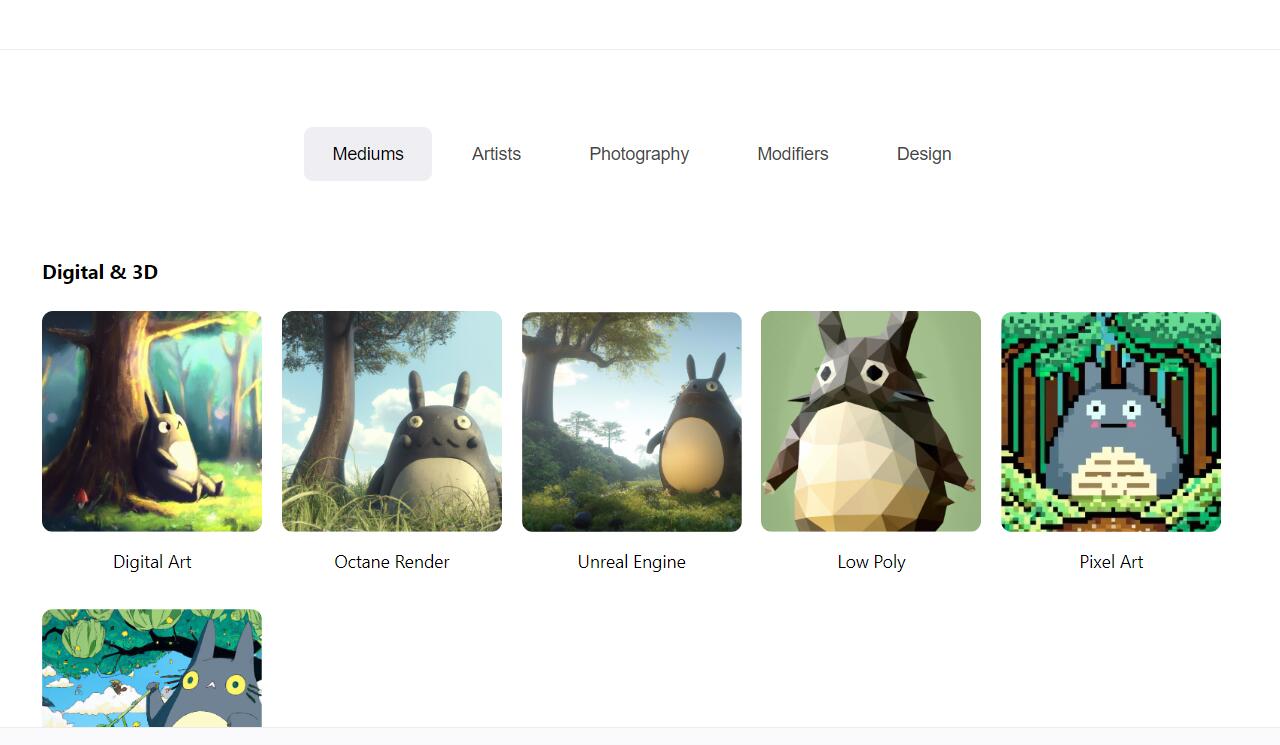

Generate detailed anime-style character images

Quickly produce high-quality background images for design projects

Create high-resolution image assets for film visual effects

Key Features:

Enhances resolution and speed with one line of code

Dynamically adjusts feature map sizes to prevent object replication

Optimizes window attention to minimize computation

Supports image resolutions up to 4096x4096

Achieves state-of-the-art performance in high-resolution image synthesis

Integrates seamlessly into various pre-trained diffusion models without extra adjustments

Improves inference speed by 1.5 to 6 times

How to Use HiDiffusion:

1. Visit the HiDiffusion website or GitHub page

2. Read the documentation to understand its working principles and integration methods

3. Choose a suitable pre-trained diffusion model based on your needs

4. Add the single HiDiffusion line to your pipeline code

5. Set the generation parameters, such as output height and width, for the resolution and speed you need

6. Run the model and observe the generated images

7. Perform any necessary post-processing to meet specific requirements
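
The steps above can be sketched roughly as follows. This is a minimal example under stated assumptions, not the project's official usage: it assumes the `diffusers`, `torch`, and `hidiffusion` packages are installed, that a CUDA GPU is available, and that `apply_hidiffusion` is the one-line entry point described in the project's README. The `check_resolution` and `generate` helpers, the default model ID, and the multiple-of-8 check are illustrative choices introduced here.

```python
# Sketch of steps 3-6. Assumptions: `diffusers`, `torch`, and `hidiffusion` are
# installed, a CUDA GPU is present, and `apply_hidiffusion` is the one-line
# entry point from the project README. Helper names are hypothetical.

MAX_SIDE = 4096  # upper bound quoted in this article


def check_resolution(height: int, width: int) -> tuple[int, int]:
    """Validate a target size before spending GPU time on it."""
    for name, value in (("height", height), ("width", width)):
        if value <= 0 or value % 8 != 0:
            raise ValueError(f"{name} must be a positive multiple of 8, got {value}")
        if value > MAX_SIDE:
            raise ValueError(f"{name} exceeds {MAX_SIDE}, got {value}")
    return height, width


def generate(prompt: str, height: int = 2048, width: int = 2048,
             model_id: str = "stabilityai/stable-diffusion-xl-base-1.0"):
    """Load a pre-trained pipeline, patch it with HiDiffusion, render one image."""
    height, width = check_resolution(height, width)

    # Heavy imports stay local so check_resolution works without a GPU stack.
    import torch
    from diffusers import StableDiffusionXLPipeline
    from hidiffusion import apply_hidiffusion  # assumed API, see note above

    # Step 3: choose a pre-trained diffusion model.
    pipe = StableDiffusionXLPipeline.from_pretrained(
        model_id, torch_dtype=torch.float16
    ).to("cuda")

    # Step 4: the single added line.
    apply_hidiffusion(pipe)

    # Steps 5-6: set the target resolution and run the model.
    return pipe(prompt, height=height, width=width).images[0]
```

Calling `generate("a detailed anime-style character portrait")` would return a PIL image that can be saved or passed to whatever post-processing step 7 calls for. Higher resolutions need considerably more GPU memory, so it is reasonable to start at 2048x2048 before trying 4096x4096.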