What is In-Context LoRA?

In-Context LoRA is a fine-tuning technique for diffusion transformers (DiTs) that jointly processes multiple related images, concatenated into a single composite image and described by one merged prompt, rather than relying solely on text. A lightweight LoRA adapter is then trained on this data for a specific task, while the model's architecture and pipeline stay task-agnostic. The key benefits are efficient fine-tuning with small datasets and no need to modify the original DiT model: only the training data changes.
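To make the idea of combining images concrete, here is a minimal sketch of how one training sample might be assembled: several images placed side by side in one composite, plus a single prompt that covers all of them. It assumes images are plain 2D lists of pixel values (to stay dependency-free) and uses a hypothetical `[IMAGE1]`, `[IMAGE2]` tagging convention for the merged prompt. Neither detail is taken from the In-Context LoRA release.

```python
from typing import List, Sequence

# Hypothetical stand-in for an image: image[row][col] -> pixel value.
Image = List[List[int]]


def concat_horizontal(images: Sequence[Image]) -> Image:
    """Place images side by side in one composite; all must share the same height."""
    if not images:
        raise ValueError("need at least one image")
    height = len(images[0])
    if any(len(img) != height for img in images):
        raise ValueError("all images must have the same height")
    # For each row index, join that row from every image, left to right.
    return [[px for img in images for px in img[r]] for r in range(height)]


def merge_prompt(overall: str, captions: Sequence[str]) -> str:
    """Combine an overall description with per-image captions into one prompt.

    The "[IMAGEn]" tags are a hypothetical convention chosen for this sketch.
    """
    tagged = " ".join(f"[IMAGE{i}] {c}" for i, c in enumerate(captions, start=1))
    return f"{overall} {tagged}"


if __name__ == "__main__":
    left = [[1, 2], [3, 4]]  # 2x2 image
    right = [[5], [6]]       # 2x1 image
    print(concat_horizontal([left, right]))  # [[1, 2, 5], [3, 4, 6]]
    print(merge_prompt(
        "A two-panel storyboard of a chase.",
        ["A thief runs down an alley.", "A guard follows him."],
    ))
```

A real pipeline would do the same with an image library and store each composite alongside its merged prompt as one training example.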

Target Audience:

This method is aimed at researchers and developers in image generation who need to adapt diffusion transformer models to specific tasks. In-Context LoRA offers an effective, low-cost way to improve results on a target task while keeping the model versatile and flexible.

Example Scenarios:

1. Movie Storyboard Generation: Use In-Context LoRA to create a series of images that tell a coherent story.

2. Portrait Photography: Generate a set of portraits that maintain the same identity.

3. Font Design: Create a collection of images with consistent font styles suitable for brand design.

Key Features:

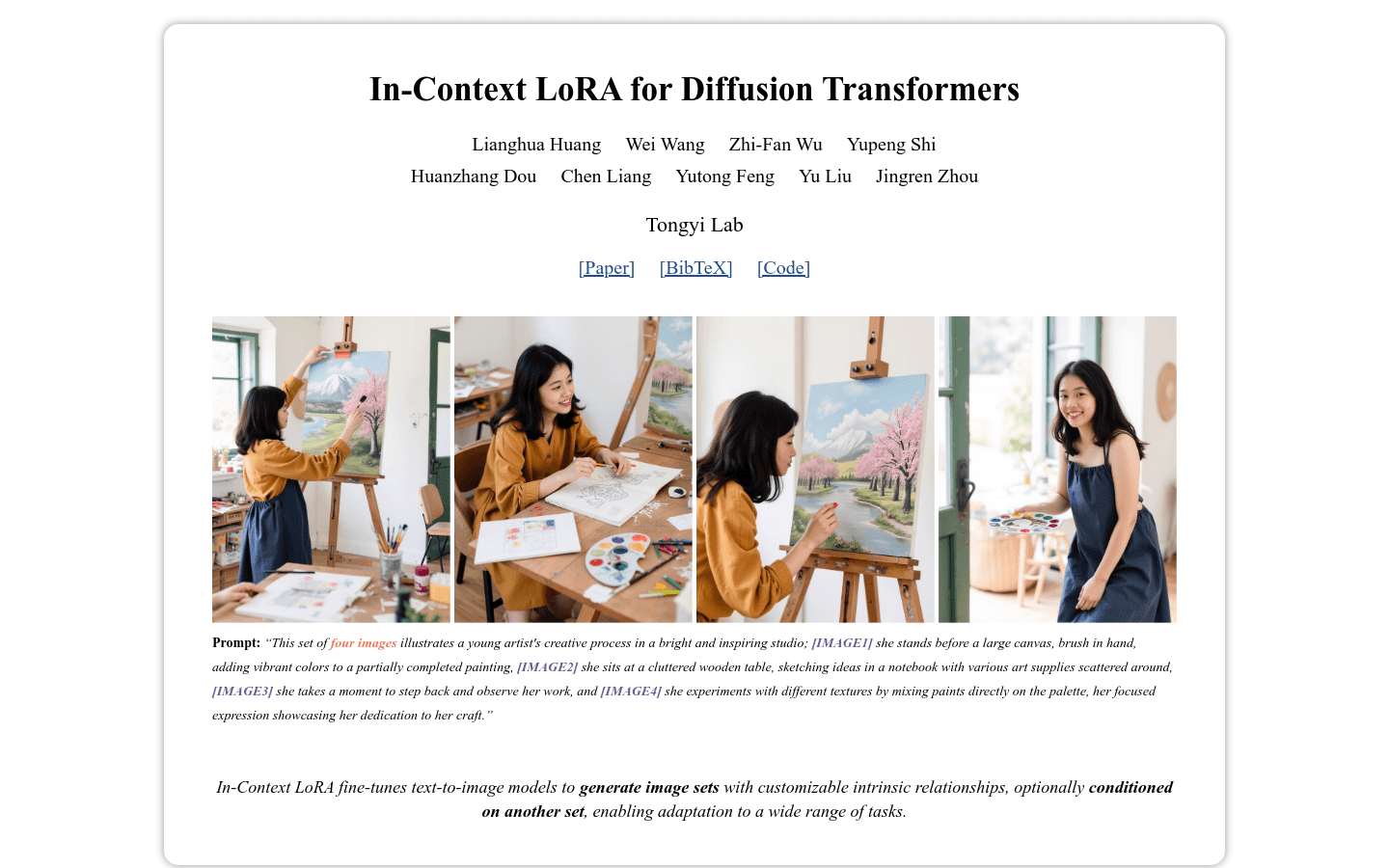

Jointly Describes Multiple Images: Concatenates several images into a single composite image and describes them with one merged prompt, instead of processing each image separately, which improves relevance and consistency across the set.

Task-Specific LoRA Fine-Tuning: Trains a lightweight LoRA adapter on a small dataset (20-100 samples) instead of adjusting all parameters on a large dataset.

Generates High-Fidelity Image Sets: Carefully curated training data lets the tuned model produce higher-quality image sets that follow the prompt more closely.

Maintains Task-Agnostic Nature: Although the tuning data is task-specific, the architecture and pipeline remain task-agnostic, so the same framework applies across many tasks.

No Need to Modify the Original DiT Model: Only the training data changes; the original model is left unaltered, which simplifies the fine-tuning process.

Supports Various Image Generation Tasks: These include movie storyboard generation, portrait photography, and font design, showcasing the framework's adaptability.
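The LoRA fine-tuning mentioned in the features above follows the standard LoRA formulation: a frozen weight matrix W is augmented with a trainable low-rank update, giving y = W x + (alpha / r) B A x. The sketch below is a generic, dependency-free illustration of that idea, not In-Context LoRA's own training code. The rank, alpha, the 3072x3072 layer used for the parameter count, and the constant initialisation of A are illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Computes y = W x + (alpha / r) * B (A x); an optimizer would update only A and B."""

    def __init__(self, weight: Matrix, rank: int, alpha: float) -> None:
        self.weight = weight  # frozen base weight, shape (d_out, d_in); never modified here
        d_out, d_in = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        # Standard LoRA initialises A randomly and B to zero, so the adapter starts
        # as a no-op. A constant fills A here to keep the example deterministic.
        self.a: Matrix = [[0.01] * d_in for _ in range(rank)]  # shape (r, d_in)
        self.b: Matrix = [[0.0] * rank for _ in range(d_out)]  # shape (d_out, r)

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)                 # frozen path: W x
        update = matvec(self.b, matvec(self.a, x))    # low-rank path: B (A x)
        return [y + self.scale * u for y, u in zip(base, update)]

    def trainable_params(self) -> int:
        """Number of entries in A and B, the only parameters LoRA trains."""
        return self.rank * len(self.a[0]) + len(self.b) * self.rank


def param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (full-layer parameters, LoRA adapter parameters) for one linear layer."""
    return d_out * d_in, rank * (d_in + d_out)


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1, alpha=1.0)
    print(layer.forward([2.0, 3.0]))  # [2.0, 3.0]: B starts at zero, so no change yet
    full, lora = param_counts(3072, 3072, 16)
    print(full, lora, f"{lora / full:.2%}")  # 9437184 98304 1.04%
```

The parameter count illustrates why LoRA tuning is lightweight: at rank 16 the adapter for a 3072x3072 layer holds about 1% as many trainable values as the layer itself, and the base weights are left untouched.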

Tutorial:

1. Prepare a set of images and corresponding descriptions.

2. Concatenate each set of related images into a single composite image and write one merged prompt that jointly describes all of them.

3. Assemble a small task-specific dataset (typically 20-100 samples) for LoRA fine-tuning.

4. Train the LoRA adapter (the base DiT weights stay frozen) until the generated image sets meet your quality standards.

5. Apply the fine-tuned model to new prompts for the same task.

6. Evaluate whether the generated images match their prompts and meet your quality criteria.

7. If needed, continue fine-tuning the LoRA adapter to improve the results.
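Two bookkeeping steps from the workflow above can be sketched in code: checking that a task-specific dataset stays in the small range the method targets, and cutting a generated multi-panel output back into individual images. This sketch assumes each sample is a side-by-side composite of equal-width panels paired with one merged prompt, that images are 2D lists of pixels, and that the 20-100 bounds are enforced as a hard check. The `Sample`, `check_dataset`, and `split_panels` names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

Image = List[List[int]]  # hypothetical stand-in: image[row][col] -> pixel value


@dataclass
class Sample:
    composite: Image  # all panels for one example, concatenated side by side
    prompt: str       # one merged prompt describing every panel


def check_dataset(samples: List[Sample], low: int = 20, high: int = 100) -> List[Sample]:
    """Validate that a task-specific dataset is small but non-trivial."""
    if not low <= len(samples) <= high:
        raise ValueError(f"expected {low}-{high} samples, got {len(samples)}")
    for i, s in enumerate(samples):
        if not s.prompt.strip():
            raise ValueError(f"sample {i} has an empty prompt")
    return samples


def split_panels(composite: Image, n_panels: int) -> List[Image]:
    """Cut a side-by-side composite into n_panels equal-width images."""
    if n_panels < 1:
        raise ValueError("n_panels must be at least 1")
    width = len(composite[0])
    if width % n_panels:
        raise ValueError("composite width is not divisible by the panel count")
    w = width // n_panels
    # Panel k takes columns [k*w, (k+1)*w) from every row.
    return [[row[k * w:(k + 1) * w] for row in composite] for k in range(n_panels)]


if __name__ == "__main__":
    data = [Sample([[0]], f"prompt {i}") for i in range(20)]
    print(len(check_dataset(data)))  # 20
    generated = [[1, 2, 3, 4], [5, 6, 7, 8]]  # stand-in for a model output
    print(split_panels(generated, 2))  # [[[1, 2], [5, 6]], [[3, 4], [7, 8]]]
```

Splitting only works if generation keeps the same panel layout as the training composites, which is why the sketch assumes equal-width panels throughout.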