What is INFP?

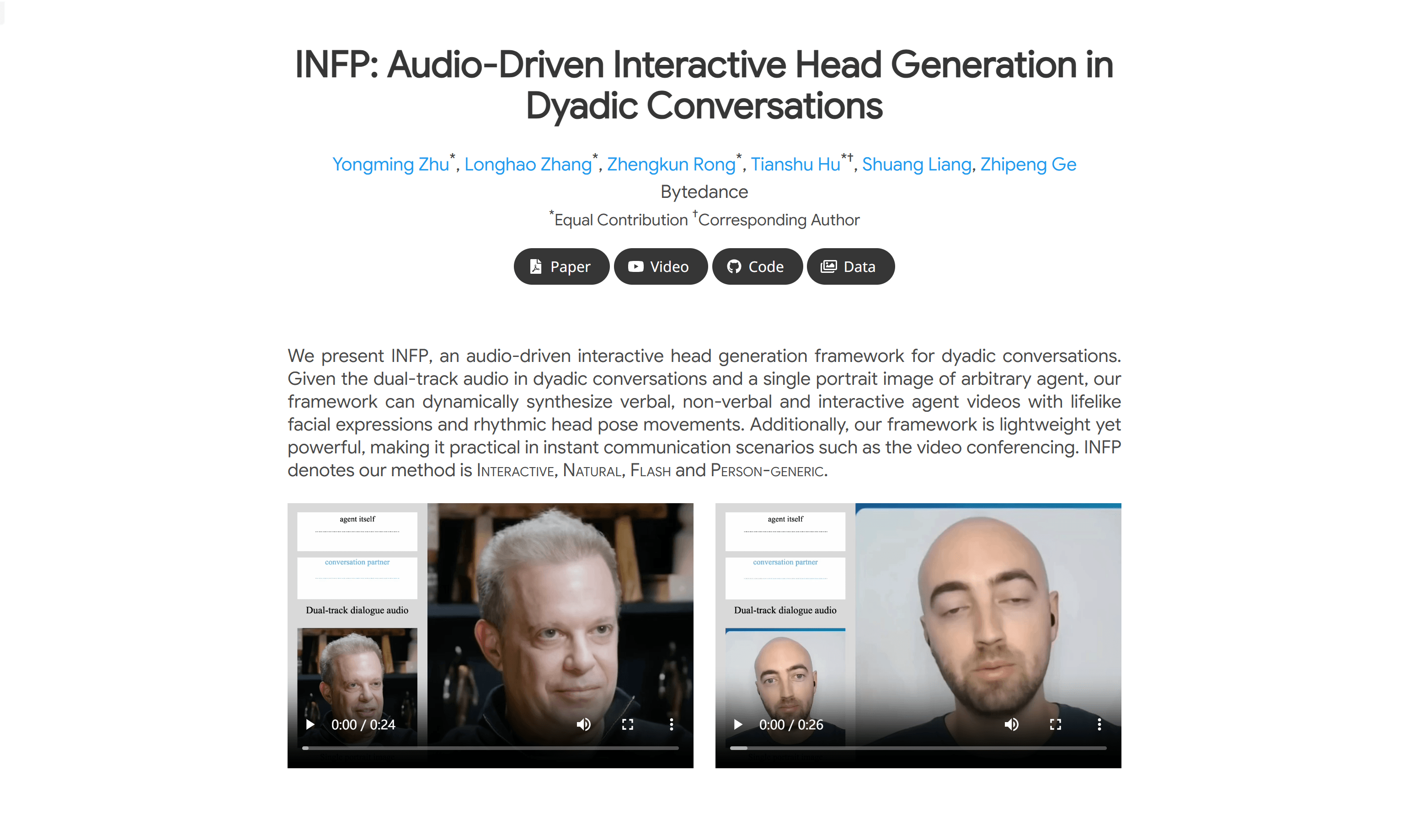

INFP is an audio-driven interactive head generation framework tailored for two-person (dyadic) conversations. Given the dual-track audio of a conversation and a single portrait image of one participant, it dynamically synthesizes video of that participant with lifelike facial expressions and rhythmic head movements. Because it is lightweight, it suits real-time communication scenarios such as video conferencing.

Who Can Use INFP?

The target audience includes users who need virtual agents for video meetings, online education, remote work, and similar settings. INFP is particularly suited to applications that require natural, smooth interaction, such as customer service or online teaching.

Example Scenarios

Video conferences using INFP-generated virtual agents for remote communication.

Teachers in online education using INFP to create virtual representations for lectures.

Customer service teams using INFP to generate virtual agents that interact with customers.

Key Features

Dynamic synthesis of verbal, non-verbal, and interactive agent videos from the input audio and a single portrait image.

Lightweight and powerful, suitable for real-time communication like video conferencing.

Interactive and natural, adapting seamlessly to various dialogue states without manual role switching.

Fast inference, reaching over 40 fps on an Nvidia Tesla A10 GPU, which is sufficient for real-time interaction.

High lip-sync accuracy, combined with rich facial expressions and rhythmic head movements.

Support for multiple languages and singing.

High-fidelity and natural facial behaviors with diverse head actions.
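To put the 40 fps figure in context, the short Python sketch below converts a frame rate into a per-frame time budget and checks whether a measured generation throughput keeps up with a target playback rate. The helper names are hypothetical, and the 25 and 30 fps targets are assumed typical video-call rates, not values taken from INFP.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_realtime(measured_fps: float, target_fps: float) -> bool:
    """True if a generator's measured throughput keeps up with the target playback rate."""
    return measured_fps >= target_fps


if __name__ == "__main__":
    # 40 fps is the throughput reported for INFP on an Nvidia Tesla A10.
    print(f"Per-frame budget at 40 fps: {frame_budget_ms(40):.1f} ms")
    # Assumed target rates for a video call (not from INFP).
    for target in (25, 30):
        print(f"keeps up with {target} fps: {meets_realtime(40, target)}")
```

At 40 fps each frame must be produced in 25 ms. Throughput is only a necessary condition for real-time use: end-to-end latency also depends on audio buffering and network delay, which this arithmetic does not capture.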

How to Use INFP

1. Prepare the dual-track audio of a conversation (one track per speaker) and a single portrait image of the agent.

2. Visit the INFP website and download the necessary code and datasets.

3. Set up the environment according to the documentation and install the required dependencies.

4. Input the prepared audio and image into the INFP framework.

5. The framework will dynamically generate an interactive head video based on the input audio.

6. Review the generated video to ensure it meets the requirements for realism and interactivity.

7. Adjust INFP parameters if needed to optimize the video output.

8. Apply the generated video in actual real-time communication scenarios.
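Step 1 calls for the two speakers' audio as separate tracks. If a conversation was recorded as a single stereo file, the Python sketch below (standard library only) splits it into two mono WAV files. It assumes, hypothetically, that the agent is on the left channel and the partner on the right, and that the audio is uncompressed PCM; the function and file names are illustrative and not part of INFP, whose exact input format should be checked in its own documentation.

```python
import wave


def split_stereo_wav(src_path: str, agent_path: str, partner_path: str) -> None:
    """Split a 2-channel PCM WAV into two mono WAVs.

    Hypothetical channel layout: channel 0 (left) holds the agent's voice,
    channel 1 (right) holds the conversation partner's voice.
    """
    with wave.open(src_path, "rb") as src:
        if src.getnchannels() != 2:
            raise ValueError("expected a 2-channel (stereo) WAV file")
        width = src.getsampwidth()  # bytes per sample, e.g. 2 for 16-bit PCM
        rate = src.getframerate()
        frames = src.readframes(src.getnframes())

    # Stereo PCM is interleaved: [L0, R0, L1, R1, ...], each sample `width` bytes.
    frame_size = 2 * width
    left = b"".join(frames[i : i + width] for i in range(0, len(frames), frame_size))
    right = b"".join(
        frames[i + width : i + frame_size] for i in range(0, len(frames), frame_size)
    )

    # Write each channel as its own mono file, keeping rate and sample width.
    for path, data in ((agent_path, left), (partner_path, right)):
        with wave.open(path, "wb") as dst:
            dst.setnchannels(1)
            dst.setsampwidth(width)
            dst.setframerate(rate)
            dst.writeframes(data)
```

For example, `split_stereo_wav("conversation.wav", "agent.wav", "partner.wav")` writes one file per speaker with the original sample rate and sample width. Compressed formats such as MP3 would need to be decoded to PCM first.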