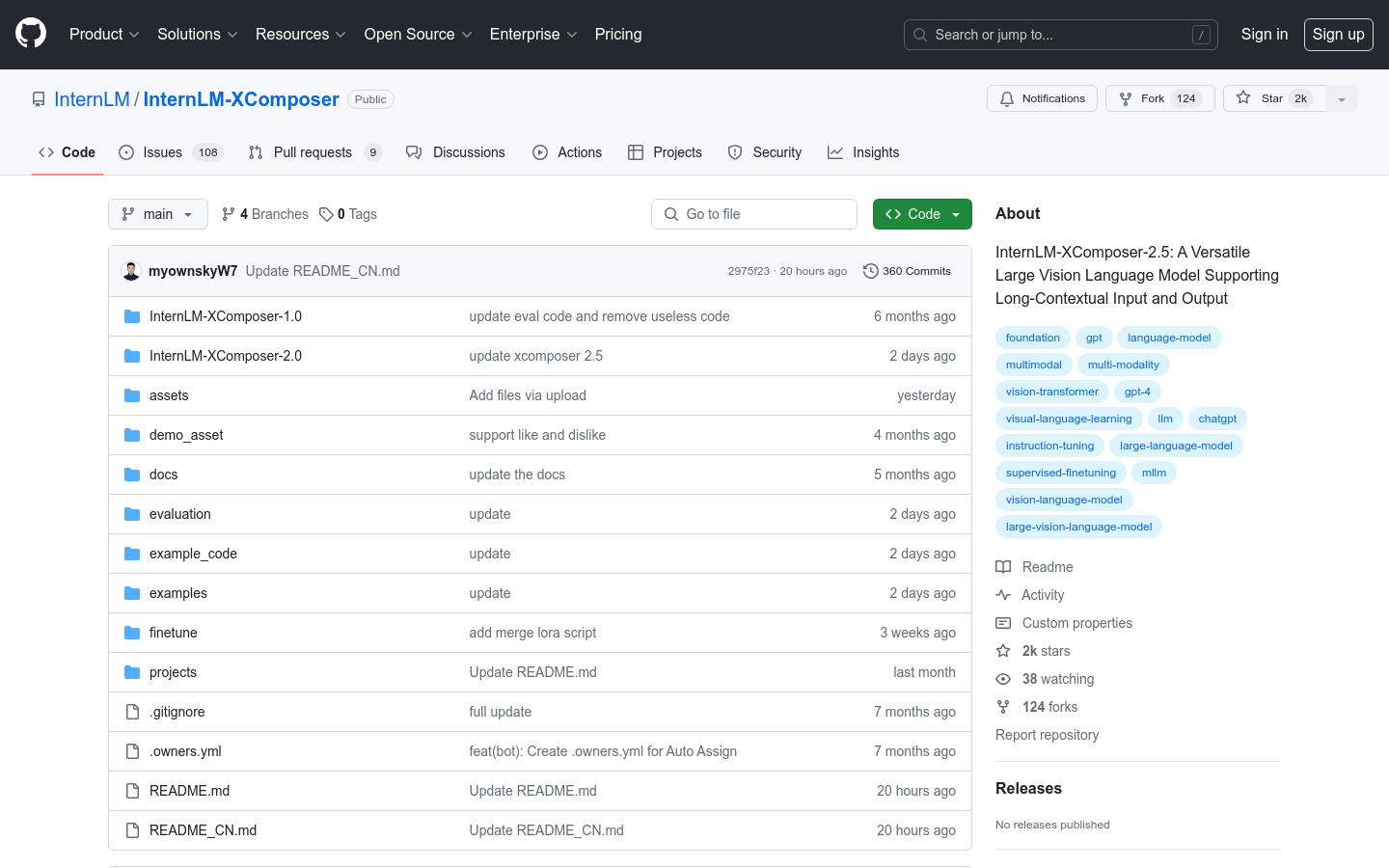

What is InternLM-XComposer-2.5?

InternLM-XComposer-2.5 is a large vision-language model that supports long-context input and output. It performs well across text-image understanding and composition tasks, reaching performance comparable to GPT-4V with only a 7B-parameter LLM backend. Trained on interleaved image-text contexts of 24K, it can extend to 96K contexts through RoPE extrapolation, which makes it particularly effective for tasks that need extensive input and output. It also supports high-resolution image understanding, fine-grained video understanding, multi-turn multi-image dialogue, web page creation, and the generation of high-quality text-image articles.
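
The jump from 24K to 96K can be made concrete with a small sketch of rotary position embeddings (RoPE). It is a generic illustration, not the model's actual implementation: the token interpretation of "24K" and "96K", the base of 10000, and the helper names `rope_rotate` and `dot` are all assumptions. It shows the property that makes extrapolation plausible at all: the attention score between two rotated vectors depends only on their relative distance, so a distance seen during training produces the same score far beyond the trained length.

```python
import math

TRAIN_CONTEXT = 24 * 1024   # assumption: "24K" read as a token count
TARGET_CONTEXT = 96 * 1024  # assumption: "96K" read as a token count
EXTENSION_FACTOR = TARGET_CONTEXT // TRAIN_CONTEXT  # 4x beyond the trained length


def rope_rotate(vec, position, base=10000.0):
    """Rotate each consecutive pair of `vec` by a position-dependent angle (generic RoPE)."""
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("RoPE needs an even vector dimension")
    rotated = []
    for i in range(0, dim, 2):
        # Pair i//2 uses frequency base^(-i/dim); early pairs rotate fastest.
        angle = position * base ** (-i / dim)
        cos_a, sin_a = math.cos(angle), math.sin(angle)
        x0, x1 = vec[i], vec[i + 1]
        rotated.extend([x0 * cos_a - x1 * sin_a, x0 * sin_a + x1 * cos_a])
    return rotated


def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))


query = [0.3, -1.2, 0.7, 0.5]
key = [1.0, 0.4, -0.6, 0.9]

# Same relative distance (10), once inside and once beyond the trained range.
score_inside = dot(rope_rotate(query, 100), rope_rotate(key, 90))
score_beyond = dot(rope_rotate(query, 90_000), rope_rotate(key, 89_990))
```

The two scores agree up to floating-point error. What extrapolation still has to cope with is relative distances larger than any seen in training, and the source does not say which scaling scheme the model uses for that.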

Who Can Benefit from InternLM-XComposer-2.5?

The target audience includes researchers, developers, content creators, and enterprise users. Researchers and developers who need to process large amounts of text and image data will find it useful. Content creators can use it to automatically generate high-quality text-image content. Enterprises can integrate it into their products to create product documentation and marketing materials more efficiently.

Example Scenarios:

Researchers use the model for analyzing and understanding multimodal datasets.

Content creators use the model to generate text-image articles automatically.

Enterprises integrate the model into their products to automate more of their customer service.

Key Features:

Supports long-context input and output, handling contexts up to 96K long.

High-resolution image understanding, supporting images of any ratio.

Fine-grained video understanding, treating videos as composite images made up of tens to hundreds of frames.

Multi-turn multi-image dialogue support, enabling natural human-machine interactions.

Web page creation based on text and image instructions, generating HTML, CSS, and JavaScript code.

Generates high-quality text-image articles using Chain-of-Thought and Direct Preference Optimization techniques.
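
The video feature listed above treats a clip as a set of tens to hundreds of frames. One simple way to pick those frames is uniform sampling, sketched below. The helper name `sample_frame_indices` and the midpoint strategy are assumptions for illustration; the source does not describe how the model selects frames.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick `num_samples` evenly spaced frame indices from a clip of `total_frames` frames.

    Hypothetical helper: each index is the midpoint of one of `num_samples`
    equal segments, so the first and last moments of the clip are not over-weighted.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= total_frames:
        # Short clip: keep every frame.
        return list(range(total_frames))
    step = total_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


indices = sample_frame_indices(total_frames=100, num_samples=4)
# indices == [12, 37, 62, 87]
```

The selected frames can then be passed to the model as an ordered list of images, which is how a video ends up looking like a composite image input.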

Getting Started:

1. Install the necessary environment and dependencies, making sure the system requirements are met.

2. Use the provided sample code or API to interact with the model.

3. Adjust model parameters according to specific needs for optimal performance.

4. Utilize the model for text and image understanding and creation tasks.

5. Evaluate model outputs and iterate based on feedback.
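
The steps above can be sketched with Hugging Face `transformers`. Treat this as a rough outline under stated assumptions rather than the official usage: the model id `internlm/internlm-xcomposer2d5-7b`, the `chat` method exposed by the model's remote code, and its keyword arguments should all be checked against the official model card. The helpers `build_generation_kwargs`, `load_model`, and `ask` are hypothetical names introduced here.

```python
MODEL_ID = "internlm/internlm-xcomposer2d5-7b"  # assumed model id; verify before use


def build_generation_kwargs(deterministic=True, num_beams=3):
    """Step 3: gather the decoding parameters you are likely to tune."""
    return {"do_sample": not deterministic, "num_beams": num_beams}


def load_model(model_id=MODEL_ID):
    """Steps 1-2: load weights and tokenizer (needs torch, transformers, and a CUDA GPU)."""
    import torch
    from transformers import AutoModel, AutoTokenizer

    model = AutoModel.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, trust_remote_code=True
    ).cuda().eval()
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model.tokenizer = tokenizer
    return model, tokenizer


def ask(model, tokenizer, query, image_paths, **generation_kwargs):
    """Step 4: run one turn. `model.chat` comes from the model's remote code (assumed signature)."""
    import torch

    with torch.no_grad(), torch.autocast(device_type="cuda", dtype=torch.float16):
        response, _history = model.chat(tokenizer, query, image_paths, **generation_kwargs)
    return response


if __name__ == "__main__":
    model, tokenizer = load_model()
    answer = ask(
        model,
        tokenizer,
        "Describe this image in detail.",
        ["./example.jpg"],  # placeholder path
        **build_generation_kwargs(),
    )
    print(answer)  # Step 5: inspect the output, adjust parameters, and repeat
```

Keeping the decoding parameters in one helper makes step 5 easier: each iteration changes a single dictionary rather than scattered call sites.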