InternVL3: A Powerful Open-Source Multimodal Large Language Model

InternVL3 is an open-source multimodal large language model (MLLM) released by OpenGVLab. It combines visual perception with language-based reasoning, which makes it usable across a wide range of applications.

Multimodal Input: Accepts text, images, and videos as input and can combine them within a single prompt.

Robust Multimodal Understanding and Reasoning: Handles complex multimodal tasks, such as answering questions that require interpreting visual content and reasoning about it in text.

Wide Applicability: Suitable for various domains including tool usage, GUI interaction, industrial image analysis, and 3D visual perception.

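Native Multimodal Pre-training: Rather than adapting a text-only LLM to visual inputs after the fact, InternVL3 learns its language and multimodal capabilities together in a single pre-training stage, using both multimodal data and pure-text corpora.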

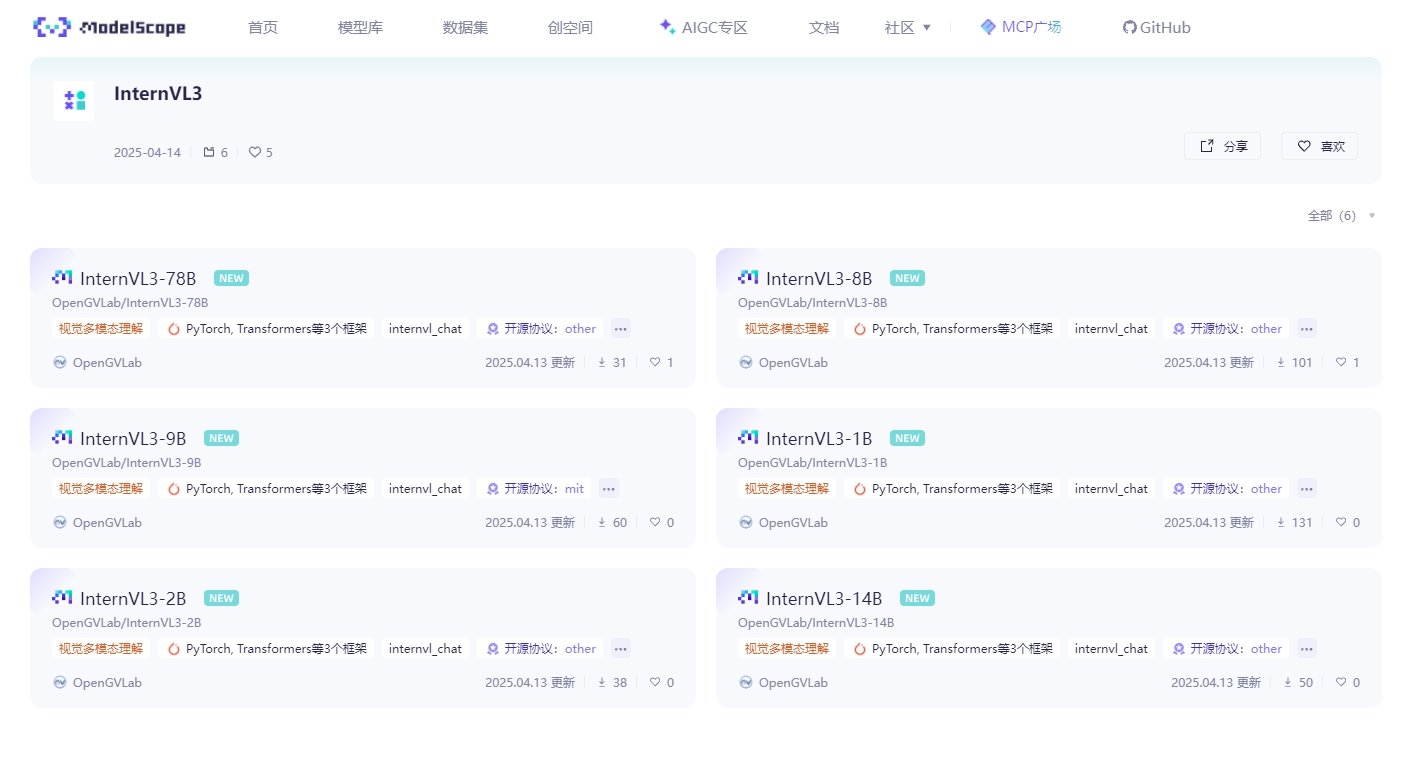

Flexible Model Sizes: Available in seven sizes, from 1B to 78B parameters, so users can trade performance against resource requirements and pick a model that fits their computational environment.

Strong Text Performance: According to OpenGVLab's reported results, InternVL3's overall text performance even surpasses that of the Qwen2.5 series.
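Choosing among the seven sizes is mostly a question of memory. In bf16 or fp16 each parameter takes 2 bytes, so the weights of an 8B model alone occupy roughly 16 GB. The sketch below turns that arithmetic into a rough size picker. It assumes the nominal sizes from the model names, and its 0.8 headroom factor (memory held back for activations and the KV cache) is an arbitrary assumption, not an official guideline.

```python
# Nominal InternVL3 sizes, in billions of parameters, taken from the model names.
# Exact parameter counts differ somewhat from these round numbers.
NOMINAL_SIZES_B = (1, 2, 8, 9, 14, 38, 78)

# Bytes needed to store one parameter in common floating-point formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Weights-only memory in GB (1e9 bytes): billions of params times bytes per param."""
    return params_billion * BYTES_PER_PARAM[dtype]


def largest_fitting_size(budget_gb: float, dtype: str = "bf16", headroom: float = 0.8):
    """Largest nominal size whose weights fit in `headroom * budget_gb`, or None.

    The remaining share of the budget is a crude allowance for activations,
    the KV cache, and image or video tokens, none of which are modelled here.
    """
    usable = budget_gb * headroom
    fitting = [s for s in NOMINAL_SIZES_B if weight_memory_gb(s, dtype) <= usable]
    return max(fitting) if fitting else None
```

On a 24 GB GPU, for instance, this picks the 9B model in bf16 (about 18 GB of weights), while the 78B model needs roughly 156 GB for its weights alone and therefore usually has to be spread across several GPUs.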

InternVL3 is designed for a diverse audience, including:

AI Developers: Leverage its powerful multimodal processing capabilities to rapidly build and optimize multimodal applications.

Data Scientists: Apply it to data analysis and model development work that involves visual as well as textual data.

Image Processing Engineers: Benefit from its strengths in industrial image analysis and 3D visual perception to tackle complex image-related tasks.

Researchers: Explore and advance the field of multimodal technology through research and experimentation.

InternVL3's versatility translates into numerous practical applications:

Industrial Production: Analyze images from production lines to detect quality issues in real time, improving efficiency and reducing defects.

Smart Security: Process video footage to automatically flag unusual behavior and raise alerts, strengthening security monitoring.

Education: Assist educators in creating engaging multimedia teaching materials by combining text, images, and videos to enrich learning experiences.

Getting started with InternVL3 takes six steps:

1. Access ModelScope: Visit the ModelScope community to find InternVL3 model information and download links.

2. Choose Your Model: Select the appropriate model size based on your project's requirements and computational resources.

3. Install Dependencies: Install the required libraries, such as `transformers` and `torch`, and make sure your runtime environment is properly configured.

4. Load and Initialize: Load the model weights and configuration files to initialize the model instance.

5. Prepare Your Data: Prepare your input data (text, images, or videos) and preprocess it according to the model's specifications.

6. Run Inference: Execute inference using the loaded model and process the output results as needed.

InternVL3's open-source nature fosters collaboration and innovation within the multimodal AI community. Its powerful capabilities and diverse applications make it a valuable asset for researchers and developers alike.
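Steps 3, 4, and 6 can be sketched roughly as follows. This sketch follows the pattern shown on the Hugging Face model cards for InternVL3: the model is loaded through `AutoModel` with `trust_remote_code=True`, and the conversational `chat` method comes from the model's own remote code rather than from `transformers` itself. The `OpenGVLab/InternVL3-<size>` naming scheme and the generation settings are assumptions to check against the model card. The imports are deferred into the loader so the helper definitions can be read and imported without the heavy dependencies.

```python
SIZES = ("1B", "2B", "8B", "9B", "14B", "38B", "78B")


def model_id(size: str) -> str:
    """Build a repository ID such as "OpenGVLab/InternVL3-8B" (naming scheme assumed)."""
    if size not in SIZES:
        raise ValueError(f"unknown InternVL3 size: {size!r}")
    return f"OpenGVLab/InternVL3-{size}"


def load_internvl3(path: str):
    """Load model and tokenizer from a hub ID or a local directory.

    Requires torch and transformers; downloads the weights on first use.
    """
    # Imported lazily so this module can be imported without the heavy dependencies.
    import torch
    from transformers import AutoModel, AutoTokenizer

    model = AutoModel.from_pretrained(
        path,
        torch_dtype=torch.bfloat16,  # 2 bytes per parameter
        low_cpu_mem_usage=True,
        trust_remote_code=True,      # InternVL3 ships its own modeling code
    ).eval()
    if torch.cuda.is_available():
        model = model.cuda()
    tokenizer = AutoTokenizer.from_pretrained(path, trust_remote_code=True, use_fast=False)
    return model, tokenizer


def ask(model, tokenizer, question: str, pixel_values=None, max_new_tokens: int = 256) -> str:
    """Send one question; `chat` is defined by the model's remote code, not by transformers."""
    generation_config = dict(max_new_tokens=max_new_tokens, do_sample=False)
    # pixel_values=None means a text-only query.
    return model.chat(tokenizer, pixel_values, question, generation_config)
```

Typical use would be `model, tokenizer = load_internvl3(model_id("1B"))` followed by `ask(model, tokenizer, "Hello, who are you?")`. For an image, the model card passes a tensor of preprocessed image tiles as `pixel_values` and puts an `<image>` placeholder in the question. If you download the weights from ModelScope instead, pass the local download directory as `path`.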
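For image input (step 5), InternVL models receive images as fixed-size tiles. The example code on the InternVL model cards includes a `dynamic_preprocess` helper that picks a tile grid matching the image's aspect ratio, cuts 448×448 tiles, and optionally appends a thumbnail of the whole image. The sketch below reimplements only the grid-selection arithmetic with the standard library, so no image is actually loaded or resized. The defaults (448-pixel tiles, at most 12 tiles) are assumptions taken from those examples and should be checked against the card for the checkpoint you use.

```python
from typing import List, Tuple

Grid = Tuple[int, int]           # (columns, rows) of tiles
Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def candidate_grids(min_num: int = 1, max_num: int = 12) -> List[Grid]:
    """All tile grids whose tile count lies in [min_num, max_num], fewest tiles first."""
    grids = {
        (cols, rows)
        for cols in range(1, max_num + 1)
        for rows in range(1, max_num + 1)
        if min_num <= cols * rows <= max_num
    }
    # Deterministic order: by tile count, then by columns, then by rows.
    return sorted(grids, key=lambda g: (g[0] * g[1], g[0], g[1]))


def choose_grid(width: int, height: int, min_num: int = 1, max_num: int = 12,
                tile: int = 448) -> Grid:
    """Pick the grid whose aspect ratio is closest to the image's."""
    aspect = width / height
    best, best_diff = (1, 1), float("inf")
    for cols, rows in candidate_grids(min_num, max_num):
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and width * height > 0.5 * tile * tile * cols * rows:
            # Same ratio but more tiles: only switch if the image is large enough.
            best = (cols, rows)
    return best


def plan_tiles(width: int, height: int, max_num: int = 12, tile: int = 448,
               use_thumbnail: bool = True) -> Tuple[Grid, List[Box], int]:
    """Return the chosen grid, crop boxes on the resized image, and total tile count."""
    cols, rows = choose_grid(width, height, 1, max_num, tile)
    # The image would be resized to (cols * tile, rows * tile) and cut row by row.
    boxes = [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]
    # A whole-image thumbnail is added only when there is more than one tile.
    total = len(boxes) + (1 if use_thumbnail and len(boxes) > 1 else 0)
    return (cols, rows), boxes, total
```

For an 800×600 photo, for example, the 4:3 aspect ratio maps exactly onto a 4×3 grid, giving 12 tiles plus a thumbnail, while a 448×448 image stays a single tile with no thumbnail.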
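For video input, a common approach, and the one the InternVL model-card video example follows in spirit, is to sample a fixed number of frames spread evenly across the clip, preprocess each frame like an image, and mark each one in the prompt with an `<image>` placeholder. The sketch below covers only the index arithmetic and the prompt string. The segment count of 8 is an arbitrary default, the `FrameN: <image>` prefix format is an assumption modelled on that example, and decoding the frames themselves is left out.

```python
from typing import List


def uniform_frame_indices(num_frames: int, num_segments: int = 8) -> List[int]:
    """Split the clip into equal segments and take the frame at each segment's midpoint."""
    if num_frames <= 0 or num_segments <= 0:
        raise ValueError("num_frames and num_segments must be positive")
    seg = num_frames / num_segments
    # Clamp so rounding can never point past the last frame.
    return [min(int(seg / 2 + seg * k), num_frames - 1) for k in range(num_segments)]


def video_prompt(question: str, num_sampled: int) -> str:
    """Prefix the question with one numbered <image> placeholder per sampled frame."""
    prefix = "".join(f"Frame{i + 1}: <image>\n" for i in range(num_sampled))
    return prefix + question
```

For an 80-frame clip, this samples frames 5, 15, ..., 75. Each sampled frame would then be preprocessed like a still image before being passed to the model.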