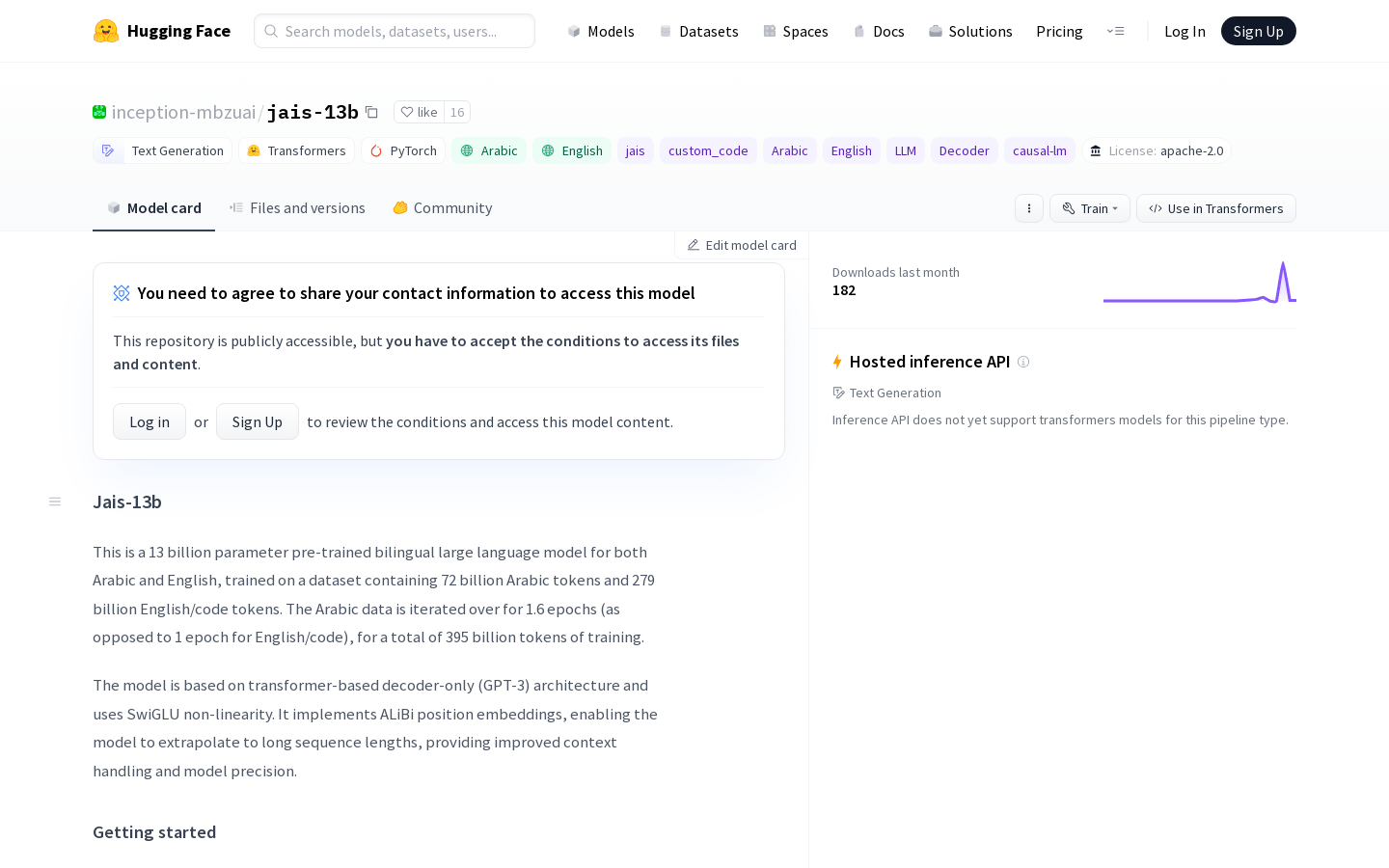

This is a 13-billion-parameter pre-trained bilingual large language model supporting Arabic and English, trained on a dataset of 72 billion Arabic tokens and 279 billion English/code tokens. The Arabic data was repeated for 1.6 epochs (compared with 1 epoch for English/code), so the model saw roughly 72 × 1.6 ≈ 115 billion Arabic tokens, giving a total of about 395 billion training tokens. The model is based on a decoder-only Transformer architecture (GPT-3 style) and uses the SwiGLU nonlinear activation function. It uses ALiBi positional embeddings, which can extrapolate to sequence lengths longer than those seen in training, improving context handling and model accuracy.
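To make the SwiGLU activation mentioned above concrete, here is a minimal pure-Python sketch of a SwiGLU feed-forward block. It is an illustration only, not the model's actual implementation: the function names, the tiny dimensions, the weight values, and the absence of bias terms are all assumptions made for the example.

```python
import math


def silu(x: float) -> float:
    """SiLU (Swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def matvec(weights, vec):
    """Multiply a (rows x cols) matrix, given as nested lists, by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward sketch: w_down @ (silu(w_gate @ x) * (w_up @ x)).

    Bias-free projections are an assumption of this sketch.
    """
    gate = [silu(g) for g in matvec(w_gate, x)]   # gating branch
    up = matvec(w_up, x)                          # linear branch
    hidden = [g * u for g, u in zip(gate, up)]    # element-wise product
    return matvec(w_down, hidden)                 # project back to model width


# Hypothetical toy sizes: model width 2, hidden width 3.
x = [1.0, -2.0]
w_gate = [[0.5, 0.1], [-0.3, 0.2], [0.0, 1.0]]
w_up = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w_down = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
y = swiglu_ffn(x, w_gate, w_up, w_down)
print(y)
```

Compared with a plain two-layer feed-forward block, the gated form uses a third projection (`w_gate` here) whose activated output scales the linear branch element by element.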

Intended use:

Research use

Commercial use, such as chat assistants and customer service

Example use cases:

Use as a base model for Arabic natural language processing research

Develop applications with integrated Arabic language functionality

Fine-tune for downstream tasks such as chat assistants

Model features:

Supports generative dialogue in Arabic and English

Can be fine-tuned for specific downstream tasks

Provides context awareness

Supports long-sequence generation
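Long-sequence support rests on the ALiBi positional scheme named in the model description. The sketch below shows the idea in pure Python: each attention head adds a penalty to its attention scores that grows linearly with the distance between query and key, so no learned position table caps the sequence length. The head count of 8 and the function names are assumptions chosen for illustration; the slope formula is the geometric sequence proposed for power-of-two head counts in the ALiBi paper, and this model's exact configuration is not stated here.

```python
def alibi_slopes(num_heads: int):
    """Per-head slopes 2^(-8h/n) for h = 1..n (power-of-two head counts only)."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles power-of-two head counts")
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(slope: float, seq_len: int):
    """Causal ALiBi bias matrix for one head.

    Entry [i][j] is -slope * (i - j) for keys at or before the query (j <= i)
    and -inf for future keys, which the causal mask hides.
    """
    neg_inf = float("-inf")
    return [
        [-slope * (i - j) if j <= i else neg_inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


slopes = alibi_slopes(8)          # hypothetical head count
short = alibi_bias(slopes[0], 4)  # bias for a short sequence
long_ = alibi_bias(slopes[0], 64) # bias for a longer sequence

# The penalty depends only on the query-key distance, not on sequence length,
# which is why the same rule applies to positions never seen in training.
print(short[3][1], long_[3][1])
print(long_[63][0])
```

The bias matrix is added to the attention scores before the softmax, so distant tokens are down-weighted smoothly rather than cut off.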