What is Janus-Pro-1B?

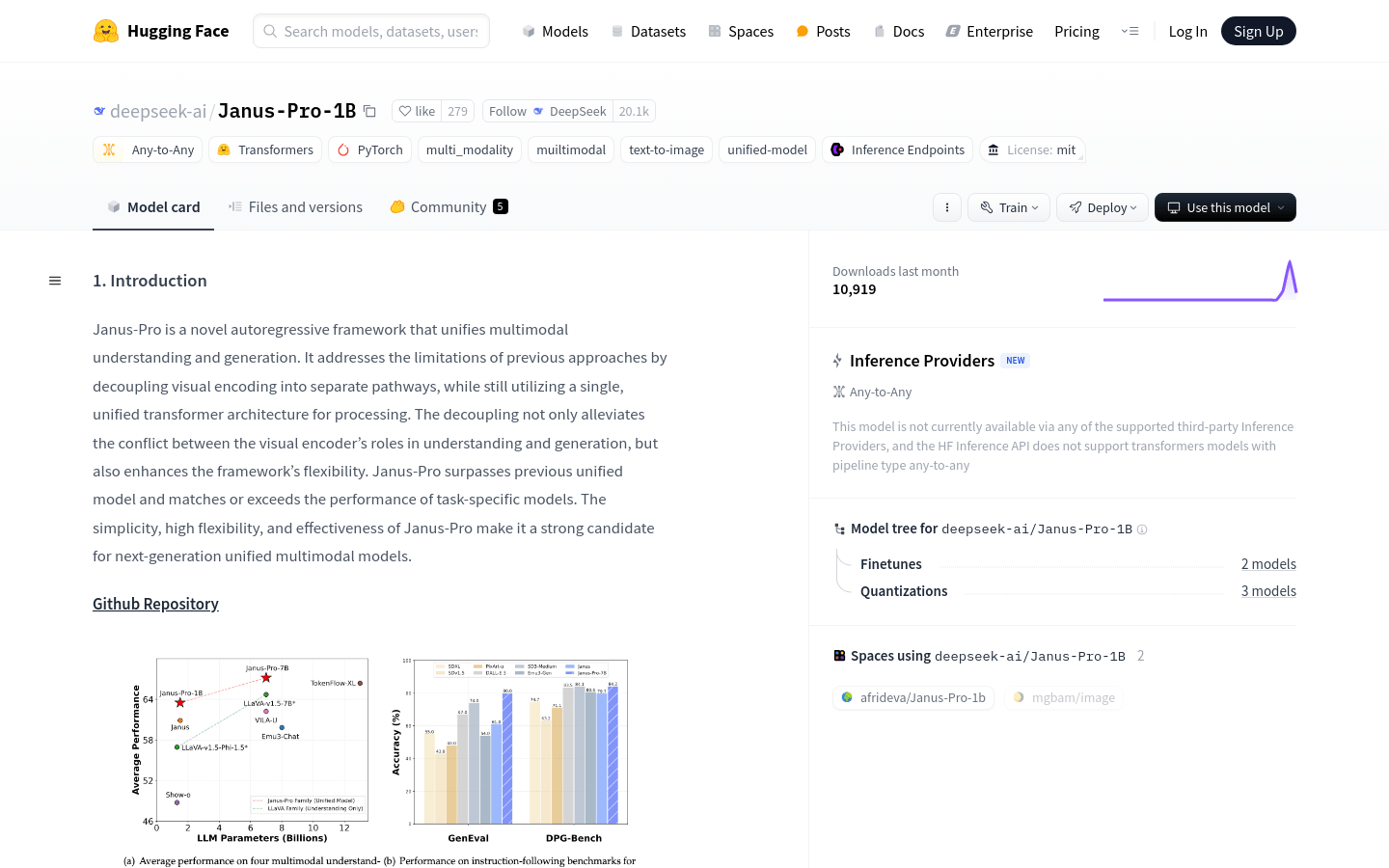

Janus-Pro-1B is a multimodal model from DeepSeek that handles both multimodal understanding (for example, image to text) and generation (text to image) in one system. It reduces the conflict between the two tasks by giving each its own visual encoding path while keeping a single, unified Transformer architecture. This decoupling lets each path use a visual representation suited to its task, which makes the model more flexible across multimodal tasks.
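The decoupled design can be pictured with a small toy sketch. It is not the model's real code: the names `Segment`, `route`, and `build_sequence`, and the three encoder labels, are hypothetical and only illustrate the idea that images take a different encoding path depending on the task while everything still lands in one sequence for the shared Transformer.

```python
from dataclasses import dataclass
from typing import List

TASKS = ("understanding", "generation")


@dataclass
class Segment:
    kind: str     # "text" or "image"
    payload: str  # raw text, or an image identifier


def route(segment: Segment, task: str) -> str:
    """Pick the (hypothetical) encoder that would embed this segment."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task}")
    if segment.kind == "text":
        return "text_tokenizer"  # text takes the same path in both tasks
    # Images take a task-specific path: this is the decoupling.
    return "understanding_encoder" if task == "understanding" else "generation_tokenizer"


def build_sequence(segments: List[Segment], task: str) -> List[str]:
    """All segments end up in one sequence for the single shared Transformer."""
    return [f"{route(s, task)}({s.payload})" for s in segments]


prompt = [Segment("text", "Describe this:"), Segment("image", "cat.png")]
print(build_sequence(prompt, "understanding"))
# ['text_tokenizer(Describe this:)', 'understanding_encoder(cat.png)']
```

The same image segment would be routed to `generation_tokenizer` when the task is `"generation"`, while the backbone that consumes the sequence stays the same.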

Target Audience:

Developers and researchers who need multimodal understanding and generation can benefit from this model. It is particularly useful for combined image and text tasks, where it helps them build and refine solutions quickly. Because the model is openly released, it suits academic research and, subject to its license terms, commercial applications.

Use Cases:

Image Captioning: Input an image, and the model generates a text description of it.

Text-to-Image Generation: Input text descriptions, and the model creates corresponding images.

Multimodal Question Answering: Input questions with related images, and the model answers by combining image information.
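As a rough sketch, the three use cases above differ mainly in how the request is phrased and whether an image is attached. The helper below, `make_conversation`, is a hypothetical name introduced for illustration. The role strings and the `<image_placeholder>` token follow the conversation format used in the examples of DeepSeek's Janus repository as best recalled here, so they should be treated as assumptions and checked against the current model documentation. File names such as `photo.jpg` are placeholders.

```python
from typing import Dict, List, Optional

# Assumed chat format (verify against the model documentation).
USER, ASSISTANT = "<|User|>", "<|Assistant|>"
IMAGE_SLOT = "<image_placeholder>"


def make_conversation(prompt: str, image_path: Optional[str] = None) -> List[Dict]:
    """Build a two-turn conversation: the user request and an empty assistant slot."""
    user_turn: Dict = {"role": USER, "content": prompt}
    if image_path is not None:
        # Understanding tasks: mark where the image goes and attach its path.
        user_turn["content"] = f"{IMAGE_SLOT}\n{prompt}"
        user_turn["images"] = [image_path]
    return [user_turn, {"role": ASSISTANT, "content": ""}]


# Image captioning: an image plus a request to describe it.
captioning = make_conversation("Describe this image in one sentence.", "photo.jpg")

# Text-to-image generation: a text description only, no input image.
text_to_image = make_conversation("A watercolor painting of a lighthouse at dawn.")

# Multimodal question answering: an image plus a question about it.
visual_qa = make_conversation("How many people are in the picture?", "photo.jpg")
```

Captioning and question answering share the same structure and differ only in the prompt text. Text-to-image generation omits the image and is handled by the model's generation path.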

Key Features:

Supports multimodal understanding and generation across multiple tasks.

Uses separated visual encoding paths to improve model flexibility.

Built on the DeepSeek-LLM-1.5b-base language model.

Uses the SigLIP-L vision encoder for understanding tasks, which accepts 384 x 384 image inputs.

Openly licensed, allowing further development and research.

Provides documentation and community support to help users get started quickly.

Offers various inference endpoints for easy deployment and use.

Works with PyTorch and the Hugging Face ecosystem.

Getting Started:

1. Visit the Hugging Face website and find the Janus-Pro-1B model page.

2. Review the model documentation to understand its architecture and features.

3. Download the model files or access the model through Hugging Face's API.

4. Load the model in Python with the Hugging Face Transformers library (the official example also uses DeepSeek's `janus` package).

5. Prepare input data such as images or text and preprocess it.

6. Feed the data into the model to get understanding results (text) or generation results (images).

7. Post-process results as needed, such as decoding text or rendering images.

8. Deploy the model to a production environment or continue local development and research.
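The steps above can be sketched for the image understanding case roughly as follows. This is a minimal sketch under several assumptions, not the definitive setup: the model ID is taken to be `deepseek-ai/Janus-Pro-1B`; the `janus` package is assumed to be installed from DeepSeek's Janus GitHub repository; and the calls to `VLChatProcessor`, `load_pil_images`, and `prepare_inputs_embeds` follow that repository's example as best recalled here. The function names `pick_device`, `download`, `load`, and `ask` are hypothetical, and the CPU fallback is an untested assumption (the repository example targets CUDA).

```python
MODEL_ID = "deepseek-ai/Janus-Pro-1B"  # assumed Hugging Face repository ID


def pick_device(cuda_available: bool) -> str:
    """Prefer the GPU; fall back to the CPU (slow, and an assumption here)."""
    return "cuda" if cuda_available else "cpu"


def download(model_id: str = MODEL_ID) -> str:
    """Step 3: fetch the model files into the local Hugging Face cache."""
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id=model_id)


def load(model_id: str = MODEL_ID):
    """Step 4: load the chat processor and the model."""
    import torch
    from transformers import AutoModelForCausalLM
    from janus.models import VLChatProcessor  # from DeepSeek's Janus repository

    processor = VLChatProcessor.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)
    device = pick_device(torch.cuda.is_available())
    return processor, model.to(torch.bfloat16).to(device).eval()


def ask(processor, model, question: str, image_path: str, max_new_tokens: int = 256) -> str:
    """Steps 5 to 7: preprocess the inputs, run the model, decode the answer."""
    from janus.utils.io import load_pil_images

    # Step 5: pair the question with the image (assumed chat format).
    conversation = [
        {"role": "<|User|>", "content": f"<image_placeholder>\n{question}",
         "images": [image_path]},
        {"role": "<|Assistant|>", "content": ""},
    ]
    images = load_pil_images(conversation)
    inputs = processor(
        conversations=conversation, images=images, force_batchify=True
    ).to(model.device)

    # Step 6: embed text and image together, then generate with the language model.
    tokenizer = processor.tokenizer
    output_ids = model.language_model.generate(
        inputs_embeds=model.prepare_inputs_embeds(**inputs),
        attention_mask=inputs.attention_mask,
        pad_token_id=tokenizer.eos_token_id,
        bos_token_id=tokenizer.bos_token_id,
        eos_token_id=tokenizer.eos_token_id,
        max_new_tokens=max_new_tokens,
        do_sample=False,  # greedy decoding for reproducible answers
    )

    # Step 7: turn the generated token IDs back into text.
    return tokenizer.decode(output_ids[0].cpu().tolist(), skip_special_tokens=True)
```

A typical call would be `processor, model = load()` followed by `ask(processor, model, "What is in this picture?", "photo.jpg")`, where `photo.jpg` is a placeholder path. The heavy imports sit inside the functions so that the file can be imported without the model dependencies installed.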