Jockey is a conversational video agent built on the Twelve Labs API and LangGraph. It combines existing large language models (LLMs) with the Twelve Labs API and uses LangGraph to route the workload of complex video workflows to the appropriate foundation models. LLMs plan the execution steps and interact with users. Video-related tasks are passed to the Twelve Labs API, which is backed by video foundation models (VFMs) that process video natively, without intermediate representations such as pre-generated subtitles.
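The division of labor described above (an LLM plans, a video model does the video work) can be illustrated with a small, library-free sketch. It does not use LangGraph or Jockey's code. `State`, `planner`, `video_worker`, `chat_responder` and the keyword rule are hypothetical stand-ins for an LLM planner node and for a node that would call the Twelve Labs API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical state passed between nodes, loosely modeled on a graph state object.
@dataclass
class State:
    request: str
    route: str = ""
    log: List[str] = field(default_factory=list)


# Crude stand-in for the LLM's judgment of whether a request needs video processing.
VIDEO_KEYWORDS = ("search", "clip", "edit", "summarize", "find")


def planner(state: State) -> State:
    """Stand-in for the LLM planner: decides which node should handle the request."""
    text = state.request.lower()
    state.route = "video" if any(k in text for k in VIDEO_KEYWORDS) else "chat"
    state.log.append(f"planner -> {state.route}")
    return state


def video_worker(state: State) -> State:
    """Stand-in for a node that would send the task to a video foundation model."""
    state.log.append("video_worker: sent request to video foundation model")
    return state


def chat_responder(state: State) -> State:
    """Stand-in for the LLM answering the user directly."""
    state.log.append("chat_responder: answered with LLM")
    return state


# Routing table from the planner's decision to the node that handles it.
NODES: Dict[str, Callable[[State], State]] = {
    "video": video_worker,
    "chat": chat_responder,
}


def run(request: str) -> State:
    """Run the planner, then hand the state to whichever node it selected."""
    state = planner(State(request))
    return NODES[state.route](state)
```

For example, `run("Find the clip where the goal is scored")` is routed to `video_worker`, while `run("What can you do?")` goes to `chat_responder`. In LangGraph itself, the same shape would be expressed as graph nodes with a conditional edge out of the planner. The keyword check here only stands in for the LLM's decision.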

Target audience:

Jockey is primarily aimed at developers and teams who handle complex video workflows, especially those who want to use large language models to enhance video content creation and editing. It suits professional users who need a high degree of customization and automation in their video processing tasks.

Example usage scenarios:

Video editing teams use Jockey to automate video editing and subtitle generation.

Content creators use Jockey to generate video drafts and storyboards.

Educational institutions use Jockey to create interactive video tutorials.

Product Features:

Combines large language models with video processing APIs to distribute the workload of complex video workflows.

Uses LangGraph to allocate tasks, improving video processing efficiency.

Enhances the interactive user experience through LLM-based logical planning.

Processes video tasks directly with video foundation models, without intermediate representations.

Supports customization and extension to suit different video-related use cases.

Provides both terminal and LangGraph API server deployment options to fit development and testing needs.
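Customization and extension of this kind are often handled by registering new worker nodes that the planner can dispatch to. The sketch below assumes a simple decorator-based registry. `register_worker`, `dispatch`, `WORKERS` and both example workers are hypothetical. They are not Jockey's actual extension API.

```python
from typing import Callable, Dict

# Hypothetical registry of worker functions, keyed by name.
WORKERS: Dict[str, Callable[[dict], dict]] = {}


def register_worker(name: str):
    """Decorator that adds a worker function to the registry under `name`."""
    def decorator(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        if name in WORKERS:
            raise ValueError(f"worker {name!r} already registered")
        WORKERS[name] = fn
        return fn
    return decorator


@register_worker("video-search")
def video_search(task: dict) -> dict:
    # Placeholder: a real worker would query a video search API here.
    return {"worker": "video-search", "query": task["query"], "results": []}


@register_worker("storyboard")
def storyboard(task: dict) -> dict:
    # Example custom worker for a new use case: split a script into scene lines.
    scenes = [s.strip() for s in task["script"].split(".") if s.strip()]
    return {"worker": "storyboard", "scenes": scenes}


def dispatch(name: str, task: dict) -> dict:
    """Send a task to the named worker, failing clearly if it is unknown."""
    try:
        worker = WORKERS[name]
    except KeyError:
        raise KeyError(f"no worker registered as {name!r}") from None
    return worker(task)
```

For example, `dispatch("storyboard", {"script": "Open on city. Cut to hero."})` returns the scenes `["Open on city", "Cut to hero"]`. The registry keeps new capabilities additive: adding a worker does not require editing the dispatcher.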

Usage tutorial:

1. Install the necessary external dependencies, such as FFmpeg, Docker and Docker Compose.

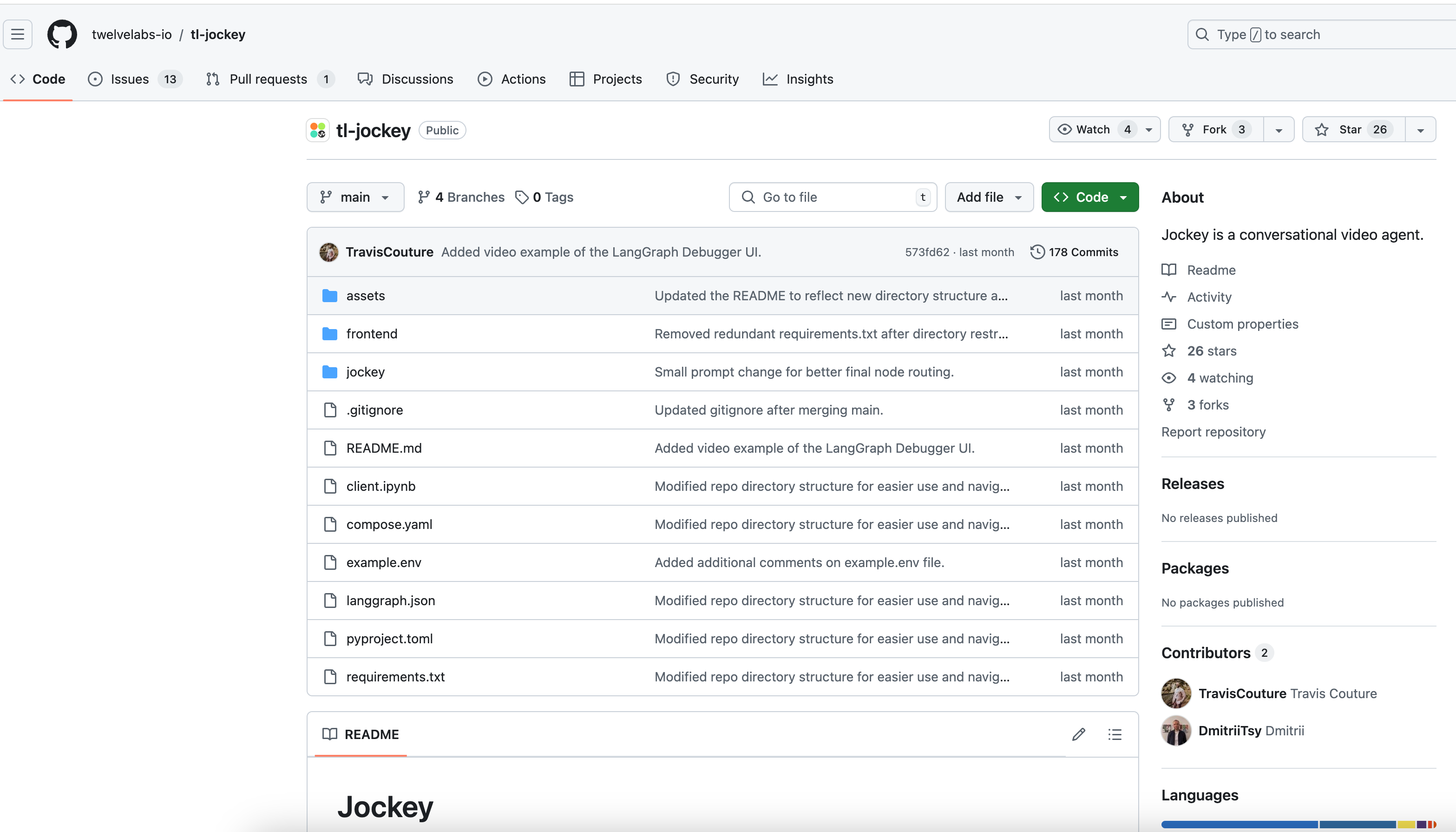

2. Clone Jockey's GitHub repository to your local environment.

3. Create and activate a Python virtual environment, then install the required Python packages.

4. Configure the .env file with the necessary API keys and environment variables.

5. Use Docker Compose to deploy the Jockey API server.

6. Run a Jockey instance in the terminal for testing, or use the LangGraph API server for end-to-end deployment.

7. Use the LangGraph Debugger UI for debugging and end-to-end testing.
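Steps 1 and 4 are the most common sources of setup failures. A small preflight script can catch missing tools and unset keys before deployment. This is a sketch under assumptions: the tool list and the key names `TWELVE_LABS_API_KEY` and `LLM_PROVIDER` are illustrative, so check the repository's own .env example for the real variables.

```python
import shutil
from pathlib import Path
from typing import Dict, List

# Assumed requirements. Docker Compose v2 runs as `docker compose`, so checking
# for the `docker` binary covers it; the env key names are illustrative only.
REQUIRED_TOOLS = ("ffmpeg", "docker")
REQUIRED_ENV_KEYS = ("TWELVE_LABS_API_KEY", "LLM_PROVIDER")


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_tools(tools=REQUIRED_TOOLS) -> List[str]:
    """Return the tools that cannot be found on PATH."""
    return [t for t in tools if shutil.which(t) is None]


def missing_env_keys(env: Dict[str, str], keys=REQUIRED_ENV_KEYS) -> List[str]:
    """Return required keys that are absent or have an empty value."""
    return [k for k in keys if not env.get(k)]


def preflight(env_path: str = ".env") -> List[str]:
    """Collect human-readable problems; an empty list means the checks passed."""
    problems = [f"not on PATH: {t}" for t in missing_tools()]
    path = Path(env_path)
    if not path.exists():
        problems.append(f"missing file: {env_path}")
    else:
        env = parse_env(path.read_text())
        problems += [f"unset in {env_path}: {k}" for k in missing_env_keys(env)]
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("\n".join(issues) if issues else "preflight OK")
```

Running the script from the repository root after step 4 prints either `preflight OK` or one line per problem. The .env parser is deliberately minimal and does not handle multi-line values or variable expansion.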