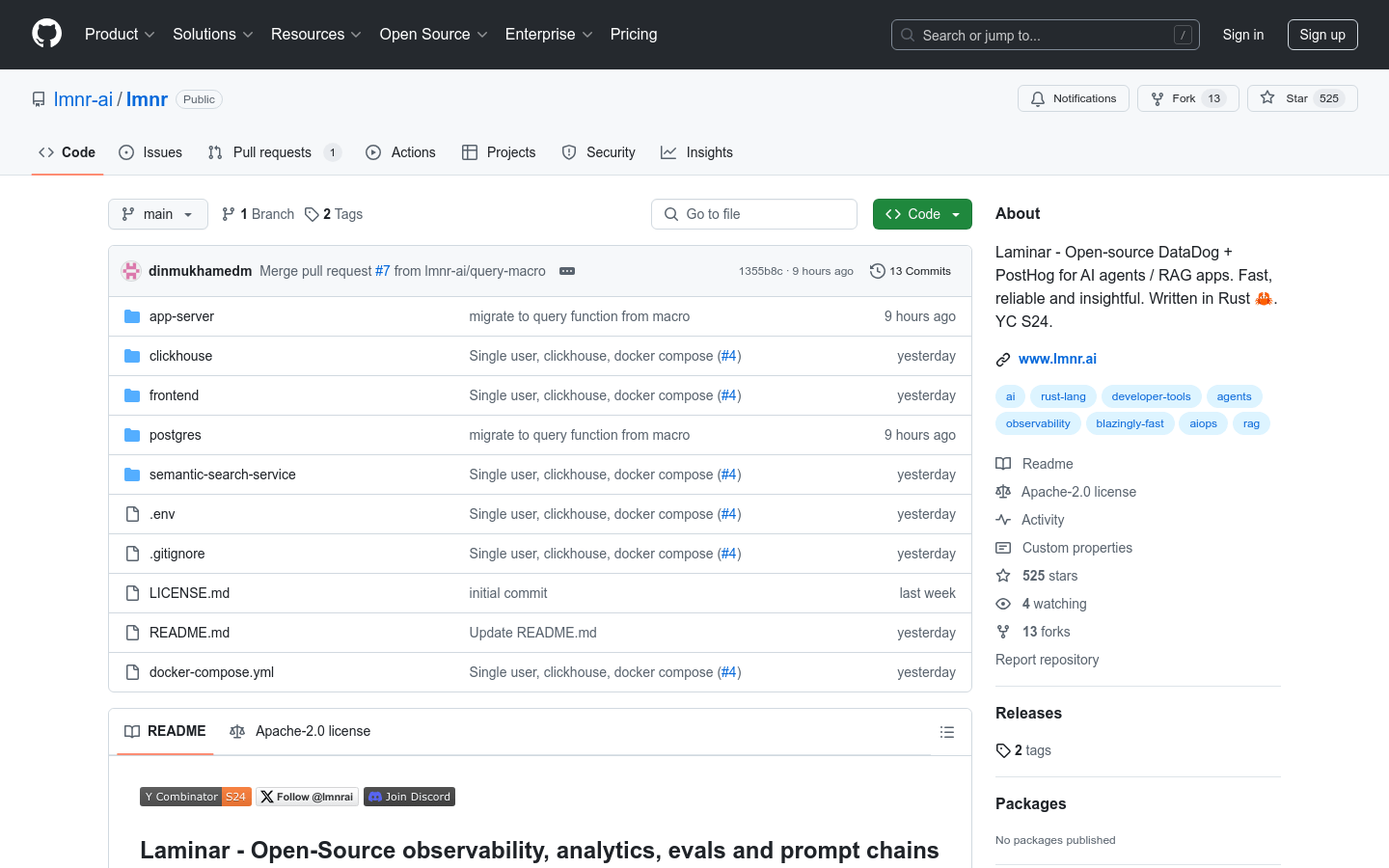

Laminar is an open-source monitoring and analytics tool for AI agent and RAG applications, offering features similar to DataDog and PostHog. Its automatic instrumentation is built on OpenTelemetry, which enables fast and reliable data collection and analysis. Laminar is written in Rust for performance and reliability when processing data at scale. It helps developers and enterprises optimize the performance and user experience of AI applications through detailed tracing, events, and analytics.

Target audience:

Laminar's target audience is AI application developers and enterprises, especially those that need to monitor and analyze the performance of their AI agents and RAG applications. It suits situations where large amounts of data must be collected and analyzed quickly and reliably, helping teams optimize application performance, improve user experience, and make better-informed business decisions.

Example usage scenarios:

Developers use Laminar to monitor the performance of their AI chatbots so that they can find and fix bottlenecks quickly.

Companies analyze user behavior with Laminar to improve the accuracy of their AI recommendation systems.

Data scientists use Laminar to track and analyze the training of large-scale machine learning models, improving the efficiency and effectiveness of those models.

Product Features:

Provides automatic instrumentation based on OpenTelemetry: LLM and vector database calls are traced automatically with only two lines of code.

Supports semantic event analysis: it can run LLM pipelines as background jobs and convert their output into trackable metrics.

Built on a modern technology stack, including Rust, RabbitMQ, Postgres, and ClickHouse, for high performance and scalability.

Provides an intuitive and fast dashboard for visualizing traces, spans, and events.

Supports local deployment through Docker Compose, which lets developers get started quickly.

Provides automatic instrumentation and a decorator for Python code, simplifying the tracing of function inputs and outputs.

Supports sending instant events and data-based evaluation events, enhancing the flexibility of event processing.

Offers Laminar pipelines, which let you create and manage LLM call chains in the UI and simplify the management of complex workflows.

How to use:

Visit Laminar's GitHub page for project details and documentation.

Start the local version with Docker Compose and follow the steps in the documentation.

Integrate Laminar into your project and automatically trace LLM calls by adding a few lines of code.

Manually track the input and output of a specific function using the provided decorator.

View and analyze tracing data through Laminar's dashboard.

Send events as needed, including instant events and data-based evaluation events.

Create and manage pipelines for LLM call chains in the Laminar UI.

Read the documentation and tutorials to learn more about how to optimize AI applications using Laminar.
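To make the decorator step more concrete, here is a minimal sketch of what decorator-based input/output tracing generally looks like. It uses only the Python standard library and is not Laminar's SDK: the names `trace_io`, `RECORDED_SPANS`, and `build_prompt` are hypothetical, and the in-memory list merely stands in for a real tracing backend. Consult the Laminar documentation for the actual decorator and its options.

```python
import functools
import time

# Hypothetical in-memory store standing in for a tracing backend.
RECORDED_SPANS = []


def trace_io(func):
    """Record the name, inputs, output, and duration of each call to func."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        span = {
            "name": func.__name__,
            "input": {"args": args, "kwargs": kwargs},
        }
        try:
            result = func(*args, **kwargs)
            span["output"] = result
            return result
        except Exception as exc:
            # Keep the failure on the span, then let the caller see it too.
            span["error"] = repr(exc)
            raise
        finally:
            # Runs on both success and failure, so every call is recorded.
            span["duration_s"] = time.perf_counter() - start
            RECORDED_SPANS.append(span)

    return wrapper


@trace_io
def build_prompt(question, context=""):
    # Illustrative function; in practice this might wrap an LLM call.
    return f"Context: {context}\nQuestion: {question}"


build_prompt("What is a span?", context="tracing docs")
```

After the call, `RECORDED_SPANS` holds one record with the function name, its arguments, its return value, and how long it took. A real tracing SDK would export such records to a backend rather than keep them in a list.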