What is Llama-3 8B Instruct 262k?

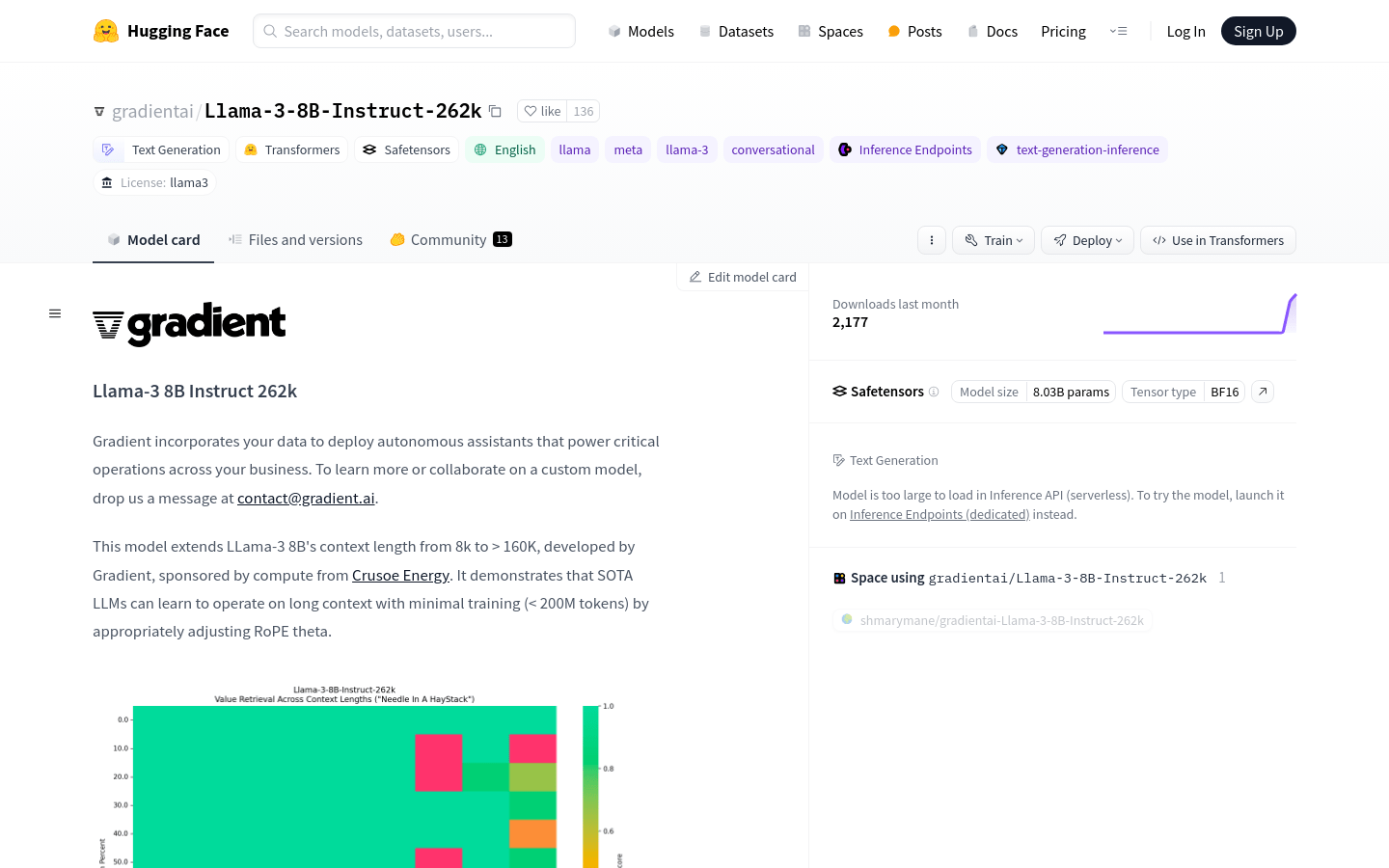

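Llama-3 8B Instruct 262k is a text generation model developed by Gradient AI. It extends the context length of Llama-3 8B from the original 8K tokens to over 160K tokens, and Gradient presents it as state-of-the-art at handling long texts. The "262k" in the name reflects training on sequences of up to 262,144 tokens. Training combined NTK-aware interpolation, used to initialize the RoPE theta (the base of the rotary position embeddings), with data-driven optimization of that value, and was built on the EasyContext Blockwise RingAttention library for efficient training on long sequences.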
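To make the NTK-aware idea concrete, here is a minimal Python sketch of the commonly cited NTK-aware RoPE rule, which scales the rotary base by s^(d/(d-2)) for a context-extension factor s and head dimension d. The Llama 3 8B values (rope_theta = 500000, head dimension 128, 8K original context) are taken from Meta's published config rather than from this article, and the 32x factor simply assumes a 262,144-token target. This covers only the initialization step; the data-driven optimization described above then adjusts the base, so the printed number should not be read as the released model's setting.

```python
# Sketch of NTK-aware RoPE base scaling (initialization only, assumed values).

def rope_inv_freq(base: float, head_dim: int) -> list:
    """Standard RoPE inverse frequencies: base^(-2i/d) for i = 0 .. d/2 - 1."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def ntk_aware_base(base: float, head_dim: int, scale: float) -> float:
    """NTK-aware interpolation: enlarge the RoPE base so the lowest
    frequency is slowed by `scale` while the highest is unchanged."""
    return base * scale ** (head_dim / (head_dim - 2))


# Assumed Llama 3 8B values (from Meta's config, not from this article).
ORIG_BASE = 500_000.0
HEAD_DIM = 128
scale = 262_144 / 8_192  # target context / original context = 32.0

new_base = ntk_aware_base(ORIG_BASE, HEAD_DIM, scale)
old_freqs = rope_inv_freq(ORIG_BASE, HEAD_DIM)
new_freqs = rope_inv_freq(new_base, HEAD_DIM)

print(f"NTK-aware base: {new_base:,.0f}")
# Ratio for the highest-frequency dimension (i = 0): unchanged.
print(new_freqs[0] / old_freqs[0])
# Ratio for the lowest-frequency dimension: slowed by the extension factor.
print(old_freqs[-1] / new_freqs[-1])
```

The two ratios show the design choice: the highest-frequency dimension keeps its original rotation speed, while the lowest-frequency one is slowed by exactly the extension factor, so nearby tokens stay sharply resolved while distant positions are interpolated into the range seen during pre-training.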

Who Can Use Llama-3 8B Instruct 262k?

Researchers and developers who need to process or generate text over very long inputs.

Businesses that require automated assistants or customer service chatbots.

Educators who want to generate personalized learning materials and student feedback.

Content creators looking for assistance in creative writing and article generation.

Example Scenarios:

As a backend for chatbots to provide automatic responses.

To draft initial versions of news articles or reports.

On educational platforms to generate personalized learning materials.

For generating creative content and articles.
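For the chatbot-backend scenario, an instruct model has to receive conversations in the chat format it was tuned on. The sketch below assumes this model keeps the standard Llama 3 Instruct template (special tokens such as `<|start_header_id|>` and `<|eot_id|>`); in practice, Transformers' `tokenizer.apply_chat_template` builds this string from the tokenizer's own template and should be preferred. `Message` and `build_llama3_prompt` are hypothetical helpers written for illustration.

```python
from dataclasses import dataclass
from typing import List

VALID_ROLES = {"system", "user", "assistant"}


@dataclass
class Message:
    role: str     # "system", "user" or "assistant"
    content: str


def build_llama3_prompt(messages: List[Message]) -> str:
    """Render a conversation in the (assumed) Llama 3 Instruct chat format.

    Each turn is wrapped in header tokens and terminated by <|eot_id|>;
    the prompt ends with an open assistant header so the model writes
    the next reply.
    """
    parts = ["<|begin_of_text|>"]
    for m in messages:
        if m.role not in VALID_ROLES:
            raise ValueError(f"unknown role: {m.role!r}")
        parts.append(
            f"<|start_header_id|>{m.role}<|end_header_id|>\n\n"
            f"{m.content.strip()}<|eot_id|>"
        )
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


# Usage: a chatbot backend would keep appending turns to this history.
history = [
    Message("system", "You are a helpful customer service assistant."),
    Message("user", "Where can I download my invoice?"),
]
prompt = build_llama3_prompt(history)
print(prompt)
```

When generating, `<|eot_id|>` marks the end of the assistant's turn, so it is typically treated as a stop token alongside the regular end-of-sequence token.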

Key Features:

Supports long inputs, with a context length exceeding 160K tokens.

Uses NTK-aware interpolation and data-driven optimization of the RoPE base (theta) during training.

Built on the EasyContext Blockwise RingAttention library for efficient training.

Optimized for dialogue scenarios, enhancing usability and safety.

Can be used through Hugging Face Transformers or Meta's original llama3 codebase.

Available in quantized versions, including GGUF files, for easier deployment.
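Long context and quantization both come down to memory. The back-of-the-envelope sketch below estimates weight memory at different bit widths and the size of an fp16 key/value (KV) cache as the context grows. The architecture numbers (about 8.03B parameters, 32 layers, 8 key/value heads, head dimension 128) are assumed from Meta's published Llama 3 8B config, and the estimates ignore quantization metadata, activations, and framework overhead.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelShape:
    n_params: float   # total parameter count
    n_layers: int
    n_kv_heads: int   # grouped-query attention: fewer K/V heads than query heads
    head_dim: int


# Assumed Llama 3 8B shape (from Meta's config, not from this article).
LLAMA3_8B = ModelShape(n_params=8.03e9, n_layers=32, n_kv_heads=8, head_dim=128)


def weight_gib(shape: ModelShape, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GiB."""
    return shape.n_params * bits_per_weight / 8 / GIB


def kv_cache_gib(shape: ModelShape, n_tokens: int, bytes_per_value: int = 2) -> float:
    """KV cache size in GiB: K and V per layer, n_kv_heads * head_dim values each."""
    per_token = 2 * shape.n_layers * shape.n_kv_heads * shape.head_dim * bytes_per_value
    return per_token * n_tokens / GIB


for bits in (16, 8, 4):
    print(f"weights @ {bits}-bit: {weight_gib(LLAMA3_8B, bits):.1f} GiB")
for tokens in (8_192, 160_000, 262_144):
    print(f"fp16 KV cache @ {tokens:,} tokens: {kv_cache_gib(LLAMA3_8B, tokens):.1f} GiB")
```

Under these assumptions, the KV cache alone at the full 262,144-token window is about 32 GiB in fp16, roughly twice the fp16 weights, which is why quantized weights (and, in some runtimes, a quantized or offloaded cache) matter when deploying this model at long context lengths.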

How to Use Llama-3 8B Instruct 262k

Step 1: Visit the Hugging Face Hub and open the Llama-3 8B Instruct 262k model page (gradientai/Llama-3-8B-Instruct-262k).

Step 2: Choose the interface that fits your needs: Hugging Face Transformers or Meta's llama3 codebase.

Step 3: Download the model weights with an API or command-line tool, and install the libraries your chosen interface requires.

Step 4: Use the example code on the model page as a starting point for writing your own prompts or instructions.

Step 5: Generate text with the model and tune generation parameters (for example, temperature and top-p) to improve the output.

Step 6: Apply the generated text to your desired scenario, such as chatbot replies or article generation.

Step 7: Refine your prompts and generation settings over time based on feedback to improve results.
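Tuning generation parameters usually means adjusting sampling settings. The plain-Python sketch below shows what the two most common ones do: temperature rescales the logits before the softmax, and top-p (nucleus) sampling keeps only the smallest set of most likely tokens whose cumulative probability reaches top_p. It illustrates the technique and is not Transformers' internal implementation; with Transformers, the same knobs are passed as `model.generate(..., do_sample=True, temperature=..., top_p=...)`. The function names and the default values 0.6 and 0.9 are illustrative assumptions.

```python
import math
import random
from typing import Dict, List, Optional


def top_p_filter(logits: List[float], temperature: float, top_p: float) -> Dict[int, float]:
    """Apply temperature, then keep the smallest set of most likely tokens whose
    cumulative probability reaches top_p; return renormalized probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (use greedy decoding instead)")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract max for numerical stability
    total = sum(exps)
    ranked = sorted(((i, e / total) for i, e in enumerate(exps)),
                    key=lambda pair: pair[1], reverse=True)
    kept, cum = [], 0.0
    for i, p in ranked:
        kept.append((i, p))
        cum += p
        if cum >= top_p:
            break
    norm = sum(p for _, p in kept)
    return {i: p / norm for i, p in kept}


def sample_token(logits: List[float], temperature: float = 0.6, top_p: float = 0.9,
                 rng: Optional[random.Random] = None) -> int:
    """Draw one token id from the temperature-scaled, top-p-filtered distribution."""
    rng = rng or random.Random()
    dist = top_p_filter(logits, temperature, top_p)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


logits = [2.0, 1.0, 0.1, -1.0]  # toy scores for a 4-token vocabulary
print(top_p_filter(logits, temperature=1.0, top_p=0.9))  # keeps tokens 0, 1, 2
print(top_p_filter(logits, temperature=0.1, top_p=0.9))  # low temperature: only token 0
print(sample_token(logits, rng=random.Random(0)))
```

Lower temperatures and smaller top_p values make replies more deterministic (useful for customer service answers), while higher values increase variety (useful for creative writing).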