What is LLaSA_training?

LLaSA_training is a LLaMA-based project for training speech synthesis models, with a focus on scaling training-time and inference-time compute. It trains on both open-source and internal datasets and supports multiple configurations and training methods, which makes it flexible and scalable. Key benefits include efficient data processing, strong speech synthesis results, and support for multiple languages. Typical applications include smart voice assistants and voice broadcasting systems.

Who can benefit from this project?

The project is aimed at researchers and developers who need high-performance speech synthesis, especially those working on voice synthesis technology, smart voice assistants, or voice broadcasting systems. It helps them build and optimize speech synthesis models quickly, which improves both development efficiency and model performance.

What are some example use cases?

Researchers can use models trained with LLaSA_training to build smart voice assistants with a better voice interaction experience.

Developers can use a trained model to add voice broadcasting features to online education platforms, which can make teaching more efficient.

Companies can use a trained model to improve the voice synthesis module in their customer service systems, which can raise customer satisfaction.

What are the unique features of LLaSA_training?

Trains LLaMA-based speech synthesis models with an emphasis on efficient use of compute.

Works with open-source datasets, including LibriHeavy and Emilia, totaling up to 160,000 hours of data.

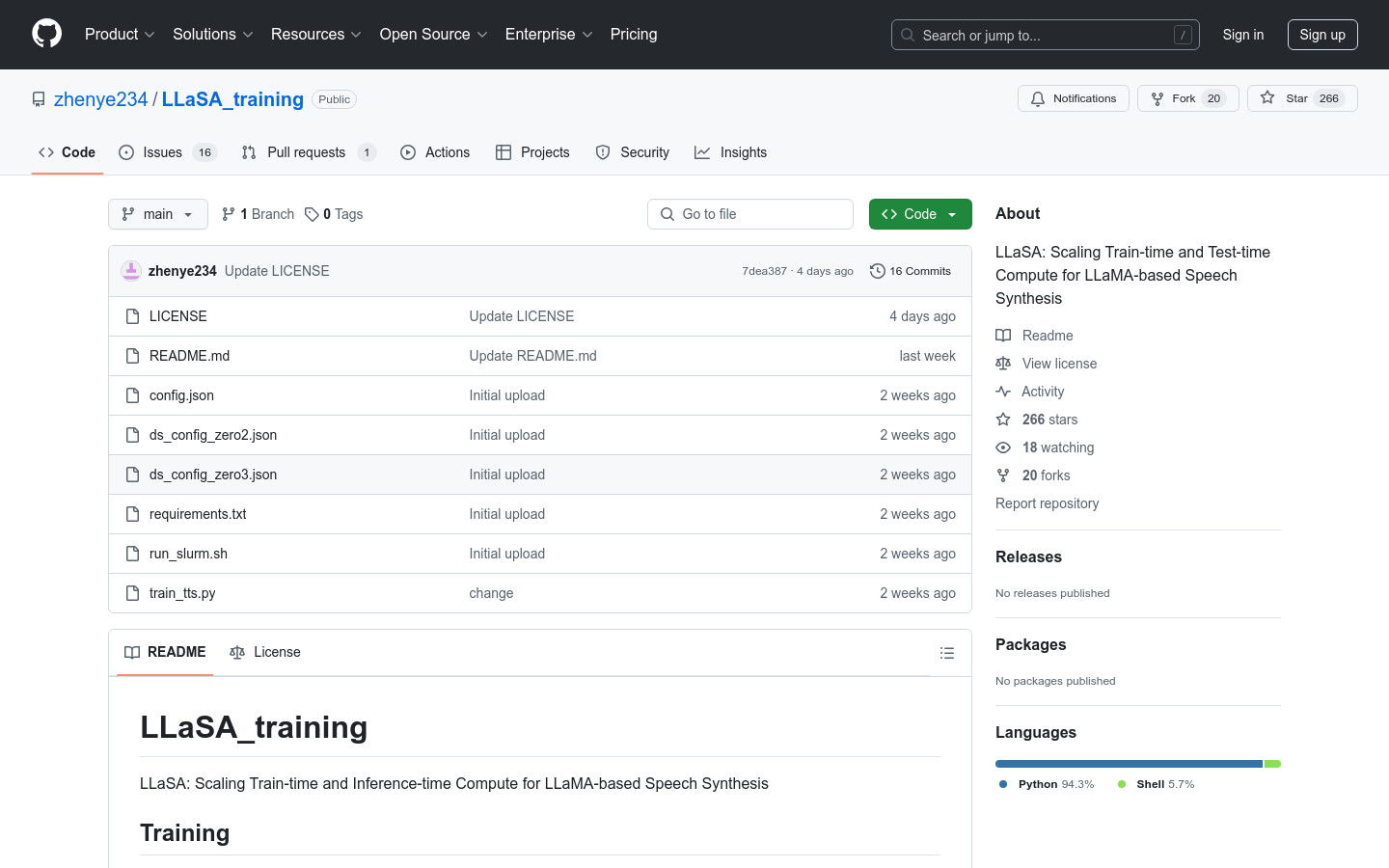

Offers multiple configuration files (such as `ds_config_zero2.json` and `ds_config_zero3.json`) to meet different training needs.

Supports distributed training through the Slurm scheduling system to enhance training efficiency.

Allows direct use of related models on Hugging Face, such as Llasa-3B, Llasa-1B, and Llasa-8B.
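To illustrate what typically separates a ZeRO-2 configuration from a ZeRO-3 one, here is a minimal Python sketch. It assumes the project's configuration files follow the standard DeepSpeed JSON schema, as their names suggest, where `zero_optimization.stage` selects the ZeRO stage. The helper name `make_zero_config` and every value shown are illustrative assumptions, not the contents of the repository's files.

```python
import json


def make_zero_config(stage: int) -> dict:
    """Build a minimal DeepSpeed-style config dict for ZeRO stage 2 or 3.

    Hypothetical helper for illustration; the repository's own JSON
    files may use different keys and values.
    """
    if stage not in (2, 3):
        raise ValueError("this sketch only covers ZeRO stages 2 and 3")

    zero = {
        "stage": stage,                # 2: shard optimizer state and gradients
        "overlap_comm": True,          # overlap communication with computation
        "contiguous_gradients": True,  # reduce memory fragmentation
    }
    if stage == 3:
        # Stage 3 also shards the parameters, so the full 16-bit weights
        # must be gathered when a checkpoint is saved.
        zero["stage3_gather_16bit_weights_on_model_save"] = True

    return {
        "bf16": {"enabled": True},     # assumed precision setting
        "zero_optimization": zero,
        "gradient_clipping": 1.0,      # assumed value
    }


if __name__ == "__main__":
    print(json.dumps(make_zero_config(3), indent=2))
```

As a rule of thumb, stage 2 is usually enough when the model fits on each GPU, while stage 3 trades extra communication for lower per-GPU memory on larger models.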

How do I get started with LLaSA_training?

1. Clone the repository to your local machine: `git clone https://github.com/zhenye234/LLaSA_training.git`

2. Download required open-source datasets like LibriHeavy and Emilia, or prepare your own dataset.

3. Choose an appropriate configuration file (such as `ds_config_zero2.json` or `ds_config_zero3.json`) based on your requirements.

4. Run the training script with `torchrun --nproc_per_node=8 train_tts.py config.json`, or submit the job through the Slurm scheduling system.

5. After training is complete, use the trained model for speech synthesis. The related pretrained models on Hugging Face can also be used directly.
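The launch step above can be scripted. The following is a minimal sketch, assuming the repository has been cloned into a local `LLaSA_training` directory and that `torchrun` is on the `PATH`. The function names `build_train_command` and `launch`, the `repo_dir` default, and the `dry_run` switch are hypothetical helpers, not part of the project.

```python
import shlex
import subprocess
from typing import List


def build_train_command(config_path: str, gpus_per_node: int = 8) -> List[str]:
    """Assemble the torchrun command for single-node training."""
    if gpus_per_node < 1:
        raise ValueError("gpus_per_node must be at least 1")
    return [
        "torchrun",
        f"--nproc_per_node={gpus_per_node}",  # one process per GPU
        "train_tts.py",
        config_path,
    ]


def launch(config_path: str, gpus_per_node: int = 8,
           repo_dir: str = "LLaSA_training", dry_run: bool = True) -> int:
    """Print the command (dry run) or execute it inside the cloned repo."""
    cmd = build_train_command(config_path, gpus_per_node)
    if dry_run:
        print(shlex.join(cmd))
        return 0
    # check=True raises CalledProcessError if training exits with an error.
    return subprocess.run(cmd, cwd=repo_dir, check=True).returncode


if __name__ == "__main__":
    launch("config.json")  # dry run: only prints the command
```

Calling `launch("config.json", dry_run=False)` would start training on 8 GPUs. Keeping the dry run as the default makes it easy to inspect the command before committing GPU time.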