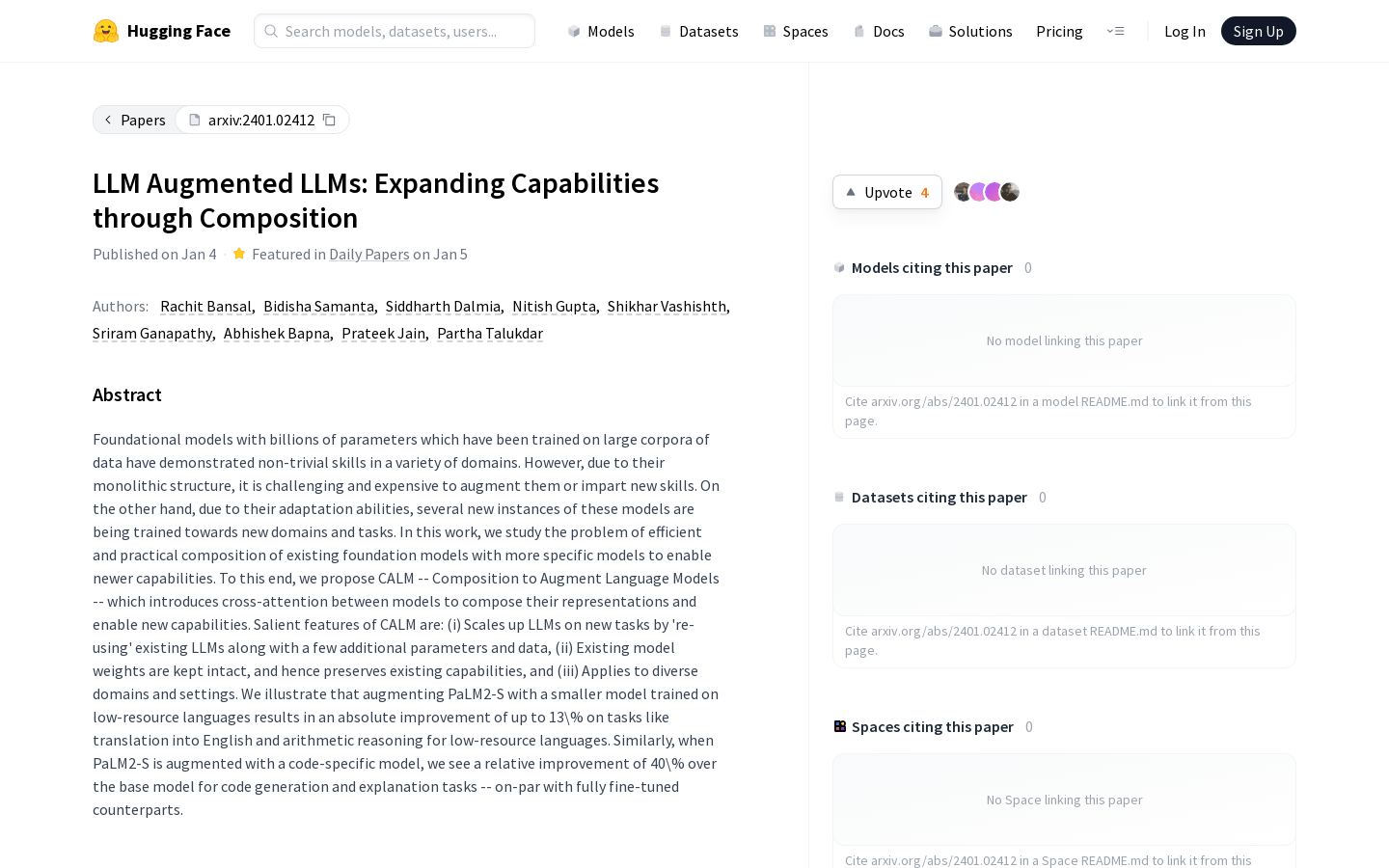

LLM Augmented LLMs gain new capabilities by composing an existing base model with more specialized models. CALM (Composition to Augment Language Models) introduces cross-attention between the models to combine their representations. Its salient features are: (i) it scales up LLMs on new tasks by reusing existing LLMs with a small number of additional parameters and a small amount of data; (ii) it keeps existing model weights unchanged, thus retaining existing capabilities; (iii) it applies to different domains and settings. Experiments show that augmenting PaLM2-S with smaller models trained on low-resource languages yields absolute improvements of up to 13% on tasks such as translation into English and arithmetic reasoning in low-resource languages. Similarly, augmenting PaLM2-S with code-specific models yields up to a 40% improvement over the base model on code generation and interpretation tasks, on par with its fully fine-tuned counterpart.
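The cross-attention composition described above can be illustrated with a minimal NumPy sketch. It is not the CALM implementation: the function `compose_layer`, the parameter names (`W_proj`, `W_q`, `W_k`, `W_v`), the single-head attention, and the residual connection are all assumptions made for illustration. The point it shows is that only a few new matrices are introduced, while the hidden states produced by both existing models are consumed as-is.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def compose_layer(h_anchor, h_aug, params):
    """Hypothetical composition step: anchor states attend to augmenting states.

    h_anchor: (T_anchor, d_anchor) hidden states from the frozen base model.
    h_aug:    (T_aug, d_aug) hidden states from the frozen specialized model.
    params:   the only new (trainable) weights in this sketch.
    """
    # Map the augmenting model's states into the anchor's hidden size.
    h_proj = h_aug @ params["W_proj"]            # (T_aug, d_anchor)
    q = h_anchor @ params["W_q"]                 # queries come from the anchor
    k = h_proj @ params["W_k"]                   # keys come from the augmenting model
    v = h_proj @ params["W_v"]                   # values come from the augmenting model
    scores = q @ k.T / np.sqrt(q.shape[-1])      # (T_anchor, T_aug)
    attn = softmax(scores, axis=-1)
    # Residual add (an assumption): the anchor representation is kept and enriched.
    return h_anchor + attn @ v

rng = np.random.default_rng(0)
T, d_anchor, d_aug = 5, 16, 8
h_anchor = rng.normal(size=(T, d_anchor))
h_aug = rng.normal(size=(T, d_aug))
params = {
    "W_proj": rng.normal(size=(d_aug, d_anchor)) * 0.1,
    "W_q": rng.normal(size=(d_anchor, d_anchor)) * 0.1,
    "W_k": rng.normal(size=(d_anchor, d_anchor)) * 0.1,
    "W_v": rng.normal(size=(d_anchor, d_anchor)) * 0.1,
}

out = compose_layer(h_anchor, h_aug, params)
print(out.shape)
```

In this sketch, setting `W_v` to zeros makes the output equal to `h_anchor`, which mirrors the idea that the base model's behavior is preserved while the new parameters learn what to add.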

Target audience:

Developers working on programming tasks that require extending and enhancing language models

Example usage scenarios:

Augmenting PaLM2-S with code-specific models in code generation and interpretation tasks

Augmenting PaLM2-S with smaller models trained on low-resource languages, for absolute improvements of up to 13% on translation tasks


Product features:

Scale up LLMs on new tasks by reusing existing LLMs with a small number of additional parameters and a small amount of data

Keep existing model weights unchanged and therefore retain existing capabilities

Suitable for different domains and settings