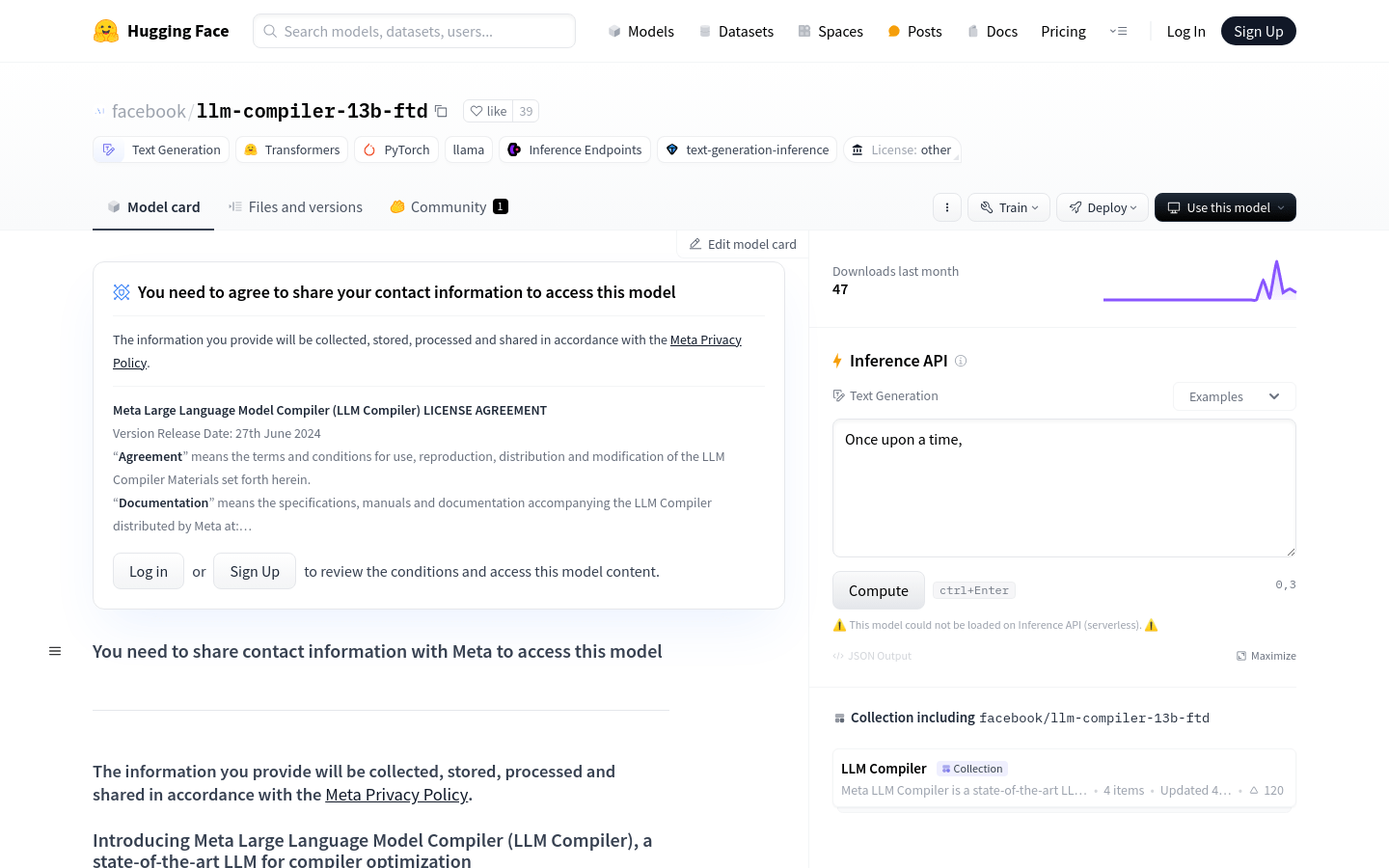

Meta Large Language Model Compiler (LLM Compiler-13b-ftd) is a large language model built on Code Llama and specialized for compiler optimization and code reasoning. It performs well at predicting the effects of LLVM optimizations and at decompiling assembly code, capabilities that can help improve code efficiency and reduce code size.

Target audience:

LLM Compiler is aimed mainly at compiler researchers and engineers, as well as developers who need to optimize code. It suits professional users who want to use deep learning to improve code efficiency and reduce program size.

Example usage scenarios:

Optimizing the code size of the intermediate representation (IR) generated by a compiler.

Predicting optimal sequences of optimization passes when developing new compiler technologies.

Decompiling assembly code into LLVM IR to facilitate further code analysis and optimization.
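To check a suggested pass sequence in practice, one option is to run it through LLVM's `opt` tool and compare a code-size proxy against a baseline. The sketch below is a minimal illustration under several assumptions: pass names use new-pass-manager syntax, `opt` is on `PATH`, and the number of instruction lines in textual IR stands in for code size. The helper names (`opt_command`, `count_ir_instructions`, `ir_size_after`) are hypothetical and are not part of LLM Compiler.

```python
import os
import subprocess
import tempfile


def opt_command(ir_path: str, passes: list[str], out_path: str) -> list[str]:
    """Build an `opt` invocation for a pass list (new-pass-manager names assumed)."""
    return ["opt", "-passes=" + ",".join(passes), "-S", ir_path, "-o", out_path]


def count_ir_instructions(ir_text: str) -> int:
    """Rough code-size proxy: count instruction lines inside function bodies.

    Heuristic only: comments after ';' are dropped, labels (lines ending in ':')
    and function headers/closing braces are skipped.
    """
    count = 0
    in_function = False
    for raw in ir_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # strip trailing comments
        if not line:
            continue
        if line.startswith("define"):
            in_function = True  # start of a function body
            continue
        if in_function and line == "}":
            in_function = False  # end of the function body
            continue
        if in_function and not line.endswith(":"):
            count += 1  # anything else inside a body is an instruction
    return count


def ir_size_after(ir_path: str, passes: list[str]) -> int:
    """Run `opt` (assumed on PATH) and return the instruction count of the result."""
    with tempfile.TemporaryDirectory() as tmp:
        out_path = os.path.join(tmp, "out.ll")
        subprocess.run(opt_command(ir_path, passes, out_path), check=True, capture_output=True)
        with open(out_path) as f:
            return count_ir_instructions(f.read())
```

For example, comparing `ir_size_after("input.ll", suggested)` with `ir_size_after("input.ll", ["default<Oz>"])` gives a rough indication of whether a hypothetical model-suggested list `suggested` beats the standard `-Oz` pipeline on that file. Counting lines is only a heuristic; binary size after code generation is a more faithful measure.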

Product features:

Predicts the impact of LLVM optimizations on code size

Generates optimization pass lists that minimize code size

Generates LLVM IR from x86_64 or ARM assembly code

Achieves near-perfect output replication in compiler optimization tasks

Available in 7B and 13B parameter sizes to meet different serving and latency requirements

Use is subject to Meta’s License and Acceptable Use Policy
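One way to sanity-check LLVM IR generated from assembly is a round trip: compile the generated IR back to assembly and compare it with the original listing. The sketch assumes x86-64 AT&T-syntax assembly (where `#` starts a comment), an `llc` binary on `PATH`, and plain line-by-line comparison via `difflib`. The helper names are hypothetical, and ARM assembly would need a different comment rule, since `#` marks immediates there.

```python
import difflib
import subprocess


def normalize_asm(asm: str) -> list[str]:
    """Drop comments, blank lines, and extra whitespace so cosmetic differences are ignored.

    Assumes x86-64 AT&T syntax, where '#' begins a comment.
    """
    lines = []
    for raw in asm.splitlines():
        line = raw.split("#", 1)[0].strip()
        if line:
            lines.append(" ".join(line.split()))  # collapse internal whitespace
    return lines


def round_trip_score(original_asm: str, regenerated_asm: str) -> tuple[bool, float]:
    """Return (exact match after normalization, similarity ratio in [0, 1])."""
    a, b = normalize_asm(original_asm), normalize_asm(regenerated_asm)
    return a == b, difflib.SequenceMatcher(None, a, b).ratio()


def compile_ir_to_asm(ir_text: str) -> str:
    """Hypothetical helper: lower IR back to x86-64 assembly with llc (reads stdin, writes stdout)."""
    result = subprocess.run(
        ["llc", "-mtriple=x86_64-unknown-linux-gnu", "-o", "-", "-"],
        input=ir_text,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

`round_trip_score(original, compile_ir_to_asm(lifted_ir))` reports whether the normalized listings match exactly, plus a similarity ratio. Matching should use the same target and optimization settings that produced the original assembly, which this sketch does not control.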

Usage tutorial:

Install the necessary libraries, such as transformers.

Use AutoTokenizer to load the tokenizer of the pre-trained model.

Create a text-generation pipeline with transformers.pipeline.

Pass the code snippet through the pipeline and set generation parameters such as do_sample and top_k.

Retrieve the generated sequences and evaluate the generated text to determine whether it is suitable.
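The tutorial steps above map onto a short script. This is a sketch rather than an official example: the model ID `facebook/llm-compiler-13b-ftd` is assumed to be the Hugging Face repository name (access requires accepting Meta's license), the sampling values are illustrative rather than tuned, `torch` and `accelerate` are assumed to be installed for `torch_dtype` and `device_map`, and `generate` is a hypothetical helper. The heavy imports happen inside the function, so defining it does not download or load anything.

```python
# Assumed Hugging Face model ID; the repository is gated behind Meta's license.
MODEL_ID = "facebook/llm-compiler-13b-ftd"

# Generation parameters forwarded to the pipeline; values are illustrative only.
GENERATION_KWARGS = {
    "do_sample": True,
    "top_k": 10,
    "temperature": 0.1,
    "top_p": 0.95,
    "num_return_sequences": 1,
    "max_length": 200,
}


def generate(code_snippet: str, model_id: str = MODEL_ID) -> list[str]:
    """Run a code snippet through a text-generation pipeline and return the outputs."""
    # Imported lazily so this module can be loaded without torch/transformers installed.
    import torch
    import transformers
    from transformers import AutoTokenizer

    # Step 2: load the tokenizer that matches the pre-trained model.
    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # Step 3: set up the text-generation pipeline.
    pipe = transformers.pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.float16,
        device_map="auto",  # needs the accelerate package
    )

    # Step 4: pass the snippet through the pipeline with the generation parameters.
    sequences = pipe(code_snippet, eos_token_id=tokenizer.eos_token_id, **GENERATION_KWARGS)

    # Step 5: collect the generated text for evaluation.
    return [seq["generated_text"] for seq in sequences]
```

Calling `generate("%3 = alloca i32, align 4")` returns a list of generated strings, which should be inspected or validated before use, since sampled output can differ between runs.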