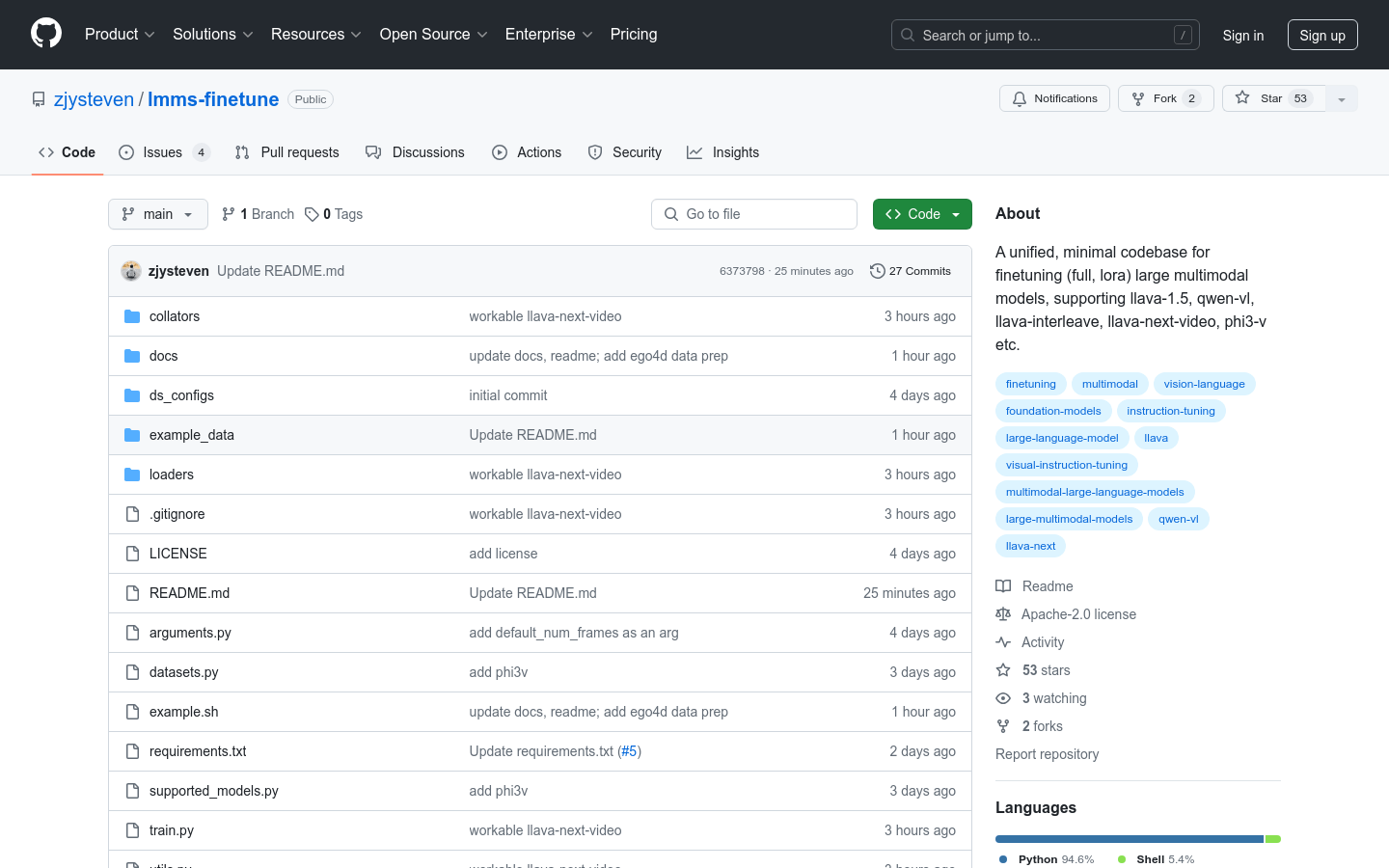

What is lmms-finetune?

lmms-finetune is a unified code base designed to simplify the fine-tuning of large multimodal models (LMMs). It gives researchers and developers a structured framework for integrating and fine-tuning recent LMMs, and it supports a variety of strategies such as full fine-tuning, LoRA, and more. The code base is simple and lightweight, easy to understand and modify, and works with a range of models, including LLaVA-1.5, Phi-3-Vision, Qwen-VL-Chat, LLaVA-NeXT-Interleave, and LLaVA-NeXT-Video.

Who needs lmms-finetune?

lmms-finetune is aimed mainly at researchers and developers who need to fine-tune large multimodal models for specific tasks or datasets. For both academic research and industrial applications, it provides a simple, flexible, and easy-to-scale platform that lets users focus on fine-tuning and experiments rather than on the underlying implementation details.

Example usage scenarios

1. Video content analysis: Researchers use lmms-finetune to fine-tune LLaVA-1.5 to improve performance on specific video content analysis tasks.

2. Image recognition: Developers use this code base to fine-tune the Phi-3-Vision model for a new image recognition task.

3. Teaching: Educational institutions use lmms-finetune to help students understand how large multimodal models are fine-tuned and applied.

Product Features

Unified fine-tuning framework: simplifies the integration and fine-tuning process.

Multiple fine-tuning strategies: supports full fine-tuning, LoRA, Q-LoRA, etc.

Concise code base: easy to understand and modify.

Multi-model support: covers single-image models, multi-image/interleaved-image models, and video models.

Detailed documentation and examples: help users get started quickly.

Flexible customization: supports quick experimentation and custom requirements.

Usage tutorial

1. Clone the code base: git clone https://github.com/zjysteven/lmms-finetune.git

2. Set up a Conda environment: run conda create -n lmms-finetune python=3.10 -y, then conda activate lmms-finetune

3. Install dependencies: python -m pip install -r requirements.txt

4. Install additional libraries, for example: python -m pip install --no-cache-dir --no-build-isolation flash-attn

5. View supported models: run python supported_models.py to list the supported models.

6. Modify the training script: edit example.sh following the examples or documentation, and set parameters such as the target model and data path.

7. Run the training script: run bash example.sh to start the fine-tuning process.
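The setup steps above (1 to 5) can be collected into a single script. The following is a minimal sketch rather than an official installer: the run helper, the DRY_RUN switch, the setup_env function name, and the cd into the cloned directory are assumptions added for illustration, and the eval line is there only because conda activate generally needs the shell hook loaded when called from a non-interactive script. By default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch of tutorial steps 1-5. By default it only prints the commands
# (DRY_RUN=1); run with DRY_RUN=0 to actually execute them.
set -e

DRY_RUN="${DRY_RUN:-1}"      # hypothetical switch, not part of lmms-finetune
ENV_NAME="lmms-finetune"     # environment name used in the tutorial

# Print a command, and execute it unless this is a dry run.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" != "1" ]; then
    "$@"
  fi
}

setup_env() {
  # Step 1: clone the code base, then enter it (the cd is an assumption).
  run git clone https://github.com/zjysteven/lmms-finetune.git
  run cd lmms-finetune

  # Step 2: create and activate the Conda environment.
  run conda create -n "$ENV_NAME" python=3.10 -y
  if [ "$DRY_RUN" != "1" ]; then
    # Load conda's shell hook so `conda activate` works in a script.
    eval "$(conda shell.bash hook)"
  fi
  run conda activate "$ENV_NAME"

  # Step 3: install the dependencies listed in the repository.
  run python -m pip install -r requirements.txt

  # Step 4: install an additional library (flash-attn, as in the tutorial).
  run python -m pip install --no-cache-dir --no-build-isolation flash-attn

  # Step 5: list the supported models.
  run python supported_models.py
}

setup_env
```

Saved under a name of your choice, the script previews the commands when run as is and executes them when run with DRY_RUN=0. Steps 6 and 7 are left manual on purpose, since example.sh has to be edited for the target model and data path before bash example.sh is run.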

With lmms-finetune, users can fine-tune models more efficiently and focus on solving practical problems without worrying about complex underlying implementations.